Getting Started with Semantic Kernel and C#

An AI orchestration and retrieval-augmented generation (RAG) framework for .NET

This article is my entry in C# Advent 2023. Visit CSAdvent.Christmas for more articles in the series by other authors.

Introducing Semantic Kernel and the Kernel Object

Generative AI systems use large language models (LLMs) like OpenAI’s GPT-3.5 Turbo (ChatGPT) or GPT-4 to respond to text prompts from the user. But these systems have a serious limitation: they only know the information baked into the model at training time. Techniques like retrieval-augmented generation (RAG) help overcome this by pulling in additional information at request time.

AI orchestration frameworks make this possible by tying together LLMs and additional sources of information via RAG. Additionally, AI orchestration systems can provide capabilities to generative AI systems, such as inserting records in a database, sending emails, or calling out to external systems.

In this article we’ll look at the high-level capabilities for building AI orchestration systems in C# with Semantic Kernel, a rapidly maturing open-source AI orchestration framework.

Getting Started

In order to work with Semantic Kernel, you’ll need to add a NuGet reference to Microsoft.SemanticKernel and select the latest available release. Note that at the time of writing, Semantic Kernel is available only as a pre-release (v1.0.0 Beta 8), so you may need to include pre-release packages in your NuGet package manager to see it.

Many of the C# code blocks also assume you have the following using statements in place:

```csharp
using Microsoft.SemanticKernel;
using Microsoft.SemanticKernel.Orchestration;
using Microsoft.SemanticKernel.Planners;
using Microsoft.SemanticKernel.Planning;
using System.ComponentModel;
using Newtonsoft.Json; // Alternatively: System.Text.Json.Serialization
```

With these basics covered, let’s get into Semantic Kernel by talking about the kernel.

The Kernel

In Semantic Kernel, everything flows through the IKernel interface, which is the main interaction point with the framework.

To create an IKernel, you use a KernelBuilder, which follows the same builder pattern .NET uses elsewhere, such as building an IConfiguration from a ConfigurationBuilder.

Here’s a sample that creates an IKernel and adds some basic services to it:

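```csharp
// ConsoleLogger.LoggerFactory is assumed to be an ILoggerFactory exposed by a small helper class
// (as in the Semantic Kernel samples); settings holds your own Azure OpenAI configuration values.
IKernel kernel = new KernelBuilder()
    .WithLoggerFactory(ConsoleLogger.LoggerFactory)
    .WithAzureOpenAIChatCompletionService(settings.OpenAiDeploymentName,
                                          settings.AzureOpenAiEndpoint,
                                          settings.AzureOpenAiKey)
    .Build();
```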

This kernel works with one or more semantic functions or custom plugins and uses them to generate a response.

This particular kernel uses a ConsoleLogger for logging and connects to an Azure OpenAI model deployment to generate responses. Note that you can also use OpenAI without Azure, or even a local LLM via LLamaSharp, if you want to.

The kernel isn’t much use on its own so let’s create a function we can invoke.

Semantic Functions

Semantic functions are prompt templates that Semantic Kernel fills in and sends to a large language model to generate a response. In a large project you might have many different semantic functions for the different types of tasks your application needs to support.

Here’s a sample semantic function that instructs the model to respond as if it were Batman:

```csharp
string batTemplate = @"
Respond to the user's request as if you were Batman. Be creative and funny, but keep it clean.
Try to answer user questions to the best of your ability.

User: How are you?
AI: I'm fine. It's another wonderful day of inflicting vigilante justice upon the city.

User: Where's a good place to shop for books?
AI: You know who likes books? The Riddler. You're not the Riddler, are you?

User: {{$input}}
AI:
";
```

```csharp
ISKFunction batFunction =
    kernel.CreateSemanticFunction(batTemplate,
        description: "Responds to queries in the voice of Batman");
```

This is an example of few-shot prompting where we give several examples of sample inputs and outputs to help the LLM learn the format we prefer.

Also note that the template includes {{$input}} which is a placeholder for the input text that will be provided to the function.

Note: the description here is not required for early examples, but is needed later on when we introduce planners.
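If it helps to picture what actually reaches the model, here is a plain C# sketch of the substitution step. The Render helper is hypothetical and only handles the single {{$input}} variable; Semantic Kernel’s own template engine supports more syntax than this, so treat it as an illustration of the idea rather than how the library is implemented.

```csharp
using System;

// Hypothetical helper, not Semantic Kernel's template engine:
// it only replaces the single {{$input}} variable.
static string Render(string text, string userInput) =>
    text.Replace("{{$input}}", userInput);

string template = @"Respond to the user's request as if you were Batman.
User: How are you?
AI: I'm fine. It's another wonderful day of inflicting vigilante justice upon the city.
User: {{$input}}
AI:";

// The rendered prompt is the full text that would be sent to the LLM.
string prompt = Render(template, "What's the current date?");
Console.WriteLine(prompt);
```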

Now that we’ve covered defining functions, let’s see how to invoke them.

Invoking Semantic Functions

You can invoke a semantic function by providing the function to the kernel along with a text input.

Here we’ll prompt the user to type in a string and then send it on to the kernel:

```csharp
Console.WriteLine("Enter your message to Batman");
string message = Console.ReadLine() ?? string.Empty; // ReadLine returns null at end of input

KernelResult result = await kernel.RunAsync(message, batFunction);
Console.WriteLine(result.GetValue<string>());
```

This causes the user’s text to be added to the prompt template and the whole string to be sent on to the LLM to generate a response.

For example, when I typed “What’s the current date?”, the LLM generated the following response:

Today is the 27th of October, but in Gotham City, we don’t really pay attention to dates. We’re too busy fighting crime and brooding in the shadows.

The kernel took in our request and generated a response via the LLM on Azure OpenAI. Note that while the date is wrong, the tone of the response respected our template.

We’ll come back to fixing the date response, but for now let’s look at how Semantic Kernel can go beyond a single call to an LLM by chaining functions together.

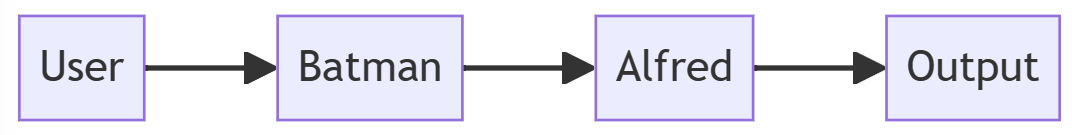

Chaining Together Semantic Functions

Let’s define another function that acts as Alfred, butler to Batman / Bruce Wayne, and summarizes what Batman says.

We’ll do this by defining another template and another ISKFunction:

```csharp
string alfredTemplate = @"
Respond to the user's request as if you were Alfred, butler to Bruce Wayne.
Your job is to summarize text from Batman and relay it to the user.
Be polite and helpful, but a little snark is fine.

Batman: I am vengeance. I am the night. I am Batman!
AI: The dark knight wishes to inform you that he remains the batman.

Batman: The missing bags - WHERE ARE THEY???
AI: It is my responsibility to inform you that Batman requires information on the missing bags most urgently.

Batman: {{$input}}
AI:
";
```

```csharp
ISKFunction alfredFunction =
    kernel.CreateSemanticFunction(alfredTemplate,
        description: "Alfred, butler to Bruce Wayne. Summarizes responses politely.");
```

Now, instead of calling this function directly from the kernel like we did for batFunction, we’ll call the kernel with multiple functions in a sequence.

Semantic Kernel will pass our input string to the first function. The results of that will then be sent on to the second function which in turn will generate an output. This effectively forms an AI pipeline.

The code for this follows:

```csharp
KernelResult result = await kernel.RunAsync(message, batFunction, alfredFunction);
Console.WriteLine(result.GetValue<string>());
```

If I ask about the current date, I get the Batman function’s response interpreted by Alfred:

I apologize, sir, but it seems that in Gotham City, they have a rather unique approach to timekeeping. They prefer to prioritize their noble pursuits of fighting crime and brooding in the shadows over mundane matters such as dates.

Pretty cool! However, it didn’t actually answer our question.
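Stripped of the LLM calls, the pipeline that RunAsync built above is function composition: each function’s output becomes the next function’s input. Here is a minimal sketch in plain C#, where the batman and alfred delegates are hypothetical string transforms standing in for the real semantic functions.

```csharp
using System;
using System.Linq;

// Hypothetical stand-ins for batFunction and alfredFunction. In Semantic Kernel each
// would be an LLM call; here they are plain string transforms so the data flow is visible.
Func<string, string> batman = text => $"[Batman] {text}";
Func<string, string> alfred = text => $"[Alfred summarizes] {text}";

// Feed the input to the first function, then each result to the next function.
static string RunPipeline(string userInput, params Func<string, string>[] steps) =>
    steps.Aggregate(userInput, (current, step) => step(current));

string output = RunPipeline("What's the current date?", batman, alfred);
Console.WriteLine(output); // [Alfred summarizes] [Batman] What's the current date?
```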

The gap in these answers about the current date can be closed with native functions: C# code that supplies information the model doesn’t have, such as the current date.

Native Functions and Plugins

When large language models are trained, they learn statistical relationships between tokens (parts of words) across large bodies of text. This process takes a very long time, and the resulting model has no built-in way to pick up new information afterward. To solve this, we use retrieval-augmented generation (RAG) to let our generative AI systems fetch the additional information they need to fulfill a request.

Let’s create a small plugin class that provides information on the current date whenever it is called.

```csharp
using System;
using Microsoft.SemanticKernel;
using System.ComponentModel;

public class DatePlugin
{
    [SKFunction, Description("Displays the current date.")]
    public static string GetCurrentDate()
        => DateTime.Today.ToLongDateString();

    [SKFunction, Description("Displays the current time.")]
    public static string GetTime()
        => DateTime.Now.ToShortTimeString();
}
```

This class contains a pair of static methods that are declared as Semantic Kernel functions via the SKFunction attribute, each with a text description that will be handy later.

Both methods return formatted strings, but they serve different purposes: GetCurrentDate produces the long date (for example, Sunday, November 26, 2023 on an en-US system) and GetTime returns the short time format, like 11:34 PM.

Note: most native functions you create will take in a string as a parameter. This example is one of the rare cases that doesn’t need to.
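One thing to be aware of: ToLongDateString and ToShortTimeString format using the current culture and read the machine’s clock, so the exact strings vary between machines. If you want plugin logic you can unit test, one option is to pass the moment in and spell out the formats. The FormatLongDate and FormatShortTime helpers below are hypothetical, not part of Semantic Kernel.

```csharp
using System;
using System.Globalization;

// Hypothetical helpers: the caller supplies the moment, and the formats are explicit,
// so the output no longer depends on the machine's clock or culture settings.
static string FormatLongDate(DateTime moment) =>
    moment.ToString("dddd, MMMM d, yyyy", CultureInfo.InvariantCulture);

static string FormatShortTime(DateTime moment) =>
    moment.ToString("h:mm tt", CultureInfo.InvariantCulture);

var sample = new DateTime(2023, 11, 26, 23, 34, 0);
string date = FormatLongDate(sample);
string time = FormatShortTime(sample);
Console.WriteLine(date); // Sunday, November 26, 2023
Console.WriteLine(time); // 11:34 PM
```

Inside DatePlugin, GetCurrentDate could then return FormatLongDate(DateTime.Now), keeping the SKFunction method itself trivial.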

Let’s tell Semantic Kernel about these functions as well as our semantic functions by importing them:

```csharp
kernel.ImportFunctions(new DatePlugin(), pluginName: "DatePlugin");
kernel.ImportFunctions(batFunction, pluginName: "Batman");
kernel.ImportFunctions(alfredFunction, pluginName: "Alfred");
```

Here the pluginName doesn’t matter, but it is usually helpful to organize your functions by a plugin name in larger applications, so I’m including it here.

This registers the functions with the kernel so that planners can invoke them, but nothing calls them yet.

To use the new functions we’ll need to set up a planner and use it to generate and execute a plan.

Planners

Semantic Kernel offers planners as an optional component that plans out a sequence of calls to your semantic functions (those that are just prompt templates) and native functions (.NET code defined in plugin objects) in order to achieve a stated goal.

Planners allow your AI agents to fulfil more complex requests by selecting which steps (or a sequence of steps) to execute and then executing those steps.

We’ll talk about how these planners work internally (because it made me shake my head when I discovered it), but for now we’ll look at how we set up a simple one.

Let’s start with the simplest planner: the ActionPlanner. This class takes the stated goal and selects the single most applicable function to execute.

```csharp
// Set up the planner
ActionPlanner planner = new(kernel);

// Generate a plan
Plan plan = await planner.CreatePlanAsync(goal: "What is the current date?");

// Execute the plan and get the result
FunctionResult result = await plan.InvokeAsync(kernel);
Console.WriteLine(result.GetValue<string>());
```

When the ActionPlanner executes, it determines that calling our GetCurrentDate is the most applicable function to invoke and so it displays:

The current date is Saturday, November 25, 2023

On the other hand, if you have it create a plan to serve the goal “Tell me about Bruce Wayne”, it will route that request to the alfredFunction and generate the response:

Ah, the esteemed Mr. Wayne has graced us with his presence. How may I be of service to you today, sir?

While the response isn’t perfect, you can clearly see that the ActionPlanner routes the request to the function most likely to generate a good response.
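The choice itself is made by an LLM (more on that in the next section), but the execution side of an action plan is simple: look up one function and call it once. This sketch assumes a hypothetical JSON reply with function and input properties; the ActionPlanner’s real prompt and response format may differ, and the registry dictionary is a stand-in for the functions imported into the kernel.

```csharp
using System;
using System.Collections.Generic;
using System.Text.Json;

// Hypothetical stand-in for the functions imported into the kernel, keyed by "Plugin.Function".
// The Alfred entry would really be an LLM call.
var registry = new Dictionary<string, Func<string, string>>(StringComparer.OrdinalIgnoreCase)
{
    ["DatePlugin.GetCurrentDate"] = _ => DateTime.Today.ToLongDateString(),
    ["Alfred.Respond"] = text => $"(Alfred's reply to: {text})",
};

// Hypothetical model reply naming the single function to call and its input.
string modelReply = "{\"function\": \"Alfred.Respond\", \"input\": \"Tell me about Bruce Wayne\"}";

using JsonDocument reply = JsonDocument.Parse(modelReply);
string functionName = reply.RootElement.GetProperty("function").GetString() ?? "";
string functionInput = reply.RootElement.GetProperty("input").GetString() ?? "";

// An action plan has exactly one step: find the chosen function and call it once.
if (!registry.TryGetValue(functionName, out var chosen))
    throw new InvalidOperationException($"The model picked an unknown function: {functionName}");

string result = chosen(functionInput);
Console.WriteLine(result); // (Alfred's reply to: Tell me about Bruce Wayne)
```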

So how does this work?

How Planners Work

Planners in Semantic Kernel at first glance seem a little magical. They’re able to take a string with a question or some stated intent and match it to a supported function to generate a plan.

This sounds simple, but it implies some sort of reasoning about, or understanding of, whatever is written in the string.

It turns out that planners in Semantic Kernel make calls to LLMs to generate their plans. The details are verbose and vary by planner, but they tend to follow these steps:

- The planner starts by building a string that orients the LLM and tells it to form a plan that achieves the goal the user has stated.

- The planner then iterates over each function registered in the kernel. For each function, it creates a string from the function’s name, the description you provided, any parameters, and the descriptions of those parameters. Each of these strings is added to the growing request.

- Finally, the planner asks the LLM to select which function or functions should be invoked and how they relate to each other (more on this later). This part also stresses the exact format the LLM must use in its response.

- The request is then sent to the LLM, which returns a response that hopefully describes a valid plan for reaching the goal.

Put simply, planners take the user’s request, add in context about available functions, and then ask an LLM to tell it what the next step is.
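To make the middle step concrete, here is a rough sketch of how a list of registered functions could be flattened into planner prompt text. The wording, the function list, and the Batman.Respond name are hypothetical; the real planner prompts are much longer and vary by planner.

```csharp
using System;
using System.Collections.Generic;
using System.Text;

// Hypothetical function metadata: plugin, name, description, and "name: description" parameter lines.
var functions = new List<(string Plugin, string Name, string Description, string[] Parameters)>
{
    ("DatePlugin", "GetCurrentDate", "Displays the current date.", Array.Empty<string>()),
    ("Batman", "Respond", "Responds to queries in the voice of Batman", new[] { "input: The user's message" }),
};

// Flatten the goal and the function list into one prompt, roughly as a planner does.
static string BuildPlannerPrompt(string goal,
    IEnumerable<(string Plugin, string Name, string Description, string[] Parameters)> available)
{
    var sb = new StringBuilder();
    sb.AppendLine("Create a plan to achieve the goal using only these functions:");
    foreach (var f in available)
    {
        sb.AppendLine($"{f.Plugin}.{f.Name}: {f.Description}");
        foreach (string parameter in f.Parameters)
            sb.AppendLine($"  parameter {parameter}");
    }
    sb.AppendLine($"Goal: {goal}");
    sb.AppendLine("Reply only with JSON naming the function(s) to call and their inputs.");
    return sb.ToString();
}

string plannerPrompt = BuildPlannerPrompt("What is the current date?", functions);
Console.WriteLine(plannerPrompt);
```

Every registered function adds lines to this prompt, which is one reason planner calls get expensive as an application grows.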

Sometimes the LLM sends back a response that isn’t syntactically valid. In these cases most planners send back corrections and try again. This can take several calls to the LLM, each carrying a large body of text, before a valid plan is found or the planner gives up after too many attempts.

As you might imagine, these requests can take a while and use more tokens than a typical prompt. However, they can be remarkably effective when things work.
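The correction loop can be sketched the same way. Here a scripted fakeLlm delegate (hypothetical) first returns prose and then valid JSON, and the RequestPlan helper (also hypothetical) appends a correction and retries until it gets a parseable reply or runs out of attempts. Note that each retry resends the whole, ever-growing prompt.

```csharp
using System;
using System.Collections.Generic;
using System.Text.Json;

// Hypothetical scripted model: the first reply is prose, the second is valid JSON.
var scriptedReplies = new Queue<string>(new[]
{
    "Sure! I think you should call DatePlugin.GetCurrentDate.",
    "{\"function\": \"DatePlugin.GetCurrentDate\"}",
});
Func<string, string> fakeLlm = _ => scriptedReplies.Dequeue();

// Hypothetical retry loop: ask for a plan; if the reply doesn't parse, append a
// correction to the prompt and ask again, giving up after maxAttempts.
static string? RequestPlan(Func<string, string> llm, string prompt, int maxAttempts)
{
    for (int attempt = 1; attempt <= maxAttempts; attempt++)
    {
        string reply = llm(prompt);
        try
        {
            using JsonDocument doc = JsonDocument.Parse(reply);
            if (doc.RootElement.ValueKind == JsonValueKind.Object &&
                doc.RootElement.TryGetProperty("function", out JsonElement fn) &&
                fn.ValueKind == JsonValueKind.String)
            {
                return fn.GetString();
            }
        }
        catch (JsonException)
        {
            // Not valid JSON; handled by the correction below.
        }

        prompt += $"\nYour reply was not JSON with a \"function\" property: {reply}\nPlease try again.";
    }

    return null; // Too many attempts: give up without a plan.
}

string? chosen = RequestPlan(fakeLlm, "Goal: What is the current date?", maxAttempts: 3);
Console.WriteLine(chosen ?? "No valid plan found");
```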

Now that we’ve covered how ActionPlanner and planners work overall, let’s look at a more capable planner: the Sequential Planner.

Sequential Planner

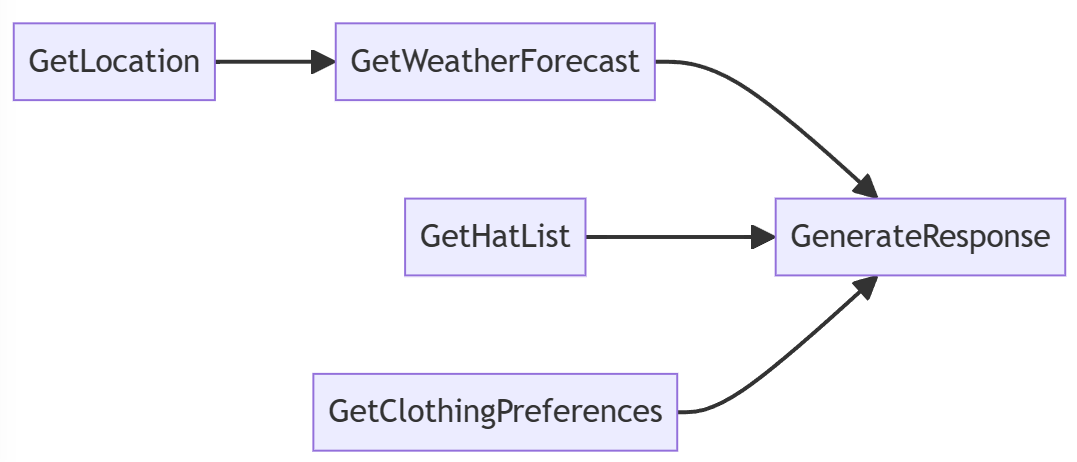

While an ActionPlanner will only pick a single action to execute, the SequentialPlanner looks to generate a sequence of function calls to satisfy the stated goals. This allows the SequentialPlanner to fulfil more complex requests than an ActionPlanner can.

For example, I’m working on a more complex project at the moment that knows how to respond to requests like “Which hat should I wear tomorrow morning?”.

No LLM has enough information on my weather forecast or my wardrobe to be able to accurately answer this question. Additionally, the ActionPlanner would only be able to handle this question by selecting a single function to invoke.

The SequentialPlanner would be capable of handling this problem, however. It might solve this problem by making a series of calls to native functions and semantic functions as shown here:

- Determine my current location

- Get the weather forecast for my location

- Get a list of hats that I have

- Load data on my general clothing preferences

- Generate a response based on my wardrobe, preferences, and weather forecasts

Let’s see an example of the SequentialPlanner by having Alfred describe the current time:

```csharp
SequentialPlanner planner = new(kernel);
Plan plan = await planner.CreatePlanAsync("Have Alfred tell me the current time");

FunctionResult result = await plan.InvokeAsync(kernel);
Console.WriteLine(result.GetValue<string>());
```

The response I get back in this case is:

Apologies for the interruption, sir, but it appears that the current time is 11:42 PM.

I should note that this actually is the correct local time as I write this (I’m a night owl; this is normal).

In this case the SequentialPlanner accomplished this by connecting the GetTime and Alfred functions together to generate a cohesive response.

However, more often than not I find the SequentialPlanner disappointing. Sometimes it fails to find a valid plan when one should exist and an SKException is thrown. Other times it generates what it thinks is a valid plan, but odd bits of JSON that don’t make much sense show up in the output.

These issues generally stem from the fact that the SequentialPlanner tries to do all of its planning up front in a single call to the LLM. The JSON this planner has the LLM generate is much more complex than what the ActionPlanner needed and as a result there are sometimes issues with the plans generated by the SequentialPlanner.
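To see why a single up-front plan is fragile, consider this sketch of executing a sequence that was produced all at once. The registry, the hard-coded time, and the ExecuteSequentialPlan helper are hypothetical, and it throws InvalidOperationException rather than SKException. Because the sketch checks the whole plan before running it, one mistyped function name from the model fails everything.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical registry; the lambdas stand in for the real native and semantic functions.
var registry = new Dictionary<string, Func<string, string>>
{
    ["DatePlugin.GetTime"] = _ => "11:42 PM",
    ["Alfred.Respond"] = text => $"Alfred: the current time is {text}.",
};

// Hypothetical plan: the complete sequence returned by one up-front model call.
string[] plan = { "DatePlugin.GetTime", "Alfred.Respond" };

static string ExecuteSequentialPlan(string[] steps, Dictionary<string, Func<string, string>> functions, string goal)
{
    // Validate the whole plan before running anything: one bad step sinks all of it.
    string[] unknown = steps.Where(name => !functions.ContainsKey(name)).ToArray();
    if (unknown.Length > 0)
        throw new InvalidOperationException($"Invalid plan; unknown functions: {string.Join(", ", unknown)}");

    // Run the steps in order, passing each output to the next step.
    string current = goal;
    foreach (string step in steps)
        current = functions[step](current);
    return current;
}

string answer = ExecuteSequentialPlan(plan, registry, "Have Alfred tell me the current time");
Console.WriteLine(answer); // Alfred: the current time is 11:42 PM.
```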

This is also a good time to mention that Semantic Kernel is still in beta as of the time of this writing and is rapidly changing and evolving.

Let’s look at a more advanced planner that I find generally performs better: the StepwisePlanner.

Stepwise Planner

While the SequentialPlanner tries to generate a perfect sequence of steps up front to fulfil the user’s request, the StepwisePlanner instead determines which function it should call next, executes that function, and then makes another call to decide whether the response is ready to deliver or another function needs to be called.

To put things in project management terms, the SequentialPlanner is a waterfall approach: it attempts to fully understand the problem and its solution, build the perfect plan, and then execute that plan. The StepwisePlanner, on the other hand, is closer to agile development, making one small decision and then finding the next decision to make after that.

The code for working with a StepwisePlanner is almost identical to working with a SequentialPlanner except for a minor difference:

```csharp
StepwisePlanner planner = new(kernel);
string goal = "Tell Batman the current time and have Alfred summarize his response";
Plan plan = planner.CreatePlan(goal);

FunctionResult result = await plan.InvokeAsync(kernel);
Console.WriteLine(result.GetValue<string>());
```

First, we use the StepwisePlanner instead of SequentialPlanner as you’d expect. Next, note that the CreatePlan call is synchronous rather than asynchronous.

This sounds nice, but I’d rather have all of the Planner classes conform to a common way of interacting with them so it would be easier to swap one planner out for another. The current way that you work with a planner and get details on its results varies from planner to planner. Function invocations that work with one planner don’t always work with another one. This is something I hope Semantic Kernel fixes before they officially release.
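Until that happens, one way to paper over the sync/async difference in your own code is to wrap every planner’s plan-creation call in the same async delegate shape. The FromSync helper below is hypothetical, and the strings stand in for real Plan objects.

```csharp
using System;
using System.Threading.Tasks;

// Hypothetical adapter: present a synchronous plan-creation call with the same
// async delegate shape as an asynchronous one.
static Func<string, Task<TPlan>> FromSync<TPlan>(Func<string, TPlan> createPlan) =>
    goal => Task.FromResult(createPlan(goal));

// Stand-ins for a synchronous CreatePlan and an asynchronous CreatePlanAsync.
Func<string, Task<string>> stepwise = FromSync(goal => $"stepwise plan for: {goal}");
Func<string, Task<string>> sequential = async goal =>
{
    await Task.Yield();
    return $"sequential plan for: {goal}";
};

// Calling code no longer cares which kind of planner it has.
foreach (Func<string, Task<string>> createPlan in new[] { stepwise, sequential })
    Console.WriteLine(await createPlan("What is the current date?"));
```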

With the minor syntax difference in this example out of the way, here’s how the StepwisePlanner responded to the request to have Alfred summarize Batman’s response to the current time:

According to Batman, the current time is the perfect moment for him to protect the city and strike fear into the hearts of criminals. He suggests that I take some rest, as the pursuit of justice can be postponed until morning.

Well, that’s interesting. It doesn’t mention the current time there, but that could have been omitted by the summarization.

Thankfully, the result object includes a Metadata dictionary, and the StepwisePlanner stores a stepsTaken entry in it containing JSON that describes each step taken and the planner’s reasoning for including it.

The following code deserializes this JSON into an array of objects and then writes out the thought and observation recorded for each step:

```csharp
using Newtonsoft.Json;

// stepsTaken holds the StepwisePlanner's steps as a JSON string
string json = result.Metadata["stepsTaken"]?.ToString() ?? "[]";
StepSummary[] steps = JsonConvert.DeserializeObject<StepSummary[]>(json) ?? Array.Empty<StepSummary>();

foreach (StepSummary step in steps)
{
    Console.WriteLine($"Thought: {step.Thought}");
    Console.WriteLine($"Observation: {step.Observation}");
    Console.WriteLine();
}

// Shape of each step; in a top-level program, type declarations must follow the statements
public class StepSummary
{
    public string? Thought { get; set; }
    public string? Action { get; set; }
    public string? Observation { get; set; }
}
```

The result I see is:

Thought: To tell Batman the current time, I can use the DatePlugin.GetTime function. Then, I can use the Batman function to relay the time to Batman and get his response. Finally, I can use the Alfred function to summarize Batman’s response, including the time in his response.

Observation: 12:01 AM

Thought: Now that I have the current time, I can use the Batman function to relay the time to Batman and get his response.

Observation: I think it’s the perfect time for me to patrol the streets and strike fear into the hearts of criminals. But for you, it might be a good time to catch some Z’s. Crime-fighting can wait until morning, citizen.

Thought: Now that I have Batman’s response, I can use the Alfred function to summarize his response, including the time in his response.

Observation: Master Wayne suggests that it is an opportune moment for him to venture out into the city and instill terror in the hearts of wrongdoers. However, for your well-being, it may be advisable to retire for the night and get some rest. The pursuit of justice can be postponed until the morning, my good citizen.

Looking at the chain of steps and the reasoning and observation at each step, it looks like the following sequence of events occurred:

- The StepwisePlanner requested the first step from the LLM and was told to call the GetTime function.

- Semantic Kernel called the GetTime function and got back 12:01 AM (the correct value).

- The StepwisePlanner called the LLM with this data and the initial objective and sent a message on to the Batman semantic function.

- The Batman semantic function used the LLM to generate a response as Batman. Batman responded in typical bat-fashion, but did instruct the caller to go to sleep since it’s late.

- The StepwisePlanner called the LLM again, which decided to have the Alfred function summarize Batman’s response.

- The Alfred function used the LLM to generate a summarized response, which the LLM decided was fine to show to the user.

In other words, the sequence of steps executed perfectly, but the exact time wasn’t included in the final response due to several layers of summarization. If we wanted the time to be present in the final response, a more explicit prompt might get that result.
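If you’d rather parse stepsTaken with System.Text.Json (the alternative noted in the using statements at the start), keep in mind that it matches property names case-sensitively by default, whereas Newtonsoft.Json does not; with a StepSummary class you would set JsonSerializerOptions.PropertyNameCaseInsensitive to true. The sketch below instead walks the JSON with JsonDocument. The sample JSON is made up for illustration, and the real stepsTaken value may use different casing and extra fields.

```csharp
using System;
using System.Collections.Generic;
using System.Text.Json;

// Made-up sample in the shape StepSummary expects; the real stepsTaken JSON may differ.
string json = @"[
  { ""thought"": ""I can use DatePlugin.GetTime."", ""action"": ""DatePlugin.GetTime"", ""observation"": ""12:01 AM"" },
  { ""thought"": ""Now relay the time to Batman."", ""action"": ""Batman"", ""observation"": ""Crime-fighting can wait."" }
]";

// Case-insensitive property lookup, mirroring Newtonsoft.Json's default matching.
static string? GetText(JsonElement element, string propertyName)
{
    foreach (JsonProperty property in element.EnumerateObject())
    {
        if (string.Equals(property.Name, propertyName, StringComparison.OrdinalIgnoreCase))
            return property.Value.ValueKind == JsonValueKind.String
                ? property.Value.GetString()
                : property.Value.GetRawText();
    }
    return null;
}

var lines = new List<string>();
using (JsonDocument doc = JsonDocument.Parse(json))
{
    foreach (JsonElement step in doc.RootElement.EnumerateArray())
    {
        lines.Add($"Thought: {GetText(step, "Thought")}");
        lines.Add($"Observation: {GetText(step, "Observation")}");
    }
}

foreach (string line in lines)
    Console.WriteLine(line);
```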

Conclusion

In this article I gave you a basic overview of using Semantic Kernel in C# to chain together different capabilities. While our bat-example was somewhat silly, a real example might include native plugins that call out to external APIs, query databases, or even take actions on behalf of the application.

Semantic Kernel is still growing and will change over time, but there’s a lot to be excited about. I know a few new planners will be delivered soon and I’m eager to try them out.

This article doesn’t even touch on the topic of semantic memory or the ability for the kernel to search text embeddings or store knowledge as text embeddings in a memory store. This is a tremendous capability that can significantly improve the performance of generative AI systems.

Additionally, I didn’t have room to cover the emergence of assistants, which allow you to structure larger generative AI systems into modular assistants that each have a limited number of functions and are easier to maintain and use with planners as a result.

I’m also eagerly waiting to see how PromptFlow will support C# Semantic Kernel application testing, because prompt engineering is a very real concern you face with generative AI systems and having a robust way to measure your systems is important.

Despite these shortcomings, Semantic Kernel is now my preferred way of building generative AI solutions and I hope you share the eagerness I have to see the technology evolve.

If you’d like to learn more about Semantic Kernel, you can look at my larger BatComputer project, which aims to offer a cross-platform Semantic Kernel experience in a rich console app aimed at helping me with some of my daily tasks and teaching Semantic Kernel. I’ll also be speaking more on Semantic Kernel in the future, including a conference talk at Global AI Conference and a 4-hour workshop at CodeMash 2024.