Managing your OpenAI on Azure Costs

Understand and predict your OpenAI on Azure Expenses

Let’s talk about pricing for OpenAI on Azure. In this article we’ll explore the token-based pricing OpenAI offers, the price differentials of the various models, what embeddings are and how their prices differ, and some general tips for making the most of your OpenAI spend.

At the time of this writing, OpenAI on Azure is still in closed preview and you likely haven’t gotten to play with it yet. Therefore this article is more focused on the pricing structure so you can understand the choices you’ll need to make.

Before we get started, I should issue my standard disclaimer on pricing: this article is based on my current understanding of Azure pricing, particularly in the North American regions. It is possible the information I provide is incorrect, not relevant to your region, or may change as OpenAI gets closer to leaving preview. While I make every effort to provide accurate information, I provide no guarantee or assume no liability for any costs you incur. I strongly encourage you to read Microsoft’s official pricing information before making pricing decisions.

Azure OpenAI

Let’s start by talking about what OpenAI on Azure is and why you’d want to use it on Azure.

First, OpenAI is a research lab that has produced a number of artificial intelligence models including GPT-3, Dall-E, and ChatGPT, among others.

Using OpenAI’s models, developers can generate text and image predictions from a string prompt, such as “Write a poem about an army of squirrels” or “How do I declare a variable in C#?” (admittedly, two very different prompts).

If you’ve seen ChatGPT in action, you’ve seen an OpenAI model in action and have some idea of what I’m talking about because ChatGPT is actually a variant of the GPT-3.5 model.

Next, let’s address the elephant in the room: Why OpenAI on Azure when OpenAI already exists as a separate service?

There are a few reasons for this. First of all, OpenAI on Azure allows you to run your models in the same region as your other production code. This allows you to improve performance and eliminate the need for your data to leave that region of Azure if security is paramount to you.

Secondly, Azure already has a powerful amount of infrastructure and services behind it and using those same services to secure, control, and manage your expenses for OpenAI is going to be popular for many organizations.

Third, in the long-term it likely will become be more convenient for developers familiar with working with Azure to work with the OpenAI resources on Azure than figure out what they need to do to integrate with OpenAI outside of Azure.

And finally, having these models in Azure allows us to take advantage of Microsoft’s scalability and resiliency with their existing data centers throughout the world.

Now that we’ve covered what OpenAI is and why we’d want it on Azure, let’s talk about how pricing is structured and what it can do.

Pricing for OpenAI on Azure

Like with many other offerings on Azure, including AI offerings like Azure Cognitive Services, OpenAI services are billed at a per-usage model, meaning you are billed only by the API calls you make.

These API calls fall into one of several territories:

- Language Models (including Text, Source Code, and ChatGPT)

- Fine-Tuning Models

- Embedding Models

We’ll talk about each one of these in turn: what it is, when you would use it, its pricing model, and things to be aware of.

Language Models

Language models are transformer-based models that were trained over large bodies of data and then made available to the public for consumption.

These models take awhile to train and validate and so new models are released periodically after they are vetted.

Because OpenAI is constantly looking for ways to expand its models and improve their performance, this means that the older models tend to perform worse than the newer ones. Additionally, the size of these models has grown significantly over time, meaning the newer models are more expensive to host and may also take additional time to generate a response.

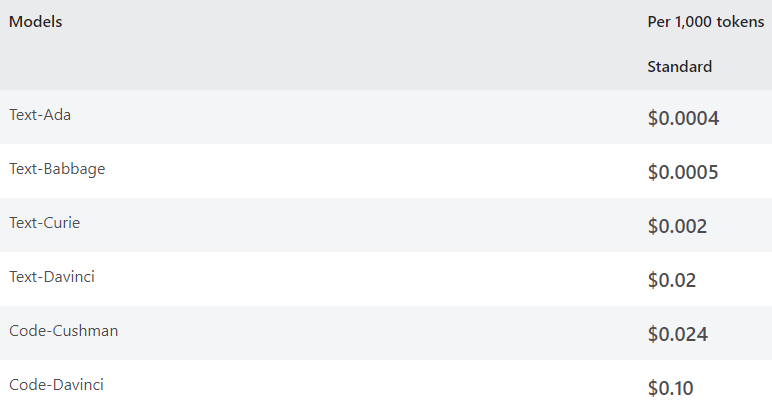

Because of these factors, the pricing for models depends on the model you want, with the older models such as Ada and Babbage being extremely affordable and the more recent models like Davinci costing more but being significantly more capable and human-like in their responses.

Text Models & Tokens

To be specific, these text models are priced based on tokens. Tokens are individual words and symbols (such as punctuation marks) in the prompts sent to the model and the responses generated from the model.

For example, the string int i = 42; could be said to have the following tokens: `[“int”, “i”, “=”, “42”, “;"].

You may think that punctuation counting as tokens is unfair, but it’s part of the technical details of how these models work and how they know to generate roughly grammatically correct sentences and even source code.

Typically when you call out to OpenAI services, you specify the prompt as well as the maximum tokens to generate. The model will then generate up to that maximum count of tokens and return it back to you. You are then billed based on your consumption of the model.

Check Microsoft’s official pricing information, but at the time of writing this, calling out to OpenAI services was very affordable at the per-token level.

My advice to you is to prototype your applications using cheaper models like Text-Ada and periodically validate that they perform well with the other models. As your new offerings get closer to release, your organization should perform a cost / benefit analysis to determine whether cost or quality is the driving factor and choose the appropriate model based on these factors.

I also recommend you keep your token limit reasonable to prevent replies from your model from being needlessly long and quickly racking up costs.

Finally, if you believe you will have frequent duplicate queries from users, you may want to invest in some form of caching where if a prompt resembles an existing prompt, you can reply back with the previously-generated reply instead of generating the response tokens again. Of course, because human language is so flexible it makes it significantly less likely that two people will use the exact same string, so caching becomes less effective the more varied your inputs are.

I’m currently thinking through a hybrid caching layer that would take advantage of capabilities in Azure Cognitive Services but use OpenAI on Azure to generate responses. Stay tuned for some future content on that interesting experiment.

Code Models

Code models are very similar to text models, except they’re built for source code.

Because these models are newer and far more specialized than generic text models, their price is significantly more expensive per token.

Currently there are two dedicated code models available: Cushman and Davinci that respectively cost 2.4 and 10 cents USD per 1,000 tokens.

Since code is very dense on symbols and symbols are tokens, this cost can add up fairly quickly so I would recommend starting on Cushman and getting a sense of the quantity of tokens you’re generating your first few calls and then scaling things up as needed.

This is another scenario where you need to evaluate the quality of the output you’re getting and determining what pricing makes sense given your quality needs and volume demands.

ChatGPT

Closing out our text models is ChatGPT, which just released on OpenAI a few days ago at the time of this writing.

According to Microsoft’s press release ChatGPT is priced at $ 0.002 USD per 1,000 tokens.

This makes the ChatGPT service as affordable as the Curie text model and more affordable per token than any current code model available. However, ChatGPT can be fairly verbose and so you may find your token count accumulating faster than you expect, so do some simple validation tests before scaling up your usage.

Image Models

Because image models generate output images instead of a series of tokens, their pricing is much simpler: images are priced at the per-image level, regardless of what prompt you gave the model.

As of the time of this writing, Dall-E images cost $2 USD per 100 images generated or 2 cents per image. Of course, check the pricing yourself before working on this assumption.

For comparison, I currently use MidJourney to generate images (though plan on changing to Dall-E soon) and I would need to generate 500 images on Dall-E in a month to reach the minimum pricing plan for MidJourney. Of course, these are two different services with different strengths, weaknesses, and ways of using them, but hopefully that comparison is helpful to you.

Fine-Tuned Models

If you choose to fine tune your models, there are a few additional charges that will apply to you.

The first decision you make is likely the most critical: you need to determine which base model your fine-tuned model will be built on top of. This impacts the overall quality of your trained model and also significantly impacts your pricing during training, hosting, and prediction.

Let’s talk about each one of those 3 costs in more detail.

Training a Fine-Tuned Model

Your first expense in this process will be paying for the time spent actively training your model. This is the process of fine-tuning the model to adapt to customized data you provide.

The amount of time taken will depend on the training data you send to Azure. The more data you provide the more your model can tailor itself to your cases, but the longer training will take as well.

At present the training time is billed at a per-compute hour basis for training and prices range from $20 to $84 per hour, depending on the base model you select. Similar to token generation above, the simpler models are cheaper per hour compared to the more advanced base models.

Because training can be a major commitment, I recommend you determine which base model you want before experimenting with fine-tuning a model, since every time you evaluate a different model you will need to train a fine-tuned model based on that base model.

Deploying a Fine-Tuned Model

Once your model is trained, you will need to deploy it. At this point you pay for active hosting at an hourly basis, just like you would if you deployed a trained model from Azure Machine Learning. Like before, the hosting costs will be dependent on the base model you selected.

At present, hosting is a per-hour expense that ranges from 5 cents an hour to 3 dollars an hour, depending on your base model.

Generating Predictions from a Fine-Tuned Model

Finally, after a model is deployed, standard usage costs still apply in the form of the per-thousand token costs.

At present, these costs are identical to the costs for the base models. This means that if you fine-tune a model, your added costs for that customization from the training and deployment process, but you still pay usage costs for generating tokens from these models.

Due to this fee structure, I would only consider fine-tuning a model if I had a critical case that the base models were insufficient for. That said, fine-tuning a model gives you additional control and the ability to deploy something unique to your organization and its users, and there can be a lot of value in that.

Embedding Models

Embedding models are more complex to describe. Microsoft Learn has a full article going into more detail on the topic, but embedding models essentially gives you access to the similarities between different pieces of text.

The associations that embeddings can provide will allow you to find related pieces of text. This can be used to power recommender-based systems, suggest things to users, and find associations between concepts.

Like text-based models, embeddings have a standard per 1,000 token pricing structure that varies based on the base model you chose.

Conclusion

The underlying theme with OpenAI on Azure is that you must make a decision on where you fall between optimizing for lowered costs and optimizing for higher quality of generated content.

While some services are paid at the per-call level or per-hour, most things with OpenAI are billed at the token-level. This means that if you need to send OpenAI a lot of text or you need it to generate verbose answers, you will be paying significantly more over time than a system that only needs simple questions and answers.

I’d encourage you to do some targeted experimentation and internal testing to see what level of quality your organization finds acceptable and how justifiable the price for that level of service is to your business.

Once again, OpenAI on Azure is currently in preview at the time of this writing, and I’ve only just gotten access to it myself as an AI MVP. It’s quite possible the information contained here is incomplete or may change by the region or as the service gets closer to public preview and release. You are strongly encouraged to look into pricing yourself and actively monitor your spend to prevent unexpected surprises in your Azure bill.

You can use pricing calculator to forecast potential cost scenarios. Azure Cost Analysis will help you see where your money is going and make adjustments over time. Azure Cost Alerts will help you detect cost overruns so you can make adjustments, and Azure Budgets can automatically make adjustments based on your observed expenses.

All of these services together allow you to create a scalable and secure AI solution based on OpenAI’s cutting-edge models with the full power of Azure’s cost management solutions.

This article was part five of a five-part series exploring AI / ML pricing on Azure as part of the larger community effort behind Azure Spring Clean 2023. Previous entries talked about Azure Machine Learning, Azure Cognitive Services, Azure Bot Service, and Azure Cognitive Search.