What Does ChatGPT Mean for New Software Developers?

Answering common questions from those entering the software development industry

I spend my days teaching new developers how to code. My evenings and weekends, however, belong to my study of data science, artificial intelligence, and conversational AI.

Recently I’ve watched these two worlds crash together with the sudden arrival of OpenAI’s ChatGPT chatbot and the incredible things it can (and can’t) do.

In this post we’re going to explore what ChatGPT is and what it means for current and future software engineers.

What is ChatGPT?

ChatGPT is a new conversational AI chatbot developed by OpenAI that reached public visibility in late November of 2022. Unlike traditional chatbots, ChatGPT is able to take advantage of advances in machine learning using something called transformers, which give it a greater contextual awareness of the documents it has been trained on. This allows it to generate responses that mimic those you might see from a human.

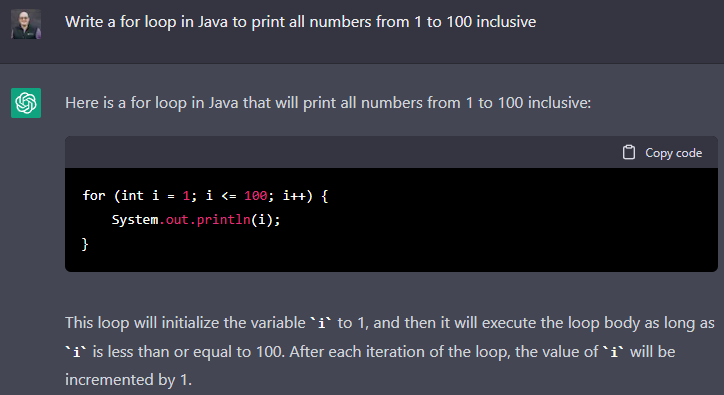

What makes ChatGPT different from anything that has come before is its breadth of responses and its ability to generate new responses that seem to have a high degree of intelligence behind them. This means that I can ask ChatGPT to tell me stories, outline an article, or even generate code and it will give me something that looks convincing and may even be usable.

However, ChatGPT is not human-like intelligence, though it’s the closest I’ve ever seen a computer come to emulating it.

What ChatGPT Can’t Do

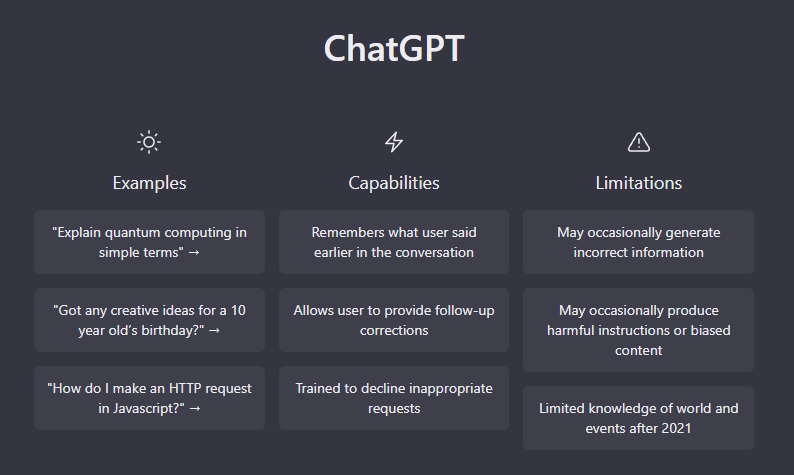

ChatGPT is open about its limitations and displays it on its landing page:

ChatGPT’s intelligence is in its contextual awareness in conversation and the breadth of information it’s been trained on. But this is historical information.

I’ve certainly seen ChatGPT make mistakes. It’s given me factually incorrect information about software libraries, made up libraries that don’t exist, and referred me to libraries that were retired long ago.
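One cheap sanity check for a suggested library is to confirm that it can actually be found in your environment before you build on it. The sketch below uses `importlib.util.find_spec` from Python's standard library; the helper name `is_installed` and the module name `totally_made_up_lib` are hypothetical and chosen only for illustration. It tells you whether a top-level module is installed locally, not whether the library is maintained or behaves the way a chatbot described it.

```python
import importlib.util


def is_installed(module_name: str) -> bool:
    """Return True if a top-level module can be located in this environment."""
    # find_spec returns None when no top-level module with this name is found.
    return importlib.util.find_spec(module_name) is not None


if __name__ == "__main__":
    # "totally_made_up_lib" is a hypothetical name standing in for an invented library.
    for name in ["json", "totally_made_up_lib"]:
        status = "found" if is_installed(name) else "NOT found"
        print(f"{name}: {status}")
```

A check like this is only a first filter. You would still want to read the library's own documentation and release history before depending on it.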

ChatGPT is not human and lacks the ability to:

- Understand that what it generates may be incorrect (or communicate its lack of confidence in its answers)

- Understand the emotional / practical needs of humans

- Understand the basic characteristics of our world

As a humorous example, I gave a conference talk this year entitled: “Automating my Dog with Azure Cognitive Services.” It was a fun exploration of using artificial intelligence to recognize objects in images, generate speech responses from text, and interpret human text.

When I asked ChatGPT how it would structure that talk, it gave me a beautiful outline that was perfect … except it suggested that artificial intelligence could listen to my dog’s spoken words and respond appropriately. For all of its intelligence and impressiveness, it failed to grasp the basic fact that my dog cannot speak English.

It’s incredible that ChatGPT can generate content for you, but that comes with some limitations as well. First of all, ChatGPT is not truly synthesizing new things. Instead, it is arranging things it has encountered before in creative ways.

It may be arranging aspects of the English language, story structures, or answers it sees posted in locations it deems trustworthy, but it is arranging content and structure that it knows about already in new ways.

This means that if we stop creating new articles, stories, programs, and works of art, ChatGPT and systems like it will not be inventing anything new on their own.

Another severe limitation of transformer-based systems like ChatGPT is that it is very hard to understand how they arrived at the content they produce. This means that if ChatGPT generates a response, that response may be a word-for-word quote from someone else and you wouldn’t even know.

However, ChatGPT and systems like it can generate some very good starter code and give you answers that are often more helpful than they are not.

Will People Still Need Software Developers?

Since transformer-based systems are only five years old at this point, it raises the question: in five more years, will we need developers at all?

Let me tell you a little secret: since I’ve been a programmer, people have been talking about no-code and low-code approaches to software development that remove those pesky software developers from the equation.

Thus far, none of these systems have delivered on their promise. This is because software development is an incredibly complex field that requires you to think about many different aspects of development including:

- Meeting the business needs

- Quality / testability

- Speed of delivery (deadlines, project plans, etc.)

- Ease of maintenance

- Performance as the number of users or volume of data scales up

- Security

- Explainability

It turns out that in order to meet the varying and competing requirements of a software project, you need to be able to understand people, balance those competing concerns, and deliver a creative solution that meets those needs in both the short and long term.

Because ChatGPT doesn’t actually understand the content it generates, it has no idea if its responses will work or are relevant to all of your business needs.

Because the code ChatGPT generates is based on code it has encountered before, it cannot make any guarantees that the generated code:

- Is free of bugs

- Is well-documented

- Is easy to understand and maintain

- Is free of security vulnerabilities

- Meets all of the requirements set out by the business

- Is not an exact duplicate of code encountered on the internet

- Performs adequately at scale in a production environment
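To make the first and fifth points concrete, here is a hypothetical Python function of the kind a code generation tool might plausibly produce, next to a reviewed version. The function names and the empty-list scenario are illustrative assumptions, not actual ChatGPT output, and the choice to return `0.0` for an empty list stands in for a decision that only someone who knows the business requirements could make.

```python
def average_generated(values):
    """Plausible-looking helper: fine for typical input, but not for all input."""
    return sum(values) / len(values)  # raises ZeroDivisionError on an empty list


def average_reviewed(values):
    """Reviewed version: the empty-list behavior is an explicit decision."""
    if not values:
        return 0.0  # assumption: the business wants 0.0 when there is no data
    return sum(values) / len(values)


if __name__ == "__main__":
    print(average_reviewed([2, 4, 6]))
    print(average_reviewed([]))
    try:
        average_generated([])
    except ZeroDivisionError:
        print("generated version crashed on an empty list")
```

Both versions look reasonable at a glance. It takes a developer asking "what happens with no data?" to notice the difference.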

Perhaps most crucially, ChatGPT cannot modify code that it has previously authored or understand large solutions and modify them as needed.

This technology is only five years old, and it will evolve and become more transparent, easier to control and even more useful in the future, but it will never:

- think for itself,

- understand your business context,

- or understand the humans involved with and invested in your system.

Even if a successor to ChatGPT overcomes many of these limitations, you still need someone with a deep technical understanding to be able to tell it what to do and evaluate the quality of its output.

How Can ChatGPT Help Me on My Journey into Tech?

Instead of replacing developers, I see ChatGPT, GitHub Copilot, Amazon CodeWhisperer, and systems like these (as well as those that follow) as new tools in the developer’s tool belt.

These code generation tools are good at generating basic “boilerplate” code that can then be refined and modified by a professional developer to meet your needs.

However, as a new learner, I’d encourage you not to use ChatGPT excessively to generate code. This is because you are still building the mental muscles needed to generate for loops, methods, and variable declarations. You are still learning to think critically about things to build your own understanding.

At work, we move quickly, and I have seen a few students resist the requirement of daily homework practice, thinking that it was beneath them or not worth their time, only to discover that by skipping this work they had not developed the skills and understanding necessary for the more complex aspects of software development. I see ChatGPT offering this same temptation to new learners.

I’m not saying that ChatGPT is dangerous or to be completely avoided. I’m saying that I would use it as a refresher on concepts you’ve already learned and only use it to generate code as a last resort. In fact, I’d recommend seeking help initially from a human instead of ChatGPT, especially when it comes to code that doesn’t work.

Using ChatGPT before you’ve internalized the mechanics of programming is like asking a preschooler to learn to ride a bike by giving them a motorcycle instead of a tricycle or bike with training wheels.

ChatGPT can be incredible, but you are going to be far better off finding a qualified developer to mentor you early on in your journey instead of relying on its code. Not only can a human understand you and tailor their answers to your experience, needs, and emotional state, but frankly you won’t even know the right questions to ask an AI system until you have enough experience.

ChatGPT as a Tool for Software Engineering

As a seasoned developer, I know many things about programming and have the syntax and methods I use on a regular basis committed to memory. However, there are infrequent tasks for which I periodically need to look up a quick refresher.

In this regard, ChatGPT meets my needs quite nicely as an alternative to Google. ChatGPT generates simple code for me to use as a reference (not to copy / paste) so that I can refresh my memory of the syntax.

Additionally, ChatGPT is invaluable when using a new library or language. I might know how to do a for loop in one programming language, but forget the syntax in another language. ChatGPT helps me transfer my existing knowledge more easily between languages.
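As a small illustration of that kind of transfer, a developer used to C-style counting loops might first write the index-based loop below in Python, then learn the more idiomatic `enumerate` form. This is only a sketch, and the `languages` list is made up for the example.

```python
languages = ["C#", "Python", "TypeScript"]

# Index-based loop, a direct translation of `for (int i = 0; i < n; i++)`.
indexed = []
for i in range(len(languages)):
    indexed.append(f"{i}: {languages[i]}")

# Idiomatic Python: enumerate yields the index and the item together.
idiomatic = [f"{i}: {name}" for i, name in enumerate(languages)]

# Both approaches build the same list of labels.
print(indexed == idiomatic)
```

Knowing the first form already is what makes the second easy to evaluate. The concept carries over and only the syntax is new.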

Ultimately, ChatGPT and the systems that follow it signal the advent of code generation tools unlike any we’ve seen before. These tools are incredibly powerful and appealing, but they require the guidance of a trained professional. Learning to program will help you know when, where, and how to use these tools to build the best software you can.

Personally, I’m incredibly excited to see where the future takes us and see if we can improve and harness these incredible tools to build even better things as software engineers.

This post was originally published elsewhere on December 19th, 2022.