Building a Digital Dungeon Master with Semantic Kernel, C#, and Azure

Build a Solo RPG Dungeon Master using C# and Azure OpenAI

As a kid, I was fascinated by the possibilities of tabletop role-playing games like Dungeons & Dragons, Shadowrun, and Mechwarrior, but rarely got to play them, aside from some combat encounters my brother and I set up and executed. Fast forward a few decades and I’m building software and AI solutions professionally with Leading EDJE, and one of my coworkers decided to start an RPG night.

I was hooked immediately by the idea of playing a character, exploring a game world and a game master’s story, and learning the mechanics of a game system with characters and a setting that evolved as sessions went on. And then I met one of the hardest parts of these games: scheduling play sessions everyone can attend. I simply didn’t want to wait a few weeks between sessions, and so I got a bright idea: I could build my own virtual dungeon master and use it in the weeks between sessions to explore different settings and character options.

So I did. This article walks you through my approach of using Semantic Kernel, C#, and services in Microsoft Azure to build a digital dungeon master capable of handling simple gameplay scenarios.

Note: this article is intended for hobbyist use and doesn’t assume any ownership of properties claimed by Wizards of the Coast, Hasbro, or other publishers. I strongly recommend you purchase any game system you seek to emulate with AI.

Scoping the Problem: the role of a Digital Dungeon Master

Every role-playing game system has its differences in terms of settings, rules, character progression, skills and attributes, and combat. This means that a dungeon master for a game like Dungeons and Dragons 5th Edition is going to be different than a dungeon master for GURPS or Traveller or Pathfinder 2nd Edition, or any number of games.

However, at their core, dungeon masters - typically a job occupied by a dedicated human player - do the following things:

- Begin a gameplay session with either an initial hook or a recap from last session

- Ask the players what they’d like to do in given circumstances

- Describe how the game reacts to the actions of the players (frequently involving describing the world or giving words to non-player characters)

- Present the players with mechanical challenges such as making them roll to see if they succeed at something

- Trigger combat encounters when appropriate

- Provide clarifications on points of confusion

- Bring each session to a close

Being a dungeon master is hard work and it’s as much of a social challenge as it is a mechanical or storytelling challenge. A truly gifted dungeon master will always be better than a digital dungeon master, but other players are not always available, or you don’t always have hours available for long play sessions.

My solution to this is to build a digital dungeon master (DDM) that can interpret player commands by responding to them with additional text and directives based on the story being told and the mechanics of the game’s ruleset.

To help illustrate this, here’s a short interaction between myself playing a human artificer stuck on an alien world and my digital dungeon master:

Player: I’d like to examine the alien plant.

DM: You sense that it possesses unique properties based on its unusual appearance and the faint aura it emits. It’s likely that it may be valuable, but without further experimentation or study, you cannot determine exactly how it could be used.

Player: I’d like to cut open a leaf of the plant with my dagger and then taste that small part of the leaf

DM: You carefully cut open a leaf of the herb with your scraggle dagger and take a small taste. The flavor is earthy and slightly bitter, with a hint of sweetness that lingers on your tongue. As you taste it, you start to feel a mild sensation—almost as if your body is subtly reacting to the herb. Please make a Constitution saving throw to determine how your body reacts!

Player: I rolled a 9 with my modifier. What sort of sensation?

DM: With a roll of 9 on your Constitution saving throw, you feel a mild discomfort as the flavor settles in your stomach. The bitterness seems to be a little stronger than you’d anticipated, and you’re starting to feel a slight wave of nausea as your body processes the herb. Take 1 point of damage.

This short interaction shows how the system functions. It describes what’s going on, gets actions from the player, and describes the immediate results of those actions. In this example, I did something adventurous and tasted an unknown plant, which could have had negative effects. To see how this worked mechanically, the dungeon master requested I make a standard dice roll for the ruleset I was using.

When I reported my result, the dungeon master then interpreted that result and told me how it impacted the story.

Note here that in this example the dungeon master isn’t making the roll for me or tracking my constitution score, health, or other stats; it’s just facilitating a story. As a player, I have agency to roll dice, make decisions, and track the results in whatever way I’d like.

I want to stress this because the role of my digital dungeon master is really that of a storyteller and facilitator, not a specific implementation of a specific game engine. When combat encounters occur, the digital dungeon master tells me what creatures I’m entering combat with and provides their basic stats, but I resolve the combat using miniatures or virtual markers in other software.

Couldn’t you just do this with ChatGPT?

If you do a search online for something like solo dungeons and dragons, D&D AI, or other topics, you’ll find a number of posts from people who have tried to use large language models (LLMs) like ChatGPT as dungeon masters.

Such a system typically involves a system prompt like this one:

You are a dungeon master directing play of a game called Basements and Basilisks. The user represents the only player in the game. Let the player make their own decisions. Ask the player what they’d like to do, but avoid railroading them or nudging them too much.

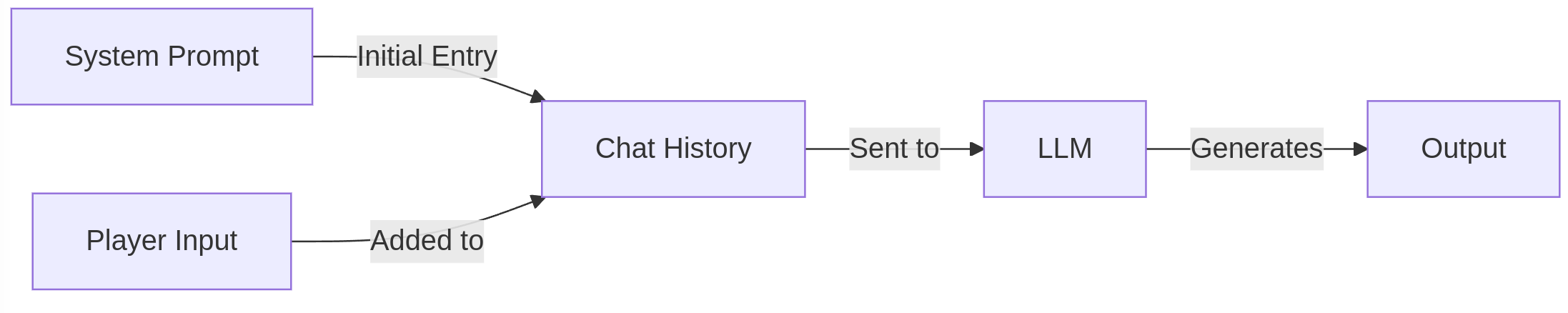

These prompts give the system basic flavor and instructions to customize the play experience. With the system prompt in place, it and the player input are sent to the LLM in order to generate a response as illustrated here:

These posts on using LLMs for gaming all highlight some common problems with this system that I experienced as well when prototyping:

- The LLM doesn’t tend to ask your character to perform skill checks (or gets the names of skills / attributes wrong when they do)

- The LLM loses track of prior game state in long sessions or when backtracking

- The LLM doesn’t challenge the player enough with setbacks

- The LLM takes actions on your character’s behalf that you didn’t want it to take, thus taking away some of your agency

The core problem people run into is that LLMs were largely trained on large bodies of text, including stories and articles. While I imagine some gameplay session transcripts of various role-playing games were included, these are undoubtedly the minority.

Because of this, the output of an LLM is going to be biased towards non-interactive things, such as the dungeon master describing your character doing a great many things, the LLM simply saying “Yes, you succeed” and not challenging the player, and the system forgetting to do basic things like ask for skill checks.

The backtracking problem is tougher, because while LLMs can be good at describing things, if they lose track of how they described something before, they may describe it wildly differently if you ever backtrack into an area, because they have no persistent knowledge of the world they are “creating” for you.

So, ChatGPT and other solutions are a good start to solving this problem, but they’re not the full solution. For that, we need something to tie together a large language model with other sources of truth: AI orchestration.

AI Orchestration and Dungeon Mastery

AI Orchestration is a means of tying together AI systems with external sources of data and even potentially actions to invoke. These data sources can be things like live results from APIs, indexed knowledge base contents, or the results of pre-defined SQL queries against a database you control.

Actions could be something like creating a troubleshooting ticket in response to a customer message, or initiating some other process - typically the first step on a workflow that eventually has human review and approval involved.

AI Orchestration vs Retrieval-Augmented Generation (RAG): You may be familiar with systems that use RAG for fetching data sources and AI orchestration may sound a lot like them. That’s because it is. However, while RAG is typically focused on a single data source of a single type of data, AI orchestration systems may orchestrate multiple instances or even types of data sources - or trigger additional actions as described above. You can think of AI orchestration as a more complex variant of RAG with additional capabilities.

For my digital dungeon master, the data sources of note were information on the player character, information on the story world, a list of skills and attributes defined by the ruleset I was playing under, a recap of last session, and other potentially relevant pieces of information.

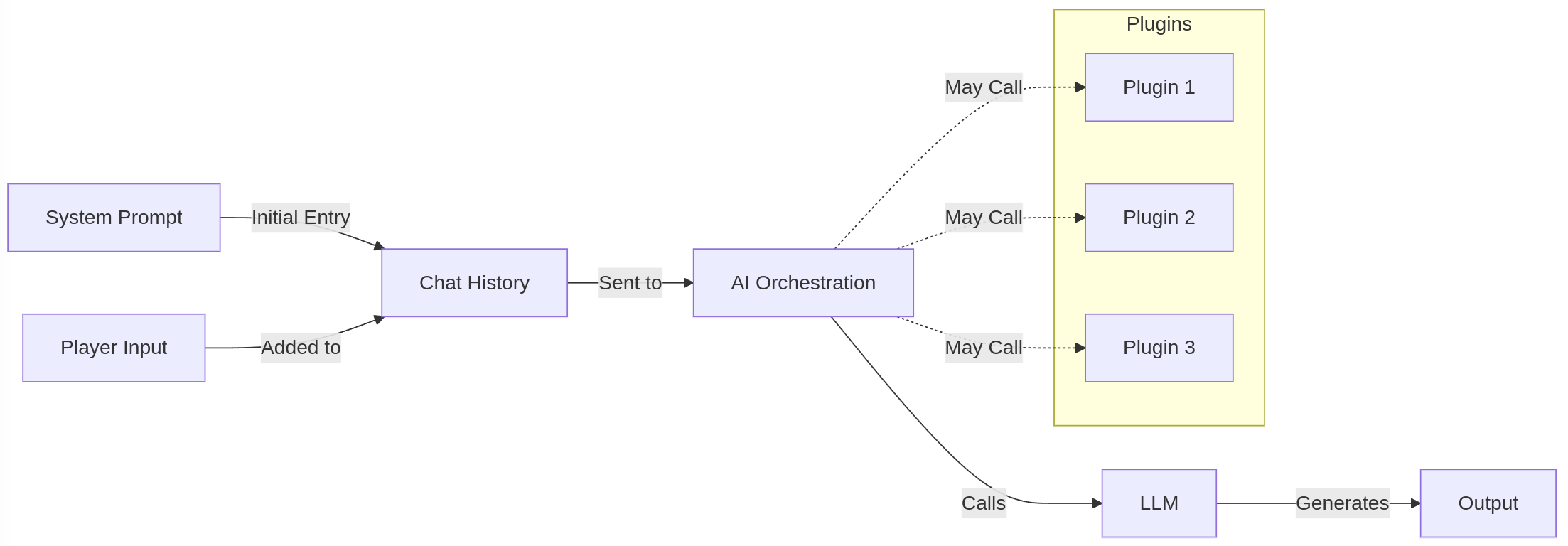

When a message from the user comes in, it and the session history up to this point are sent to the AI Orchestration system. The system then looks at the various external sources of information it has, summarizes them, and provides all of this information to a LLM. The LLM may choose to generate a response, or it may request that the orchestration engine invoke one or more of its functions in order to generate a response as shown here:

By incorporating additional capabilities into an LLM, the LLM is able to consult external data in order to ground itself further, clarify points of confusion, or look up old information. This ultimately leads to a richer and more extensible play experience.
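To make that request-and-invoke cycle concrete, here is a minimal sketch in plain C# with no Semantic Kernel dependency. Everything in it is hypothetical: `ModelTurn`, `ToyOrchestrator`, and the delegate standing in for the LLM are names made up for illustration, and a real orchestration engine does far more than this.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical types for illustration only; Semantic Kernel's real types differ.
// A model turn is either a final answer or a request to call a named function.
public record ModelTurn(string? FunctionToCall, string? FinalText);

public static class ToyOrchestrator
{
    // Repeatedly asks the model for its next turn, invoking any function it
    // requests and feeding the result back in as extra context, until the
    // model produces a final answer or the step limit is reached.
    public static string Respond(
        string playerMessage,
        IReadOnlyDictionary<string, Func<string>> functions,
        Func<IReadOnlyList<string>, ModelTurn> model,
        int maxSteps = 5)
    {
        List<string> context = new() { $"player: {playerMessage}" };

        for (int step = 0; step < maxSteps; step++)
        {
            ModelTurn turn = model(context);

            if (turn.FunctionToCall is null)
            {
                return turn.FinalText ?? string.Empty;
            }

            // Unknown function names are reported back to the model rather than thrown
            string result = functions.TryGetValue(turn.FunctionToCall, out Func<string>? fn)
                ? fn()
                : $"Unknown function '{turn.FunctionToCall}'";

            context.Add($"function {turn.FunctionToCall}: {result}");
        }

        return "The dungeon master is lost in thought. Please try again.";
    }
}
```

The step limit is a design choice worth keeping in any version of this loop: without it, a model that keeps requesting functions would never return control to the player.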

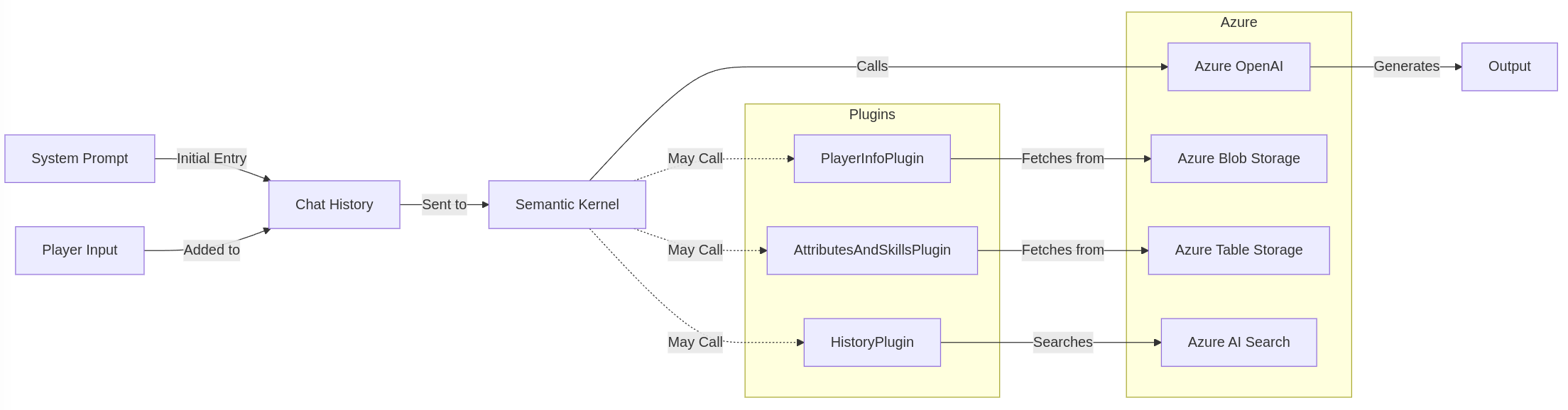

My current solution involves using Microsoft Semantic Kernel (SK) as the AI Orchestration solution, C# as my programming language, GPT-4o-mini on Azure with Azure OpenAI as my large language model, and various data sources in Azure Table Storage, Azure Blob Storage, and Azure AI Search.

Such a system is illustrated below:

By incorporating these external data sources, a digital dungeon master tends to behave in a manner closer to what a human dungeon master might and has fewer issues during play sessions, though I do find myself still politely correcting my dungeon master once or twice a session.

It’s important to note here that I do not view this digital dungeon master prototype as completed, but AI orchestration has given me enough value out of it to have a number of successful play sessions - and opportunities for further refinement of the engine.

My current implementation of this system is evolving rapidly and involves a number of cloud data sources I’m maintaining and cannot share, but throughout the rest of this article we’ll look at a basic version of this system focused on local data sources and using Microsoft Semantic Kernel and C# code to implement an AI Orchestration system.

This early variant will focus on local data sources instead of cloud data, but will still use an LLM hosted on Azure OpenAI. The code I’m basing this article on can be found in the SKRPGArticle branch of my BasementsAndBasilisks GitHub Repository, though note some additional configuration and setup will be required to run it locally. Refer to the project’s README.md for specifics.

Building a Dungeon Master Kernel with Semantic Kernel

Microsoft Semantic Kernel works by offering a central Kernel that orchestrates all actions in response to user inputs. Semantic Kernel is built to be configurable and extensible and can integrate into things like planners, multiple agent systems, and LLMs hosted on a variety of services (including locally).

I’ll be using version 1.30.0 of the Microsoft.SemanticKernel NuGet package for this code, so keep in mind that there may be some differences if you use a later version of that package.

Setting up the Kernel

In order to work with Semantic Kernel, we need to first set up our Kernel once. After this, we can invoke it as many times as we want to get responses to requests.

I usually choose to create a single class as a wrapper around everything Semantic Kernel, and that’s what I chose to do for my digital dungeon master project:

    using Microsoft.SemanticKernel;
    using Microsoft.SemanticKernel.ChatCompletion;
    using Microsoft.SemanticKernel.Connectors.OpenAI;

    public class BasiliskKernel
    {
        private readonly Kernel _kernel;
        private readonly IChatCompletionService _chat;
        private readonly ChatHistory _history;

        // Some logging details are omitted from this example
        public BasiliskKernel(IServiceProvider services,
            string openAiDeploymentName,
            string openAiEndpoint,
            string openAiApiKey)
        {
            IKernelBuilder builder = Kernel.CreateBuilder();
            builder.AddAzureOpenAIChatCompletion(openAiDeploymentName,
                openAiEndpoint,
                openAiApiKey);
            _kernel = builder.Build();

            // Set up services
            _chat = _kernel.GetRequiredService<IChatCompletionService>();
            _history = new ChatHistory();
            _history.AddSystemMessage("""
                You are a dungeon master directing play of a game called Basements and Basilisks.
                The user represents the only player in the game. Let the player make their own decisions,
                ask for skill checks and saving rolls when needed, and call functions to get your responses as needed.
                Feel free to use markdown in your responses, but avoid lists.
                Ask the player what they'd like to do, but avoid railroading them or nudging them too much.
                """);

            // Plugin registration omitted for now
        }

        // Other members hidden for now
    }

This code sets up the _kernel field to store the Kernel we configure with Semantic Kernel using the IKernelBuilder.

While there are many things you can add with the kernel builder such as memory, logging, and additional connections, this code only connects the large language model for chat completions, and specifically connects to an Azure OpenAI resource.

Note: When using LLMs, make sure to use something later than GPT-35-Turbo, the original ChatGPT. You’ll encounter mismatches with Semantic Kernel if the LLM is too old to support tool calls, as I found out in early experimentation.

Once the builder is configured, call its Build() method to get a reference to the Kernel. I also take the opportunity to get the IChatCompletionService out of the kernel and instantiate a shared ChatHistory instance with our system’s main prompt.
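How the deployment name, endpoint, and API key reach that constructor is up to you. As a minimal sketch, assuming you keep them in environment variables, something like the following keeps the key out of source control. The `AzureOpenAISettings` record and the three variable names are assumptions made up for this example, not something the project or Azure requires.

```csharp
using System;

// Hypothetical settings holder; the environment variable names are assumptions
public record AzureOpenAISettings(string DeploymentName, string Endpoint, string ApiKey)
{
    // Reads all three values, failing fast with a clear message if one is missing.
    // The optional lookup delegate exists so the method can be tested without
    // touching real environment variables.
    public static AzureOpenAISettings FromEnvironment(Func<string, string?>? lookup = null)
    {
        if (lookup is null)
        {
            lookup = Environment.GetEnvironmentVariable;
        }

        return new AzureOpenAISettings(
            Require(lookup, "AZURE_OPENAI_DEPLOYMENT"),
            Require(lookup, "AZURE_OPENAI_ENDPOINT"),
            Require(lookup, "AZURE_OPENAI_API_KEY"));
    }

    private static string Require(Func<string, string?> lookup, string name)
    {
        string? value = lookup(name);
        if (string.IsNullOrWhiteSpace(value))
        {
            throw new InvalidOperationException($"Missing required setting '{name}'");
        }

        return value;
    }
}
```

The resulting values can then be passed straight into the BasiliskKernel constructor shown earlier.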

Chatting with the Kernel

Now that we’ve seen how the Kernel is initialized, let’s see how I’m handling chat messages:

    using System.ClientModel; // Provides ClientResultException

    public class BasiliskKernel
    {
        // Other members hidden...

        public async Task<string> ChatAsync(string message)
        {
            try
            {
                _history.AddUserMessage(message);

                OpenAIPromptExecutionSettings executionSettings = new OpenAIPromptExecutionSettings()
                {
                    FunctionChoiceBehavior = FunctionChoiceBehavior.Auto()
                };

                ChatMessageContent result = await _chat.GetChatMessageContentAsync(_history, executionSettings, _kernel);
                _history.AddAssistantMessage(result.Content);

                // I actually wrap this into a more extensible object, but I'm simplifying this to a string for this article
                return result.Content ?? "I'm afraid I can't respond to that right now";
            }
            catch (HttpOperationException ex)
            {
                // Content filter rejections surface as an HTTP failure wrapping a ClientResultException
                if (ex.InnerException is ClientResultException && ex.Message.Contains("content management", StringComparison.OrdinalIgnoreCase))
                {
                    return "I'm afraid that message is a bit too spicy for what I'm allowed to process. Can you try something else?";
                }

                return $"Could not handle your request: {ex.Message}";
            }
        }
    }

The first thing we do is add the message to our ChatHistory as a user message so the Kernel can see it and respond to it.

Next, we define some execution settings that tell the Kernel it’s allowed to automatically call functions we provide (more on this later).

After this, we call GetChatMessageContentAsync on our IChatCompletionService which in this case is Azure OpenAI’s GPT-4o-mini LLM. We pass the method our current chat history (including the latest user message), our settings, and a reference to the kernel which should connect it to additional resources.

This method call will return with a ChatMessageContent result which will include the chat response in the Content property.

We then take this message and add it back into the history as the assistant’s response to give ourselves context for the next cycle of interaction.

You might also note that I’m catching HttpOperationException here and checking whether its inner exception is a ClientResultException and whether its message contains the phrase “content management”. This is to identify cases where Azure Content Moderation has flagged the input or output of a message to an Azure OpenAI LLM as in violation of one of its filters for content.

In reality, this wound up being an important thing to catch. I found myself hunting wild animals called “feathered hares” in one game session in my survival-oriented campaign. After some creative scheming, I caught a creature in a magical trap. My next step was to “put an end” to the creature, cook it, and eat it, but Azure Content Moderation didn’t like my actions when I described what I wanted to do with my dagger. My input in this case triggered the violence threshold of the LLM.

Thankfully, this was not real violence and this setting could be relaxed on Azure to allow for tame forms of violence like you’d see in a game like this, but I found the story humorous enough to share here.
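For context, this is roughly how a method like ChatAsync can be driven from a console app. Treat it as a sketch rather than the project's actual game loop: `GameLoop`, `RunAsync`, and the "quit" command are names assumed for illustration, and the chat delegate is simply a stand-in for wherever replies come from.

```csharp
using System;
using System.IO;
using System.Threading.Tasks;

// Hypothetical helper for illustration; not part of the project described here
public static class GameLoop
{
    // Reads player input line by line, forwards each line to the chat delegate,
    // and writes the reply. Stops at end of input or when the player types "quit".
    public static async Task RunAsync(
        Func<string, Task<string>> chatAsync,
        TextReader input,
        TextWriter output)
    {
        while (true)
        {
            await output.WriteAsync("Player: ");
            string? line = await input.ReadLineAsync();

            if (line is null || line.Trim().Equals("quit", StringComparison.OrdinalIgnoreCase))
            {
                break;
            }

            if (string.IsNullOrWhiteSpace(line))
            {
                continue; // Don't spend tokens on empty messages
            }

            string reply = await chatAsync(line);
            await output.WriteLineAsync($"DM: {reply}");
        }
    }
}
```

With a BasiliskKernel instance named kernel, the call would look like `await GameLoop.RunAsync(kernel.ChatAsync, Console.In, Console.Out);`. Taking a delegate and reader/writer pair instead of the kernel and the console directly also makes the loop easy to exercise with canned input.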

Up to this point, I’ve shown you how to create a Kernel and invoke it, but I’ve not shown you the most magical part: creating your own plugin functions to give the AI Orchestration system something to orchestrate.

Defining Plugins for our AI Orchestration System

Semantic Kernel allows you to define functions for the kernel to invoke in order to adequately respond to user requests.

By providing custom functions we can add in additional capabilities for the system to invoke in order to fully understand the game world and the context of the player’s command.

These functions can come from a variety of sources including additional text prompts for large language models, but the most interesting and relevant one for this digital dungeon master comes through providing C# classes that offer up public methods that can be directly invoked by the kernel.

The SKRPGArticle branch of my digital dungeon simulator project defines the following plugins:

- AttributesPlugin - lists the core attributes associated with any character and what they represent

- ClassesPlugin - lists the major character classes that can be chosen within the game

- GameInfoPlugin - contains additional context about the game world and the desired tone and style of the storytelling

- PlayerPlugin - contains background information on the player character and the equipment and skills they possess

- QuestionAnsweringPlugin - a utility plugin designed to give yes, no, or maybe answers to requests for clarification from the dungeon master

- RacesPlugin - lists the major character races that people play as such as human, orc, or halfling

- SessionHistoryPlugin - retrieves the recap of the last play session

- SkillsPlugin - lists the major skills that are available to players for skill checks, what they do, and what attribute they’re associated with

In this branch all of these plugins are locally defined and use hard-coded data. However, in more recent branches, the system has evolved to have fewer hard-coded plugins and more data that’s dynamically retrievable from blob or table storage on Azure. This makes the core engine more flexible in that the dungeon master can run a fantasy game in one setting and ruleset and a star-spanning space opera in another setting and with its own distinct rules.

Building a Plugin

Let’s look at how a simple plugin works, taking the AttributesPlugin as an example:

    using System.ComponentModel; // Provides the Description attribute
    using Microsoft.SemanticKernel; // Provides the KernelFunction attribute

    [Description("Provides information on attributes and stats supported by the game system")]
    public class AttributesPlugin
    {
        [KernelFunction, Description("Gets a list of attributes in the game and their uses.")]
        public IEnumerable<AttributeSummary> GetAttributes()
        {
            return new List<AttributeSummary>
            {
                new() { Name = "Strength", Description = "The ability to exert physical force and perform feats of strength" },
                new() { Name = "Dexterity", Description = "The ability to perform feats of agility, balance, and precision" },
                new() { Name = "Constitution", Description = "The ability to endure physical hardship, resist disease, and recover from injury" },
                new() { Name = "Intelligence", Description = "The ability to reason, recall information, and solve problems" },
                new() { Name = "Wisdom", Description = "The ability to perceive the world around you, understand it, and make good decisions" },
                new() { Name = "Charisma", Description = "The ability to influence others, lead, and inspire" }
            };
        }

        public class AttributeSummary {
            public required string Name { get; set; }
            public required string Description { get; set; }
        }
    }

This is a simple C# class with a single public method and a small inner class that defines the result of the public GetAttributes method.

The GetAttributes method creates and returns a collection of hard-coded attributes and has annotations that tell Semantic Kernel what the method does and what it should expect returned when the method is invoked. Note that we also have a Description annotation on the plugin class itself for the same reason.

This particular method returns a sequence of AttributeSummary entries, but it could just as easily have returned a string paragraph describing all six attributes. Semantic Kernel is able to interpret the JSON serialized version of these AttributeSummary objects, but it would also have been fine with a simple string or a primitive value such as an integer or a boolean (though those don’t make sense in this particular example).

Semantic Kernel would also have been okay if GetAttributes was async and returned a Task<IEnumerable<AttributeSummary>>. In general, I have found recent versions of Semantic Kernel to be very flexible and accommodating with the data types you return.
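To give a feel for what a JSON-serialized result looks like, this sketch serializes a list of summaries with System.Text.Json's default options. It assumes the kernel's own serialization resembles those defaults; the exact payload the model receives may differ between versions. `AttributeJsonPreview` is a hypothetical helper, and AttributeSummary is redeclared at the top level here only so the example stands alone.

```csharp
using System.Collections.Generic;
using System.Text.Json;

// Redeclared outside the plugin so this sketch is self-contained
public class AttributeSummary
{
    public required string Name { get; set; }
    public required string Description { get; set; }
}

// Hypothetical helper for illustration only
public static class AttributeJsonPreview
{
    // Serializes the summaries using System.Text.Json's default options
    public static string ToJson(IEnumerable<AttributeSummary> attributes)
        => JsonSerializer.Serialize(attributes);
}
```

Passing in a single entry with the name Strength and the description Physical force produces `[{"Name":"Strength","Description":"Physical force"}]`, which is compact and easy for a language model to read.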

Note: Plugins can also take in parameters, which the kernel will try to accurately provide. In my experience, this can be hit or miss with the kernel sometimes providing a string that’s very different than what you expect, though using Description on each parameter can help. If you need a plugin method that takes in one or more parameters, I recommend you return strings indicating what went wrong if you suspect the parameter you received was invalid.

As you can see, we have many options with Semantic Kernel plugin definitions, and these plugins are simple to define using C# code - they just require public methods with some additional documentation.
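Here is a minimal sketch of that advice. `SkillLookupPlugin` and `GetSkill` are hypothetical names, and the two skills are hard-coded sample data for this example rather than the project's actual SkillsPlugin. The method validates the argument it receives and responds with a descriptive string instead of throwing.

```csharp
using System;
using System.Collections.Generic;
using System.ComponentModel;
using Microsoft.SemanticKernel;

// Hypothetical plugin for illustration; requires the Microsoft.SemanticKernel package
[Description("Provides details on individual skills supported by the game system")]
public class SkillLookupPlugin
{
    // Sample data for this sketch; lookups ignore casing
    private static readonly Dictionary<string, string> Skills = new(StringComparer.OrdinalIgnoreCase)
    {
        ["Perception"] = "Wisdom: spotting hidden creatures, traps, or important details",
        ["Stealth"] = "Dexterity: moving quietly or avoiding detection"
    };

    [KernelFunction, Description("Gets the governing attribute and typical uses of a single skill.")]
    public string GetSkill(
        [Description("The exact name of the skill, such as Perception or Stealth")] string skillName)
    {
        // The model chooses this argument, so treat it as untrusted input
        if (string.IsNullOrWhiteSpace(skillName))
        {
            return "No skill name was provided. Valid skills are: " + string.Join(", ", Skills.Keys);
        }

        if (!Skills.TryGetValue(skillName.Trim(), out string? details))
        {
            // A message (rather than an exception) gives the model a chance to correct itself
            return $"'{skillName}' is not a known skill. Valid skills are: " + string.Join(", ", Skills.Keys);
        }

        return $"{skillName.Trim()} ({details})";
    }
}
```

Listing the valid values in the error message is a deliberate choice: it gives the model what it needs to retry with a better argument.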

Now that we’ve covered defining plugins, let’s see how they can be integrated into your Kernel object.

Registering a Plugin

Plugins can be registered in Semantic Kernel by calling the AddFromType method as shown here:

_kernel.Plugins.AddFromType<AttributesPlugin>(pluginName: "AttributesPlugin");

This is typically done right after you build your kernel using the IKernelBuilder as we discussed earlier.

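Semantic Kernel will attempt to instantiate your plugin for you, resolving whatever constructor parameters it has requested from a dependency injection container. AddFromType accepts an optional serviceProvider argument for this purpose, so pass in _kernel.Services (or your own IServiceProvider) if your plugin has constructor dependencies.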

The plugin name you provide is helpful information for Semantic Kernel to use in determining which plugin to invoke. Do not include spaces in your plugin name.

In more complex scenarios, you may want or need to cover instantiation yourself. For that case, there’s the AddFromObject method that works like this:

AttributesPlugin myPlugin = new();

kernel.Plugins.AddFromObject(myPlugin, pluginName: "AttributesPlugin");

This is a simple example and there’s no reason you wouldn’t use AddFromType in this case, but if your object was more complicated to construct, AddFromObject can be helpful.

To demonstrate this, let’s look at an extension method I made in BasiliskExtensions.cs in this project that detects all classes in the assembly that are decorated with a BasiliskPluginAttribute, instantiates each one, and then registers them as a plugin:

    public static void RegisterBasiliskPlugins(this Kernel kernel, IServiceProvider services)
    {
        // Find all Types that have the BasiliskPluginAttribute, instantiate them using services, and register them as plugins
        foreach (Type type in Assembly.GetExecutingAssembly().GetTypes())
        {
            BasiliskPluginAttribute? attribute = type.GetCustomAttribute<BasiliskPluginAttribute>();
            if (attribute != null)
            {
                kernel.Plugins.AddFromObject(services.GetRequiredService(type), attribute.PluginName, services);
            }
        }
    }

This code uses reflection to do its analysis and also retrieves the plugin from the IServiceProvider it was registered in earlier. Note that the service provider is also provided to the plugin here in the final services parameter.

That service registration is done via a separate extension method:

    public static void RegisterBasiliskPlugins(this ServiceCollection services)
    {
        // Find all Types that have the BasiliskPluginAttribute and register them as services
        foreach (Type type in Assembly.GetExecutingAssembly().GetTypes())
        {
            if (type.GetCustomAttribute<BasiliskPluginAttribute>() != null)
            {
                services.AddScoped(type);
            }
        }
    }

Finally, for full traceability, the BasiliskPluginAttribute is defined as follows:

    [AttributeUsage(AttributeTargets.Class)]
    public class BasiliskPluginAttribute : Attribute
    {
        public required string PluginName { get; init; }
    }

You won’t frequently need to get complex enough that you’ll have a reflection-based registration scenario like this, but in this particular project I found myself frequently adding and removing plugins and I wanted to reduce the risk of defining a plugin and failing to properly register it.

Now that we’ve seen how we instantiate and interact with the Kernel and how we provide it plugins, let’s look at a sample request that handles every aspect of this.

A Sample Session

To demo this in action, I’ll launch the game, which creates the Kernel and attaches all plugins, then sends this message to the kernel on behalf of the player:

Hello, Dungeon Master! Please greet me with a recap of our last session and ask me what my goals are for this session. Once you have these, ask me what I’d like to do.

To fulfil this task, Semantic Kernel calls the GetLastSessionRecap method on the SessionHistoryPlugin, inspects the resulting text, and then sends me the following message:

DM: Greetings, adventurer! Last session, your character Norrick, the human artificer, found himself stranded in an alien wilderness after a teleportation mishap. He navigated through a dense forest and discovered a decrepit tower filled with hints of magical experiments. Inside, he battled a Scraggle, fortified the door using his magic, and uncovered a weathered journal with alien script, as well as a map marking locations of interest. After tending to his basic needs, you gathered herbs, encountered feathered creatures, successfully hunted one, and cooked it over a campfire. You even constructed a makeshift sleeping area from the tower’s remnants and prepared for the dawn of a new adventure. What goals do you have for this session? Once you’ve shared those, let me know what you’d like to do!

I respond with the following:

Player: I’d like to do some exploring. What skills and attributes do you think will be most helpful?

On receiving this, the Kernel invokes the GetAttributes plugin method as well as the GetSkills plugin method and then combines the results in a single response:

DM: Exploring the wilderness and the tower can be an exciting endeavor! Here are some skills and attributes that might be particularly helpful for you during your exploration:

Attributes:

- Wisdom: Crucial for understanding your surroundings and making good decisions.

- Intelligence: Useful for reasoning and recalling information.

- Dexterity: Beneficial for performing agile movements and avoiding danger.

Skills:

- Perception (Wisdom): To spot hidden creatures, traps, or important details.

- Investigation (Intelligence): To examine items or areas more thoroughly.

- Survival (Wisdom): To track wildlife, find food, or navigate through challenging terrain.

- Stealth (Dexterity): If you want to move quietly or avoid detection.

With this information in mind, what specific area or aspect would you like to explore? Would you like to investigate the tower, delve deeper into the forest, follow the map you found, or something else?

Note that the DM has chosen to use Markdown bolding and lists in this response as I told it Markdown was acceptable formatting.

To me, the DM’s response is very accurate - though I might have also included the Nature skill in the results.

By providing the system with additional ways of getting grounded and accurate information about the campaign and the game world I’m able to help anchor it more in the realm of the story I’m trying to tell and less in the vastness of its training data, which also included game transcripts from games in other game systems or in other settings.

Exploring the Results

So, how good is this digital dungeon master? In short, good enough to write about, but not good enough to be done with the project or fully share it in an interactive manner.

Let’s talk about where it’s strong, where it’s weak, and how I plan on leveraging Semantic Kernel and Azure to help address its shortcomings.

The Good

The digital dungeon master is good enough to provide entertaining solo play sessions for a sustained amount of time - provided you’re entertained by the same types of things that I’m entertained by.

I’ve seen a number of videos and articles on the different types of gamers and what motivates one player may not motivate another. I’m personally motivated by exploring a setting, exploring a character, exploring mechanics, and building and developing things over time.

To me, the idea of a sandbox where I can explore, quest, and improve a character and environment over time is really interesting. I found myself getting lost in various play sessions working on using elaborate combinations of magic, objects, and my environment to try to fix broken doors, secure a tower, create medicine, translate writing, and even trying to catch fast and agile alien creatures. Being asked for die rolls in appropriate scenarios and seeing myself roll a series of 2’s and 3’s and needing to try something different was really interesting.

I also found the more that I gave the dungeon master, the more I got out of the experience. In other words, instead of saying “I’ll go south into the woods” I’d type “I want to enter the forest to the south. I’ll go very slow and be cautious because I don’t know what’s here”. The dungeon master would pick up on my caution and lean into that, prompting me about various dangers to avoid and roll for and giving me interesting decisions to make.

Over the course of four play sessions I played a human artificer who had teleported to an alien world by accident. I was able to explore a small region of alien wilderness and find interconnections between the flora, fauna, and ruins of an old tower. These all built up to an inciting incident when a circle of stones gave me a vision of a distant library of infinite knowledge that could potentially help me learn what I needed in order to escape the game world and go back to my character’s home.

While a lot of the flora and fauna reminded me of things I’d seen in the movie Avatar, I did find the setting interesting and the game even teased me with the idea that thoughts and emotions had a tangible effect on reality in this world, which was exactly the sort of sci-fi hook that’s up my alley.

Additionally, the game gave me a really good initial encounter as a solo level 1 character with an alien creature it called a “Scraggle” that included level-appropriate stats ready to be dropped into an encounter.

In fact, I was able to take the description of the room I was in and generate a top-down graphic for the room and plug both it and the Scraggle into D&D Beyond and run that as a combat encounter.

Remember: I’m not looking for my DM to administer all of the game rules or run combat for me; I’m looking for a system to provide an interesting narrative experience and trigger things like skill checks and encounters where appropriate. I view the player as almost a joint dungeon master in that they steer the direction of the narrative by sharing what they’re feeling and wanting to get out of a play session.

Now that we’ve covered what works well, let’s talk about what could be better.

The Bad

While the DM was generally good within the sessions of play, it did have gaps between the various sessions - particularly as I backtracked and re-entered areas described in previous sessions. This is because the full transcript of prior sessions is not yet available to my digital dungeon master, so it effectively generated a new description of the area which might not match the original description.

In these cases I was able to correct the DM and say “Hey, previously you described this room like this” and it’d agree with me and the story would pick up from there, but this did break the immersion.

I have a two-part solution for this problem that I plan on implementing in the future.

First, I want to upload my game transcripts to Azure Blob Storage where an Azure AI Search instance can find the blob and add it to an index. Then I can use Azure OpenAI embedding generation to compare the events of a game to prior sessions through semantic memory and Semantic Kernel. This will allow the system to have a broader memory of the player’s actions and the bot’s own responses.
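
To illustrate the comparison step, here is a minimal in-memory sketch of ranking prior transcript passages by embedding similarity. It is not the Azure AI Search or Semantic Kernel memory API: the `MemoryEntry` record and `TranscriptMemory` class are hypothetical names, and the embeddings are assumed to have already been generated elsewhere (for example by an Azure OpenAI embedding model). In the planned design the search service would do this ranking for you.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical shape for a stored transcript passage and its embedding vector.
public sealed record MemoryEntry(string Text, float[] Embedding);

// Hypothetical helper showing how embeddings can be compared; not a real library type.
public static class TranscriptMemory
{
    // Cosine similarity: 1 means same direction, 0 means unrelated (orthogonal).
    public static double CosineSimilarity(float[] a, float[] b)
    {
        if (a.Length != b.Length)
            throw new ArgumentException("Vectors must have the same length.");

        double dot = 0, magA = 0, magB = 0;
        for (int i = 0; i < a.Length; i++)
        {
            dot += a[i] * b[i];
            magA += a[i] * a[i];
            magB += b[i] * b[i];
        }

        // Treat a zero-length vector as having no similarity to anything.
        if (magA == 0 || magB == 0) return 0;
        return dot / (Math.Sqrt(magA) * Math.Sqrt(magB));
    }

    // Returns the passages whose embeddings are closest to the query embedding.
    public static IReadOnlyList<MemoryEntry> FindMostRelevant(
        IEnumerable<MemoryEntry> entries, float[] queryEmbedding, int count)
    {
        return entries
            .OrderByDescending(e => CosineSimilarity(e.Embedding, queryEmbedding))
            .Take(count)
            .ToList();
    }
}
```

The most relevant passages could then be added to the prompt so the dungeon master sees how an area was described the first time.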

Second, I plan on implementing a location plugin. When the game enters a new area, I’d like it to request information from this location service. If the location is known, the old summary is returned. If a location is not known, I can use a series of random tables to determine key pieces of information about this area, then have the LLM generate a description for the area and store that description in the location service. In this way, location information can be persistent across sessions, improving the system’s grounding.
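
The lookup-or-generate flow described above could be sketched roughly as follows. Everything here is an assumption for illustration: the `LocationService` class, its tiny random tables, and the `describe` delegate that stands in for the LLM call are all hypothetical, and storage is a simple in-memory dictionary; a real version would need to persist it somewhere to survive between sessions. In Semantic Kernel, a method like `GetOrCreateLocation` could be exposed to the model by marking it with the `[KernelFunction]` attribute on a plugin class.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical location service; names, tables, and storage are illustrative only.
public sealed class LocationService
{
    // Small stand-in random tables; a real game would use much richer ones.
    private static readonly string[] Terrain = { "dense forest", "rocky ravine", "overgrown ruins" };
    private static readonly string[] Feature = { "a dry well", "glowing fungi", "a collapsed archway" };

    private readonly Dictionary<string, string> _summaries =
        new Dictionary<string, string>(StringComparer.OrdinalIgnoreCase);
    private readonly Func<string, string> _describe;
    private readonly Random _random;

    // describe: stand-in for the LLM call that turns a prompt into a description.
    public LocationService(Func<string, string> describe, Random random)
    {
        _describe = describe;
        _random = random;
    }

    public string GetOrCreateLocation(string locationName)
    {
        // Known location: return the stored summary so descriptions stay consistent.
        if (_summaries.TryGetValue(locationName, out string? known))
            return known;

        // Unknown location: roll on the random tables, then ask the LLM to describe it.
        string terrain = Terrain[_random.Next(Terrain.Length)];
        string feature = Feature[_random.Next(Feature.Length)];
        string prompt = $"Describe the location '{locationName}': {terrain} containing {feature}.";
        string summary = _describe(prompt);

        // Store the result so later visits get the same description.
        _summaries[locationName] = summary;
        return summary;
    }
}
```

Because the dictionary uses a case-insensitive comparer, “Old Tower” and “old tower” resolve to the same stored summary, and the description is only generated once.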

My final problem is that the game is almost too sandbox-oriented at times. The game did nothing proactively, and the only threats I encountered came when I told the system I was trying to be cautious or look for danger, or when I deliberately sought out a creature den I found marked on a map. In my four sessions of play, I encountered only one level 1 enemy that was an appropriate combat encounter for my character. The only other fight came when I sought out the creature den, resulting in an extremely challenging encounter with two “Duskrunners” who would have killed me nearly instantly if I hadn’t fled.

The game is working okay at a session level, but it needs a larger narrative focus that can span multiple play sessions and something to inject opportunities for random encounters and other interesting events. This dovetails nicely with where I want to take the system next.

Next Steps

As I’ve mentioned in this article, I’ve used Semantic Kernel and its plugins to good effect for a basic digital dungeon master. I have a good system that is very effective for exploration-oriented players like myself, but it has some critical gaps: its long-term memory and its lack of narrative drive.

I believe that the addition of semantic memory through Azure AI Search and a dedicated location service as described earlier will help with the “groundedness” of the system, but I need something more to give it a larger narrative feel, drive, and challenge.

What I think I’m ultimately missing is a more agentic approach where I split my Semantic Kernel into multiple separate agents, each with their own plugins and prompts.

In such a model I could have a storyteller agent who is directing the dungeon master to spice things up, add challenge and setback, and throw in random encounters from time to time. Such a storyteller reminds me a little of the AI Director feature first introduced in the game Left 4 Dead.
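
One simple way a storyteller could decide when to “spice things up” is to track how long the game has been quiet and raise the odds of an event each turn. The sketch below is only an illustration of that pacing idea, not Semantic Kernel’s Agents Framework and not how Left 4 Dead’s AI Director works internally; the `EncounterPacer` class and its default probabilities are hypothetical.

```csharp
using System;

// Hypothetical pacing helper for a storyteller agent; names and numbers are illustrative.
public sealed class EncounterPacer
{
    private readonly Random _random;
    private readonly double _baseChance;
    private readonly double _chancePerQuietTurn;
    private int _quietTurns;

    public EncounterPacer(Random random, double baseChance = 0.05, double chancePerQuietTurn = 0.05)
    {
        _random = random;
        _baseChance = baseChance;
        _chancePerQuietTurn = chancePerQuietTurn;
    }

    // The chance of an event grows with each quiet turn, capped at 100%.
    public double CurrentChance =>
        Math.Min(1.0, _baseChance + _quietTurns * _chancePerQuietTurn);

    // Call once per player turn. Returns true when the storyteller should ask
    // the dungeon master to inject an encounter, setback, or other event.
    public bool ShouldInjectEvent()
    {
        bool inject = _random.NextDouble() < CurrentChance;

        // Reset the quiet counter after an event; otherwise keep building tension.
        _quietTurns = inject ? 0 : _quietTurns + 1;
        return inject;
    }
}
```

When `ShouldInjectEvent` returns true, the storyteller could add an instruction to the dungeon master’s prompt, leaving the details of the event up to the LLM.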

Other agents in this model could involve the dungeon master who is responsible for generating a reply to the user, a rules lawyer who checks for relevant skill checks or game rules to cite, and an editor who makes sure the generated response to the player is of satisfactory quality, doesn’t take away player agency, and is formatted correctly.

Semantic Kernel features an experimental Agents Framework that may work well for such an experiment.

Finally, I have designed this system in such a way that it can work in a variety of game systems and game settings. My initial foray was limited to Dungeons and Dragons 5th Edition in a sci-fi / fantasy setting, but I want to run experiments using science fiction, horror, and even just modern-day exploration settings to see how the system handles different scenarios, player characters, and rulesets.

Regardless of how much I can improve this project beyond what I’ve already implemented, I’ve found that LLMs and AI Orchestration via Semantic Kernel and Azure OpenAI have been very effective in producing an interesting play experience. If you’re interested in this or have additional ideas for me to consider, leave a comment or get in touch.

This article is part of the C# Advent 2024 community series. Check out the rest of the articles at CSAdvent.Christmas