Azure Machine Learning Compute Resources

Making sense of compute instances vs compute clusters and more in Azure Machine Learning

Azure Machine Learning is a powerful suite of tools to manage datasets, train, version, and evaluate machine learning models, and deploy those models to endpoints where others can use them. But many of these tasks take raw computing power, so it is important to understand the many options we have available to us in Azure Machine Learning Studio.

Some of this content is also available in video form on YouTube.

Microsoft gives us several different flavors of compute at the moment:

- Compute instances

- Compute clusters

- Inference clusters

- Attached compute

- Local compute

- Azure Container Instance

- Azure Kubernetes Service

Each one of these compute resources fills a different need and has different capabilities and cost profiles. Let’s explore each one in turn.

Compute Instances

A compute instance is a preconfigured, cloud-hosted environment primarily intended for running applications such as Jupyter Notebooks, JupyterLab, VS Code, or RStudio.

Compute instances can also be used as a compute target for machine learning training runs; however, you will typically have a better experience running those experiments on a compute cluster instead.

Compute instances are created from the compute instance tab in the compute section of Azure Machine Learning Studio, but can also be created in code via the Azure ML Python SDK.

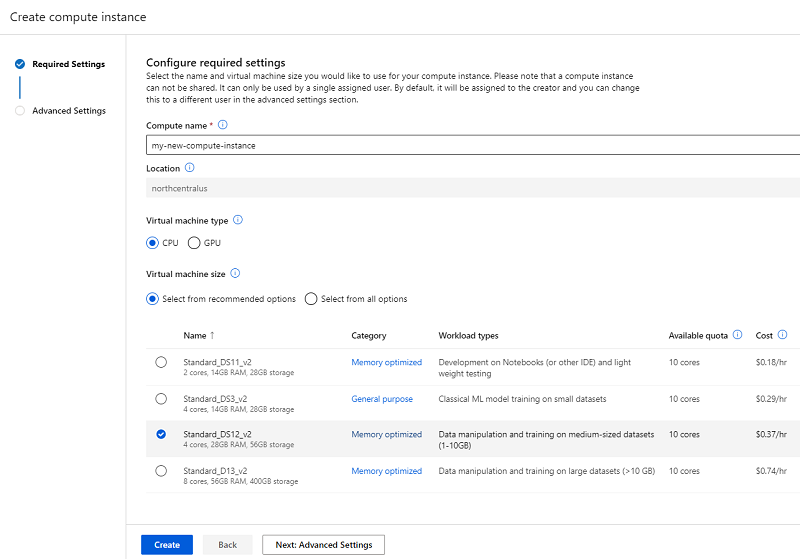

Compute instances can be CPU or GPU based and Microsoft offers a variety of resource profiles to meet your computing and storage needs at different price levels.

In my experience, a more powerful CPU or a GPU rarely helps much when running Jupyter notebooks: that work is highly iterative, and the biggest bottleneck is my own thinking speed. If you need to do some serious number crunching on a compute instance, however, you may benefit from a larger profile.

You only pay for a compute instance while it is running (though you do pay storage costs for any notebooks or other resources on it), so I strongly recommend manually stopping compute instances when you are done with them.

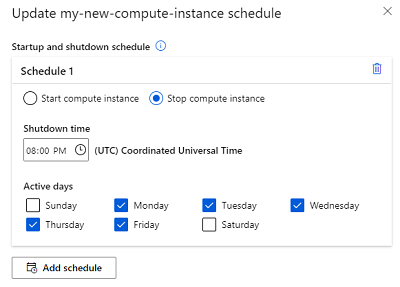

You can also set schedules to automatically turn your compute instances on or off. This is particularly handy if you are worried about forgetting to deactivate a compute instance or want them to always be active and available for your team within a certain time range.

Billing Tip: When working with compute instances, make sure to have a scheduled deactivation time each day of the week to avoid excessive costs.
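To see why a schedule matters, here is a rough back-of-the-envelope sketch comparing an instance that is never stopped with one scheduled to run from 8:00 to 18:00 on weekdays. The hourly rate is a made-up placeholder, not an Azure price, and storage costs are ignored; substitute the rate for the VM size you actually choose.

```python
# Hypothetical hourly rate for illustration only; check Azure pricing for your VM size.
HOURLY_RATE_USD = 0.50

DAYS = ["Mon", "Tue", "Wed", "Thu", "Fri", "Sat", "Sun"]


def weekly_running_hours(schedule):
    """Sum running hours from a {day: (start_hour, stop_hour)} schedule."""
    return sum(stop - start for start, stop in schedule.values())


# Forgetting to stop the instance: it runs 24 hours a day, 7 days a week.
always_on = {day: (0, 24) for day in DAYS}

# A schedule that starts the instance at 8:00 and stops it at 18:00 on weekdays only.
weekdays_only = {day: (8, 18) for day in DAYS[:5]}

always_on_cost = weekly_running_hours(always_on) * HOURLY_RATE_USD
scheduled_cost = weekly_running_hours(weekdays_only) * HOURLY_RATE_USD

print(f"Always on: {weekly_running_hours(always_on)} h -> ${always_on_cost:.2f}/week")
print(f"Scheduled: {weekly_running_hours(weekdays_only)} h -> ${scheduled_cost:.2f}/week")
```

With these assumptions the forgotten instance runs 168 hours a week versus 50 hours for the scheduled one, so the compute portion of the bill drops by roughly 70%.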

Compute Clusters

Compute clusters are the number one computing resource I care about in Azure Machine Learning. Compute clusters automatically scale up and down based on the work available and can be used to quickly perform large machine learning tasks including automated ML runs.

Unlike compute instances, compute clusters cannot be used to power Jupyter Notebooks; however, they are a better choice for model training.

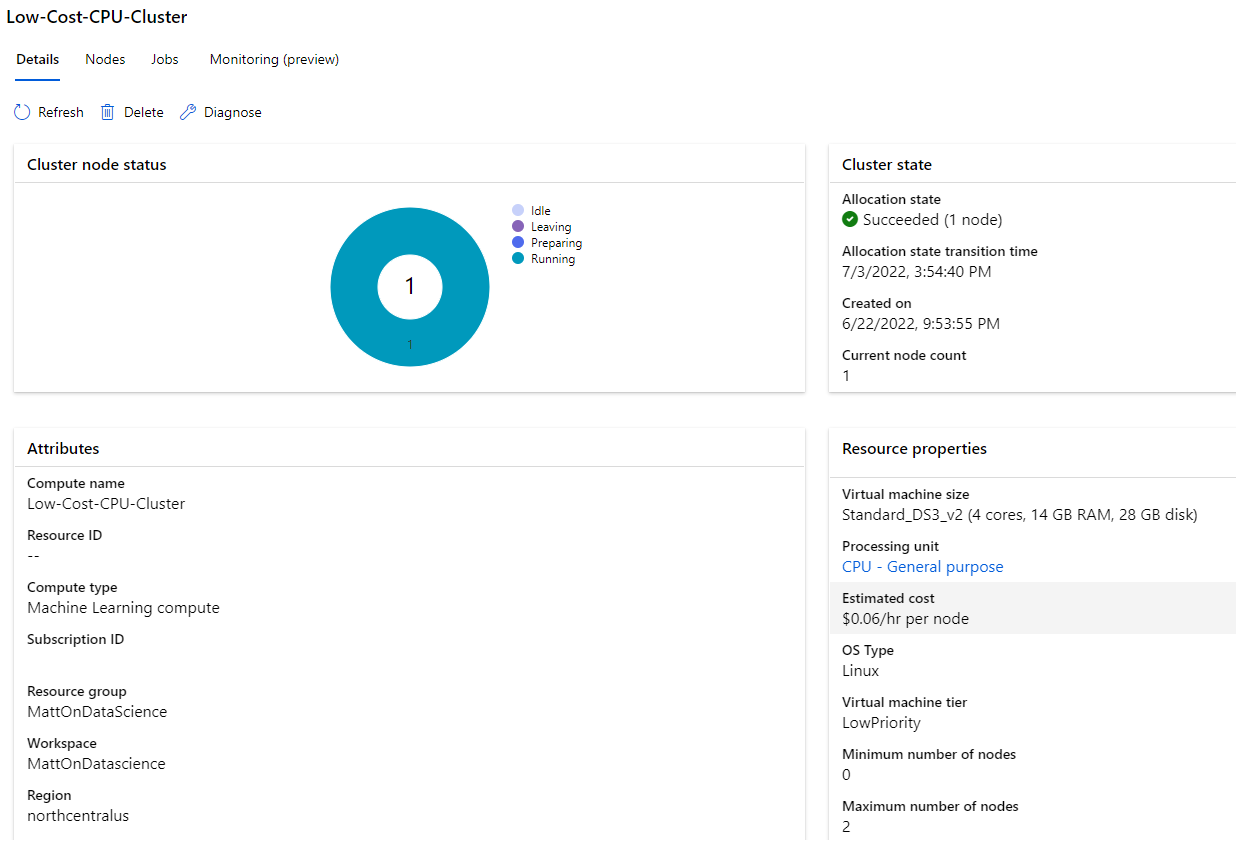

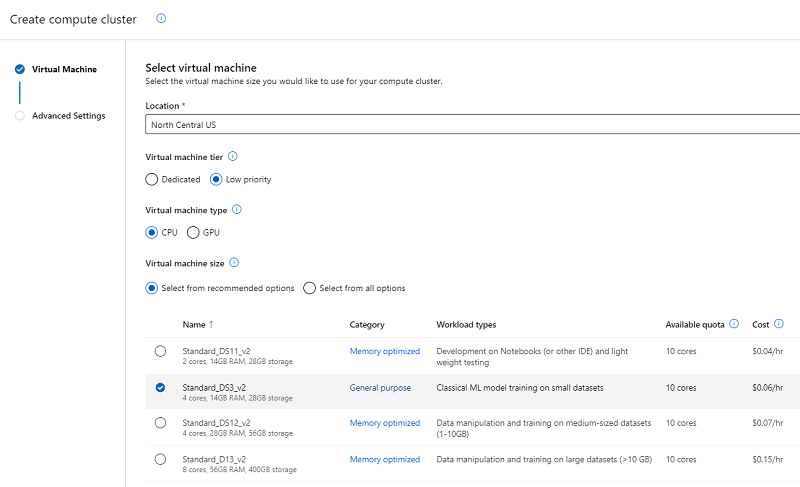

When you provision a cluster, you specify whether it uses CPU or GPU nodes, select the virtual machine size for each node, and choose between dedicated and low-priority virtual machines.

Low-priority virtual machines cost significantly less per hour, but Azure may preempt them to run dedicated jobs from other users. If your machine learning runs are not urgent, or you primarily train models during off-peak hours, low-priority nodes may be worth considering.
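One way to reason about this trade-off is a simple break-even calculation. The sketch below uses hypothetical hourly rates and models preemption as a fraction of work that has to be redone; real discounts vary by VM size and region, and preemption also costs you wall-clock time, which this ignores.

```python
# Hypothetical $/hour rates; not real Azure prices.
DEDICATED_RATE = 1.00
LOW_PRIORITY_RATE = 0.25


def effective_cost(hours, hourly_rate, rework_fraction=0.0):
    """Cost of a job when a fraction of its work must be redone after preemption."""
    return hours * (1 + rework_fraction) * hourly_rate


job_hours = 10
dedicated = effective_cost(job_hours, DEDICATED_RATE)
# Assume preemptions force us to redo half of the work.
low_priority = effective_cost(job_hours, LOW_PRIORITY_RATE, rework_fraction=0.5)

# Rework fraction at which low priority stops being cheaper than dedicated.
break_even_rework = DEDICATED_RATE / LOW_PRIORITY_RATE - 1

print(f"Dedicated: ${dedicated:.2f}")
print(f"Low priority (50% rework): ${low_priority:.2f}")
print(f"Break-even rework: {break_even_rework:.0%}")
```

With these made-up numbers, low priority stays cheaper unless preemptions force you to redo the job about three extra times over, so the real question is usually whether you can tolerate the delay rather than the cost.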

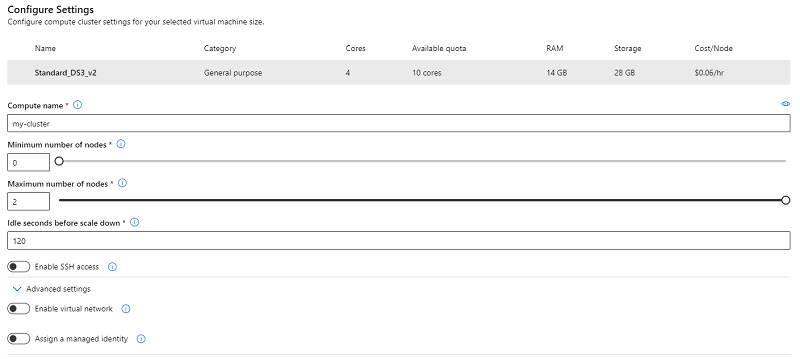

When you create a compute cluster, you also need to specify the minimum and maximum number of active nodes for the cluster.

The maximum possible number of nodes varies based on the compute tier you choose and the available compute quota for your Azure subscription (which you can increase by contacting support).

Billing Tip: Because Azure bills you for compute clusters only while nodes are running, I strongly recommend setting the minimum number of nodes to 0. That way your cluster scales down to zero nodes when it is not in use, and you are not billed for idle nodes waiting for tasks.
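Here is a simplified model of why the minimum node count matters. It assumes one job per node and that the cluster resizes once per hour, which is much coarser than the real autoscaler (clusters actually scale down after a configurable idle period). The functions and the workload are hypothetical; only the idea of clamping the node count between a minimum and a maximum comes from the cluster settings described above.

```python
def nodes_needed(pending_jobs, min_nodes, max_nodes):
    """Simplified scaling rule: one node per pending job, clamped to [min_nodes, max_nodes]."""
    return max(min_nodes, min(pending_jobs, max_nodes))


def billed_node_hours(hourly_jobs, min_nodes, max_nodes):
    """Total node-hours if the cluster resizes once at the start of each hour."""
    return sum(nodes_needed(jobs, min_nodes, max_nodes) for jobs in hourly_jobs)


# Invented workload: a burst of training jobs from 9:00 to 12:00, idle the rest of the day.
hourly_jobs = [0] * 9 + [3, 6, 2] + [0] * 12
assert len(hourly_jobs) == 24

scale_to_zero = billed_node_hours(hourly_jobs, min_nodes=0, max_nodes=4)
always_warm = billed_node_hours(hourly_jobs, min_nodes=1, max_nodes=4)

print(f"min_nodes=0: {scale_to_zero} node-hours")
print(f"min_nodes=1: {always_warm} node-hours")
```

Under these assumptions the day costs 9 node-hours with a minimum of 0 versus 30 node-hours with a minimum of 1, because the single warm node is billed for all 21 idle hours. The 6 jobs at 10:00 are also capped at 4 nodes by the maximum, so the extra jobs would wait in the queue.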

Inference Clusters

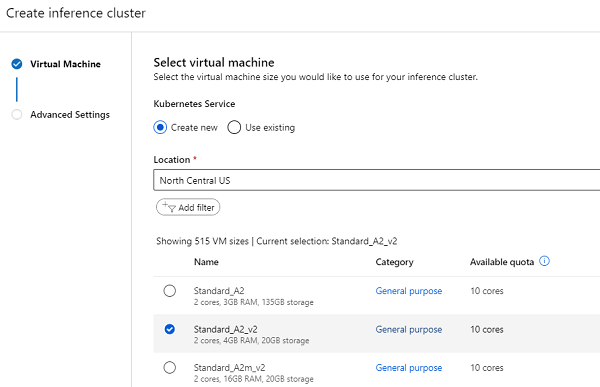

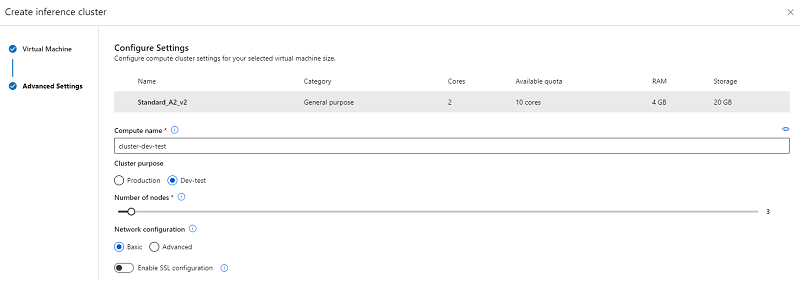

An inference cluster is a Kubernetes cluster used for pipeline-based inference scenarios.

Like other Azure compute resources, inference clusters are provisioned from the compute page of Azure Machine Learning Studio on the inference clusters tab.

From there, you specify the tier and location of the resource, the number of nodes, and whether the cluster is intended for dev/test or production purposes.

These clusters are much less frequently used than other resources in Azure Machine Learning Studio and I would recommend you avoid them unless you have an explicit need for them.

Attached Compute

Attached compute lets you connect existing compute resources elsewhere in Azure to Azure Machine Learning Studio. This allows you to use your existing virtual machines, Databricks clusters, or HDInsight clusters as compute targets when training machine learning models.

Attached Compute can make a lot of sense if you already have virtual machines or other resources provisioned in Azure that are likely to have idle capacity for model training.

However, if you do not already have these resources available it will be far more economical to use an Azure Machine Learning compute cluster instead.

Local Compute

On the topic of attached compute, I should point out that local compute is an option for some Azure Machine Learning tasks.

If you have a specific machine learning script you wish to execute and only want to store metrics, datasets, and trained models in Azure, you can use the Azure ML Python SDK to train machine learning models on your own compute resources.

Similarly, you can run Jupyter Notebooks, JupyterLab, RStudio, and VS Code on your own machines outside of Azure rather than using the notebooks or compute instance features in Azure Machine Learning Studio.

However, there are plenty of tasks in Azure Machine Learning that cannot currently be executed with local compute.

Azure Container Instance

Azure Container Instances (or ACI) are a way of deploying a trained machine learning model as a containerized endpoint.

Using ACI for deployment lets you easily package a trained model as a web service endpoint for low-volume use.

Azure Container Instances support key-based authentication or no authentication and are fine for most development, testing, and low volume scenarios.

Keep in mind that Azure Container Instances take time to create and deploy (around 20 minutes) and are always running, so they are more expensive than compute resources that can scale up and down on demand.
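Because an always-running endpoint is billed for every hour, its cost is fixed regardless of traffic. The sketch below makes that concrete with a hypothetical hourly rate (not a real Azure price) and a 30-day month, spreading the fixed cost over two invented request volumes.

```python
HOURS_PER_MONTH = 24 * 30  # assume a 30-day month

# Hypothetical $/hour for a small container instance; not a real Azure price.
ACI_HOURLY_RATE = 0.10


def monthly_always_on_cost(hourly_rate):
    """An always-running endpoint is billed for every hour of the month."""
    return hourly_rate * HOURS_PER_MONTH


def cost_per_request(hourly_rate, requests_per_month):
    """Spread the fixed monthly cost across the requests actually served."""
    return monthly_always_on_cost(hourly_rate) / requests_per_month


monthly = monthly_always_on_cost(ACI_HOURLY_RATE)
print(f"Monthly cost: ${monthly:.2f}")
for volume in (1_000, 100_000):
    per_request = cost_per_request(ACI_HOURLY_RATE, volume)
    print(f"{volume:>7} requests/month -> ${per_request:.5f} per request")
```

The bill is the same whether the endpoint serves one request or a hundred thousand, which is why a mostly idle endpoint is relatively expensive per request.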

Azure Kubernetes Service

Azure Kubernetes Service (or AKS) lets you deploy a trained machine learning model to a Kubernetes cluster consisting of a scalable number of nodes.

This gives you a more dynamically scalable machine learning endpoint for high-volume scenarios that need to support many simultaneous requests.

Additionally, Azure Kubernetes Service lets you use token-based authentication, where tokens expire and must be refreshed over time, which limits the damage if a token is compromised. This ultimately provides better security than the key-based approach offered by Azure Container Instances.
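On the client side, token-based authentication usually means caching a token and fetching a new one shortly before it expires. The sketch below shows that general pattern with a hypothetical `fetch_token` callable standing in for whatever call your client uses to obtain a token; it is not the Azure ML SDK API. The `Bearer` header is the common convention for sending such tokens.

```python
import time


class TokenCache:
    """Caches a bearer token and refreshes it shortly before it expires."""

    def __init__(self, fetch_token, refresh_margin_seconds=60, clock=time.time):
        # fetch_token is a hypothetical callable returning (token, expires_at_epoch_seconds).
        self._fetch_token = fetch_token
        self._margin = refresh_margin_seconds
        self._clock = clock
        self._token = None
        self._expires_at = 0.0

    def get(self):
        # Fetch on first use, or once we are within the margin of expiry.
        if self._token is None or self._clock() >= self._expires_at - self._margin:
            self._token, self._expires_at = self._fetch_token()
        return self._token

    def headers(self):
        return {"Authorization": f"Bearer {self.get()}"}


# Usage with a fake clock and a fake token issuer (both hypothetical).
now = [0.0]
issued = []


def fake_fetch():
    issued.append(len(issued) + 1)
    return f"token-{issued[-1]}", now[0] + 3600  # each token is valid for one hour


cache = TokenCache(fake_fetch, refresh_margin_seconds=60, clock=lambda: now[0])
first = cache.get()   # first call fetches a token
now[0] = 1800
second = cache.get()  # still well before expiry, so the cached token is reused
now[0] = 3550
third = cache.get()   # within 60 seconds of expiry, so a new token is fetched
```

Injecting the clock keeps the refresh logic testable without waiting for real tokens to expire.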

Which Compute Resource to Choose When?

So, which compute resources are right for you?

My recommendation is to use compute clusters wherever possible in Azure Machine Learning and to make sure you set a minimum size of 0 nodes for the cluster to keep costs under control.

For deployment, although Azure Kubernetes Service is intended for production workloads and Azure Container Instances are intended more for development and testing, you may want to try Azure Container Instances for cost control if you expect infrequent requests.

If costs are critical, you could use local compute for model training where possible and take your trained model outside of Azure if you find a more cost-effective way of hosting and deploying the trained model.

If you find yourself using Notebooks inside of Azure Machine Learning Studio, you will need to work with a compute instance as well, so make sure you schedule an automatic shutdown for every day of the week to avoid billing surprises.

My typical approach is to use compute clusters for training and to use Azure Container Instances strategically for model deployment.
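To summarize these rules of thumb in one place, here is a small, hypothetical decision helper. The `Workload` fields and the returned labels are invented for illustration and are not part of any Azure SDK; the rules only encode the recommendations made in this article.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    task: str                              # "notebook", "training", or "deployment"
    expected_requests: str = "low"         # "low" or "high"; only used for deployment
    has_idle_azure_capacity: bool = False  # existing VMs/Databricks/HDInsight with spare capacity


def recommend_compute(w: Workload) -> str:
    """Map a workload to the compute option this article suggests for it."""
    if w.task == "notebook":
        return "compute instance (with a daily scheduled shutdown)"
    if w.task == "training":
        if w.has_idle_azure_capacity:
            return "attached compute"
        return "compute cluster (min nodes = 0)"
    if w.task == "deployment":
        if w.expected_requests == "high":
            return "Azure Kubernetes Service"
        return "Azure Container Instance"
    raise ValueError(f"unknown task: {w.task!r}")


print(recommend_compute(Workload("training")))
print(recommend_compute(Workload("deployment", expected_requests="high")))
```

Real decisions involve more factors (security requirements, latency, existing infrastructure), so treat this as a starting checklist rather than a rule.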

Let me know what works for you and your team as you train and deploy your machine learning models!