How to Generate Text with OpenAI, GPT-3, and Python

Using large language model transformers to create generative text responses

In this article we’ll take a look at how you can use the Generative Pre-trained Transformer 3 (GPT-3) API from OpenAI to generate text content from a string prompt using Python code.

If you’re familiar with ChatGPT, the GPT-3 API works in a similar manner to that application: you give it a piece of text and it gives you back a piece of text in response. This is because ChatGPT is related to GPT-3, but presents its output in a chat application instead of via direct API calls.

If you ever thought it’d be cool to integrate transformer-based applications into your own code, GPT-3 allows you to do that via its Python API.

In this article we’ll explore how to work with GPT-3 from Python code to generate content from your own prompts - and how much that will cost you.

Note: This article is heavily inspired by part of Scott Showalter’s excellent talk on building a personal assistant at CodeMash 2023, and I owe him credit for showing me how simple it was to call the OpenAI API from Python.

Why use GPT-3 when ChatGPT is Available?

Before we go deeper, I should state that GPT-3 is older than ChatGPT and is a precursor to that technology. Given this, a natural question is “why would I use the old way of doing things?”

The answer to this is fairly simple: ChatGPT is built as an interactive chat application that directly faces the user. GPT-3, on the other hand, is a full API that can be given whatever prompts you want.

For example, if you needed to quickly draft an email to a customer for review and revision, you could give GPT-3 a prompt of “Generate a polite response to this customer question (customer question here) that gives them a high level overview of the topic.”

GPT-3 would then give you back the direct text it generated and your support team could make modifications to that text and then send it on.

This way, GPT-3 allows you to save time writing responses, but still gives you editorial control to fact-check its outputs and avoid the types of confusion you might see from interactions with ChatGPT, for example.

GPT-3 is useful for any circumstance where you want to be able to generate text given a specific prompt and then review it for potential use later on.
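To make the customer email idea a little more concrete, here is a minimal sketch of how you might assemble that kind of prompt in Python before sending it anywhere. The function name build_support_prompt and the sample question are my own placeholders, not part of the OpenAI library.

```python
def build_support_prompt(customer_question: str) -> str:
    """Wrap a customer's question in drafting instructions for the model."""
    question = customer_question.strip()  # drop stray whitespace from copy/paste
    return (
        "Generate a polite response to this customer question that gives "
        "them a high level overview of the topic.\n\n"
        f"Customer question: {question}"
    )


# Hypothetical example question, purely for illustration
prompt = build_support_prompt("  How do I reset my password?  ")
print(prompt)
```

Keeping the instructions in one place like this means your support team only ever has to supply the question itself.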

Getting a GPT-3 API Key

Before you can use GPT-3, you need to create an account and get an API key from OpenAI.

Creating an account is fairly simple and you may have even done so already if you’ve interacted with ChatGPT.

First, go to the Log in page and log in with either your existing OpenAI account or a Google or Microsoft account.

If you do not have an account yet, you can click the Sign up link to register.

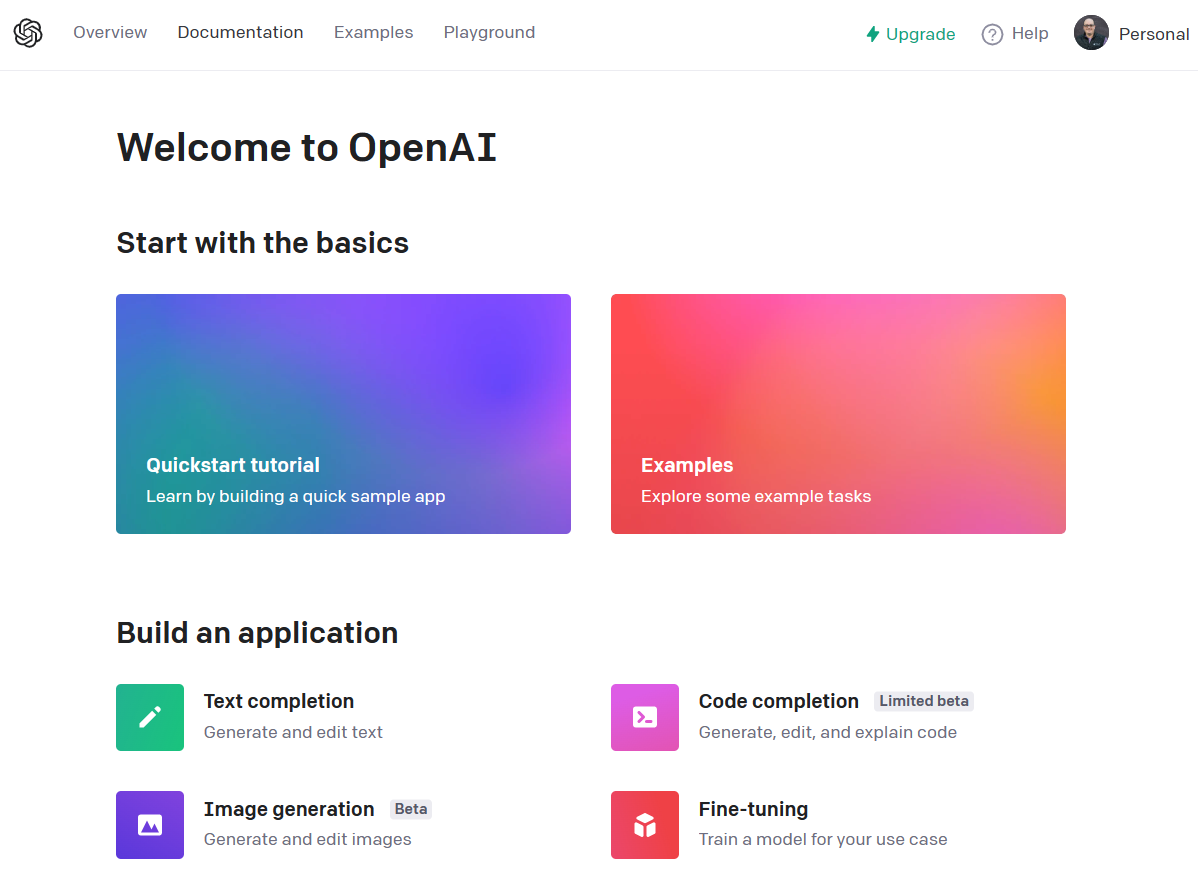

Once you’ve logged in, you should see a screen like the following:

While there are a number of interesting links to documentation and examples, what we care about is getting an API key that we can use in Python code.

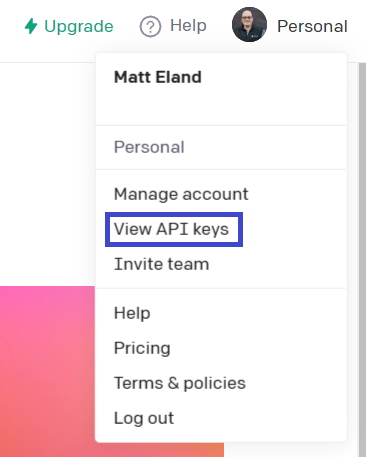

To get this API key, click on your picture and organization name in the upper right and then select View API Keys.

From here, click + Create new secret key to generate a new secret key. This key will only be visible once and you will not be able to recover it, so copy it down somewhere safe.

Once you have the API Key, it’s time to move into Python code.

Importing OpenAI and Specifying your API Key

For the remainder of this article, I’ll be giving you bits and pieces of code that might go into a .py file.

If you’re following along with these steps, you might choose to call this gpt3.py and use some edition of PyCharm.

The first thing we’ll need to do is install the OpenAI dependency. You can use PyCharm’s package manager pane to do this, but a more universal way would be to use pip or similar to install the openai dependency as follows:

pip install openai

Once that’s complete, add a pair of lines to import OpenAI and set the API Key to the one you got earlier:

import openai as ai

ai.api_key = 'sk-somekeygoeshere' # replace with your key from earlier

This code should work. However, it is a very bad practice to have a key directly embedded into your file, for a variety of reasons:

Tying a file to a specific key reduces the flexibility you have to use the same code in different contexts later on.

Putting access tokens in code is a very bad move if your code ever leaves your organization, or even if it reaches a team or individual who should not have access to that resource.

It is a particularly bad idea to push code to GitHub or other public code repositories where it might be spotted by others, including automated bots that search for keys in public code.

Instead, a better practice would be to declare the API Key as an environment variable and then use os to get that key out by its name:

import os

import openai as ai

# Get the key from an environment variable on the machine it is running on
ai.api_key = os.environ.get("OPENAI_API_KEY")
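One thing to watch for: os.environ.get quietly returns None when the variable is not set, so a missing key only shows up later as a confusing API error. Below is a small sketch of a helper that fails early with a clear message instead. The name load_api_key is my own invention for illustration, not something the openai package provides.

```python
import os


def load_api_key(env=os.environ, name="OPENAI_API_KEY"):
    """Return the API key stored under `name`, or raise a clear error."""
    key = env.get(name, "").strip()
    if not key:
        raise RuntimeError(f"Set the {name} environment variable before running.")
    return key


# Usage (assumes the variable is set on your machine):
# ai.api_key = load_api_key()
```

Passing the environment in as a parameter is only there to make the helper easy to test with a plain dictionary.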

Once you have the key set into OpenAI, you’re ready to start generating predictions.

Creating a Function to Generate a Prediction

Below I have a short function that makes a call out to OpenAI’s completion API to generate a series of text tokens from a given prompt:

def generate_gpt3_response(user_text, print_output=False):
    """
    Query OpenAI GPT-3 with the given prompt and return the generated text

    :param str user_text: the user's text to query for
    :param bool print_output: whether or not to print the raw output JSON
    """
    completions = ai.Completion.create(
        engine='text-davinci-003',  # Determines the quality, speed, and cost.
        temperature=0.5,            # Level of creativity in the response
        prompt=user_text,           # What the user typed in
        max_tokens=100,             # Maximum tokens to generate in the response
        n=1,                        # The number of completions to generate
        stop=None,                  # An optional setting to control response generation
    )

    # Displaying the output can be helpful if things go wrong
    if print_output:
        print(completions)

    # Return the first choice's text
    return completions.choices[0].text

I have tried to make the code above fairly well-documented, but let’s summarize it briefly:

The code takes some user_text and passes it along to the openai object, along with a series of parameters (which we’ll cover shortly).

This code then calls to the OpenAI completions API and gets back a response that includes an array of generated completions as completions.choices. In our case, this array will always have 1 completion, because n is set to 1.

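temperature might seem like an unusual name, but it borrows the idea of temperature from statistical physics and has nothing to do with training or learning rates: each time the model picks its next token, the temperature rescales the probabilities it samples from. A low temperature (near 0) concentrates the choice on the most likely tokens and gives very well-defined answers consistently, while a higher number (near 1 or above) spreads the probability across more tokens and makes the responses more varied and creative.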
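If you would like to see what temperature does numerically, here is a sketch of the usual technique: divide the model's raw token scores by the temperature before converting them to probabilities with a softmax. This is a toy illustration with made-up scores, not OpenAI's actual implementation, and the function name is my own.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Turn raw token scores into probabilities, scaled by temperature."""
    if temperature <= 0:
        raise ValueError("temperature must be positive in this sketch")
    scaled = [score / temperature for score in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    weights = [math.exp(s - peak) for s in scaled]
    total = sum(weights)
    return [w / total for w in weights]


scores = [2.0, 1.0, 0.5]  # made-up scores for three candidate tokens
cool = softmax_with_temperature(scores, 0.2)  # low temperature
warm = softmax_with_temperature(scores, 1.5)  # high temperature
print(cool)
print(warm)
```

With the low temperature, nearly all of the probability lands on the top-scoring token. With the higher one, the other candidates get a realistic chance of being picked, which is where the extra variety comes from.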

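I want to highlight the max_tokens parameter. Here a token is a chunk of text: often a short word, part of a longer word, or a piece of punctuation. As a rough guide, one token is about four characters of English text.

max_tokens limits only the generated response, not the prompt. If you give GPT-3 a max_tokens of 10 and a prompt of “How are you?”, the response you get will be up to 10 tokens long at most. The tokens in your prompt still count toward what you are billed for, and the prompt plus max_tokens must fit within the model’s context length.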
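To get a feel for token budgets without calling the API, here is a rough sketch that estimates token counts using the "about four characters per token" rule of thumb for English text and then shrinks a requested max_tokens so that the prompt and the response fit in the model's context window together. Both helper names are my own, the estimate is not a real tokenizer, and the 4097 default is my assumption for text-davinci-003, so check the documentation for the model you actually use.

```python
import math

CHARS_PER_TOKEN = 4  # rough rule of thumb for English text, not an exact count


def estimate_tokens(text):
    """Roughly estimate how many tokens a piece of English text uses."""
    return max(1, math.ceil(len(text) / CHARS_PER_TOKEN))


def clamp_max_tokens(prompt, requested, context_window=4097):
    """Shrink `requested` so prompt plus completion fit in the context window."""
    room = context_window - estimate_tokens(prompt)
    if room <= 0:
        raise ValueError("prompt is too long for the assumed context window")
    return min(requested, room)


print(estimate_tokens("How are you?"))
print(clamp_max_tokens("How are you?", 100))
```

For short prompts the clamp changes nothing, but it keeps a very long prompt from triggering an error about exceeding the context length.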

Pricing and GPT-3

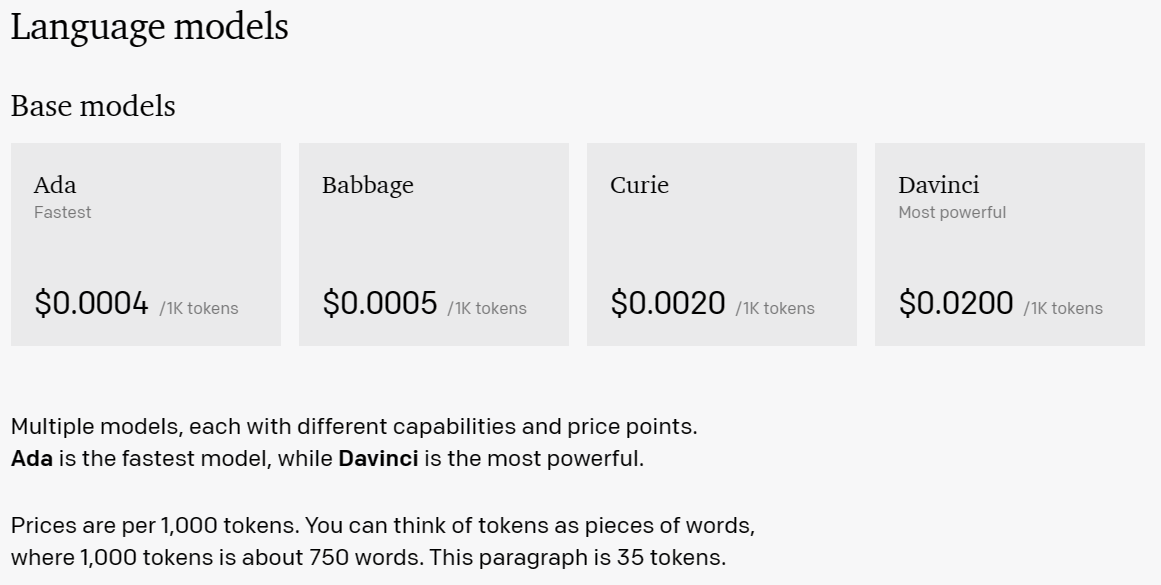

Next, let’s highlight the cost aspect of things. With GPT-3, you pay based on the number of tokens you send to and receive from the API, at a rate that depends on the engine you choose to use.

At the time of this writing, the pricing ranges from 4 hundredths of a cent to 2 cents per thousand tokens that go through GPT-3. However, you should always look at the latest pricing information before making decisions.

You have a few things you can do to control costs with GPT-3:

First, you can limit the max_tokens in the request to the API as we did above.

Second, you can make sure you constrain n to 1 to only generate a single draft of a response instead of multiple separate drafts.

Third, you can choose to use a less capable (and less accurate) language model at a cheaper rate. The code above used the DaVinci model, but Curie, Babbage, and Ada are all faster and cheaper than DaVinci.

However, if the quality of your output is paramount, using the most capable language model might be the most important factor for you.
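To see how these choices add up, here is a back-of-the-envelope sketch of a cost estimate for a single request. The function name is hypothetical, the default rate is simply the 2 cents per thousand tokens mentioned above, and I am assuming the prompt is billed once while each of the n completions is billed separately. Treat the result as an upper bound rather than an invoice.

```python
def estimate_cost_usd(prompt_tokens, max_tokens, n=1, usd_per_1k_tokens=0.02):
    """Upper-bound cost of one request: the prompt plus n full-length completions."""
    total_tokens = prompt_tokens + n * max_tokens
    return total_tokens / 1000 * usd_per_1k_tokens


# A 20-token prompt with max_tokens=100 at the most expensive rate
print(estimate_cost_usd(20, 100))

# The same request at the cheapest rate (4 hundredths of a cent per 1K tokens)
print(estimate_cost_usd(20, 100, usd_per_1k_tokens=0.0004))

# Asking for three drafts instead of one
print(estimate_cost_usd(20, 100, n=3))
```

Even at the top rate a single short request costs a fraction of a cent, but the numbers scale linearly with max_tokens, n, and the number of requests you make.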

You can get more information on the available models using the models API.

You can also list the IDs of all available models with the following Python code:

models = ai.Model.list()

for model in models.data:
    print(model.id)

Of course, you’ll need to search for more information about each model that interests you, as this list will grow over time.

Generating Text from a Prompt

Finally, let’s put all of the pieces together into a main method by calling our function:

if __name__ == '__main__':
    prompt = 'Tell me what a GPT-3 Model is in a friendly manner'
    response = generate_gpt3_response(prompt)
    print(response)

This will provide a specific prompt to our function and then display the response in the console.

For me, this generated the following quote:

GPT-3 is a type of artificial intelligence model that uses natural language processing to generate text. It is trained on a huge dataset of text and can be used to generate natural-sounding responses to questions or prompts. It can be used to create stories, generate summaries, and even write code.

If running this gives you issues, I recommend you call generate_gpt3_response with print_output set to True. This will print the JSON received from OpenAI directly to the console, which can expose potential issues.

Finally, due to high traffic into ChatGPT at the moment, you may occasionally receive overloaded error response codes from the server. If this occurs, try again after a while.
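If you would rather not retry by hand, a small wrapper can do the waiting for you. The sketch below is a generic retry helper with exponential backoff. It is not part of the openai package, the name call_with_retries is my own, and by default it retries on any exception, which is broader than you would want in real code.

```python
import time


def call_with_retries(func, attempts=3, base_delay=1.0, sleep=time.sleep,
                      retry_on=(Exception,)):
    """Call func(); on a listed error wait base_delay * 2**i seconds and retry."""
    for attempt in range(attempts):
        try:
            return func()
        except retry_on:
            if attempt == attempts - 1:
                raise  # out of attempts: let the caller see the error
            sleep(base_delay * 2 ** attempt)


# Usage (assumes generate_gpt3_response from earlier in this article):
# response = call_with_retries(lambda: generate_gpt3_response(prompt))
```

In practice you would narrow retry_on to whichever exception class your version of the library raises for overloaded or rate-limited requests, so that genuine bugs still fail immediately.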

Closing Thoughts

I find the code needed to work with OpenAI’s transformer models to be very straightforward and simple.

While I’ve been studying transformers for about half a year now at the time of this writing, I think we all had a collective “Oh wow, how do we put this in our apps?” moment when ChatGPT was unveiled.

The OpenAI API allows you to work with transformer-based models like GPT-3 and others using a very small amount of Python code at a fairly affordable rate.

ChatGPT currently reigns supreme for direct user-facing interactions, but GPT-3 lets us harness a similar level of power in our applications to draft responses, generate content for games and stories, and support other creative endeavors.

I’m excited to see where transformers will take us in the future. If you’ve built something interesting with these APIs, I’d love to hear about it.