🎵 Do you want to build a Chatbot? 🎵

Exploring the history of chatbots, conversational AI on Azure, and how ChatGPT changes everything

Cover image created by Matt Eland using MidJourney

This article is part of Festive Tech Calendar 2022 and was originally intended to be a high-level overview of the conversational AI capabilities in Azure. However, in late November 2022, ChatGPT was released and completely changed our expectations of conversational AI.

Instead, we’ll keep the festive theme and look at chatbots through 3 lenses:

- The ghost of chatbots past - the history of conversational AI from ELIZA to ChatGPT

- The ghost of chatbots present - what we can currently do with conversational AI on the Azure Platform

- The ghost of chatbots future - where I think conversational AI needs to go in the future

By the time we’re done, you should have a basic understanding of where conversational AI stands at the moment and where I think we need to head as an industry.

The Ghost of Chatbots Past

As a child of the 1980s, I’ve had the privilege of playing with many different conversational AI systems while growing up. Each one added something new to what we thought was possible, so we’ll briefly discuss each one.

ELIZA

While the original ELIZA program was created in the mid-1960s, I still remember playing with a version of it as a kid.

ELIZA was an attempt to simulate intelligence on a computer using a clever trick: since understanding the user was hard, the chatbot could be more convincing if it pretended to be a therapist and responded to anything the user said with a question about that statement.
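
To make the trick concrete, here is a minimal Python sketch of ELIZA-style reflection. It is not the original ELIZA script: the three rules, the pronoun table, and the `respond` function are hypothetical, chosen only to show how swapping pronouns and echoing a statement back as a question can feel conversational without any real understanding.

```python
import re

# Pronoun swaps so "my job" can be reflected back as "your job".
REFLECTIONS = {
    "i": "you", "me": "you", "my": "your", "am": "are",
    "you": "I", "your": "my", "are": "am",
}

# Hypothetical (pattern, response template) rules, checked in order.
RULES = [
    (re.compile(r"i feel (.*)", re.IGNORECASE), "Why do you feel {0}?"),
    (re.compile(r"i am (.*)", re.IGNORECASE), "How long have you been {0}?"),
    (re.compile(r"my (.*)", re.IGNORECASE), "Tell me more about your {0}."),
]


def reflect(fragment: str) -> str:
    """Swap first- and second-person words in a captured fragment."""
    words = fragment.lower().split()
    return " ".join(REFLECTIONS.get(word, word) for word in words)


def respond(statement: str) -> str:
    """Answer a statement with a question built from the statement itself."""
    cleaned = statement.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.fullmatch(cleaned)
        if match:
            return template.format(reflect(match.group(1)))
    # No rule matched: fall back to a generic prompt to keep the user talking.
    return "Please, go on."
```

For example, `respond("I feel sad about my job.")` returns "Why do you feel sad about your job?", while anything that matches no rule gets the generic "Please, go on."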

It was an interesting trick and it got people talking, but after a few interactions it was clear that ELIZA was just a clever way of parsing sentences and responding to them.

Still, I think ELIZA was a significant factor in society dreaming of later advances in conversational AI.

There are a number of online versions of ELIZA if you’re interested in seeing what it was like to chat with it.

Personal Assistants

A decade ago the world changed when personal assistants like Siri, Alexa, Cortana, and Google Assistant hit the market.

These services lived in consumer devices such as your cell phone, affordable Echo Dot devices, or even the Windows taskbar. This made conversational AI widely available to the general public for the first time.

Additionally, these offerings improved what was possible with conversational AI in a few key ways:

- These systems could convert the user’s spoken words into text

- These systems could synthesize speech from their own text output and reply aloud

- These systems could more intelligently map what the user was saying to things the device knew how to accomplish

These factors combined to make conversational AI much more accessible to the average person with a cell phone or computer. The capabilities of these systems also grew significantly over time as their makers studied common requests that the systems could not yet handle. Finally, many of these systems offered APIs that let developers integrate their own offerings into the conversational AI platform.

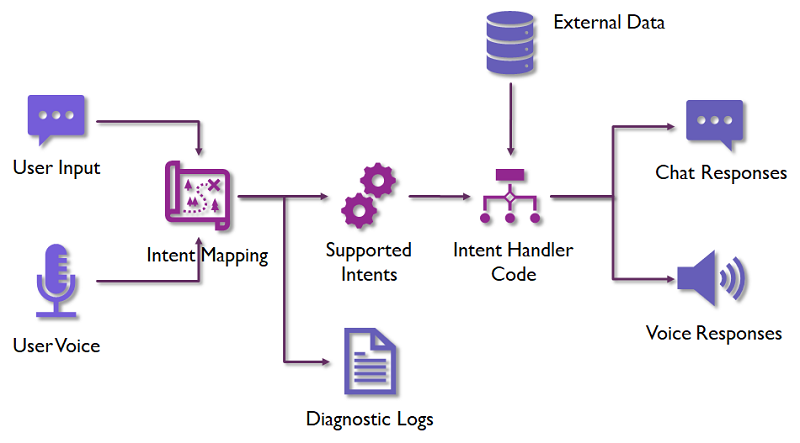

At a high level, these systems worked in the following way:

- The user says something to the device, typically starting with a wake word like “Alexa” or “Hey Google”

- The device uses speech recognition to translate those words to a string of text called the user’s utterance

- The string of text is then sent to an API that tries to map it to a supported intent that the system provides

- Once an intent is identified, any relevant entities are extracted, such as specific places or times the user might have mentioned

- The code handling that intent receives the relevant entities and generates a text reply

- That text reply is then converted to speech using some form of speech synthesis

- The user hears the spoken reply
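
The flow above can be sketched as a small Python pipeline. This is an illustration under heavy assumptions: speech recognition and synthesis are stubbed out, and the wake word, intent names, keyword matching, and handler functions are all hypothetical placeholders for what a real assistant would delegate to dedicated speech and language-understanding services.

```python
import re
from typing import Callable, Dict, Optional

WAKE_WORD = "hey assistant"  # hypothetical wake word


def speech_to_text(audio: str) -> str:
    # Stub: pretend the "audio" arrives already transcribed.
    return audio


def text_to_speech(text: str) -> str:
    # Stub: a real system would synthesize audio here.
    return f"<spoken>{text}</spoken>"


def detect_intent(utterance: str) -> str:
    # Hypothetical keyword-based mapping from utterance to intent.
    text = utterance.lower()
    if "weather" in text:
        return "GetWeather"
    if "timer" in text:
        return "SetTimer"
    return "None"


def extract_entities(utterance: str) -> Dict[str, str]:
    # Pull out a duration like "5 minutes" and a capitalized city after "in".
    entities: Dict[str, str] = {}
    duration = re.search(r"(\d+)\s+minutes?", utterance, re.IGNORECASE)
    if duration:
        entities["minutes"] = duration.group(1)
    city = re.search(r"\bin ([A-Z][a-z]+)", utterance)
    if city:
        entities["city"] = city.group(1)
    return entities


def handle_get_weather(entities: Dict[str, str]) -> str:
    return f"Here is the forecast for {entities.get('city', 'your area')}."


def handle_set_timer(entities: Dict[str, str]) -> str:
    if "minutes" not in entities:
        return "For how many minutes?"
    return f"Timer set for {entities['minutes']} minutes."


def handle_none(entities: Dict[str, str]) -> str:
    return "Sorry, I can't help with that yet."


HANDLERS: Dict[str, Callable[[Dict[str, str]], str]] = {
    "GetWeather": handle_get_weather,
    "SetTimer": handle_set_timer,
    "None": handle_none,
}


def handle_audio(audio: str) -> Optional[str]:
    utterance = speech_to_text(audio)
    # Simplified: real devices typically spot the wake word on the audio itself.
    if not utterance.lower().startswith(WAKE_WORD):
        return None  # Not addressed to the assistant; ignore it.
    utterance = utterance[len(WAKE_WORD):].strip(" ,")
    intent = detect_intent(utterance)
    entities = extract_entities(utterance)
    reply = HANDLERS[intent](entities)
    return text_to_speech(reply)
```

With these stubs, `handle_audio("Hey assistant, what's the weather in Columbus?")` returns "<spoken>Here is the forecast for Columbus.</spoken>", and input without the wake word returns `None`.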

If you’re interested, I go into this process in significantly more depth in my How Chatbots Work article from earlier this year.

These conversational AI assistants remain relevant and already provide value. However, they are not necessarily driving revenue the way their organizations may have hoped, and they are a consistent focus of concern, mostly related to the always-on microphones that some devices use while listening for their wake words.

Additionally, these systems have some technical difficulties in a few key areas:

- It is hard to remember context from request to request and support longer conversations

- Dealing with nuance or tone in messages is harder for these systems

- Complex multi-part messages are difficult for these systems

Keep these limitations in mind because, as we’ll discuss later on, these are exactly the things ChatGPT is good at.

Web Help Agents

Seeing the success of conversational AI on consumer devices, a growing number of organizations have sought to integrate chat into their applications.

This is typically done for a number of different reasons:

- Helping users find what they’re looking for

- Reducing the impact on support by trying to resolve issues for users before support is involved

- Reducing the number of employees needed in the support department

- Increasing sales leads

- Attempting to look “modern”

The net result is that the majority of Software as a Service (SaaS) organizations now have some form of chatbot on their website that pesters you to interact with it.

This technology has also made it into the voice support channels with automated AI systems triaging incoming calls.

Frankly, these systems can be more annoying than helpful when they are deployed purely to cut costs. This in turn leaves the consumer world feeling like it’s falling deeper into a dystopian AI hellscape, as half-baked AI implementations act as gatekeepers to real help and support.

Incidentally, the phrase “dystopian AI hellscape” is not one I thought I’d write when I set out to create this piece, but here we are.

ChatGPT

ChatGPT was made publicly available in late November of 2022 and it changed what we thought was possible for conversational AI systems.

ChatGPT uses transformers trained on large bodies of text. Transformers are a relatively recent innovation in AI, introduced in 2017, about five years before this writing. During training, they give models a much greater awareness of how each piece of text relates to the words around it in sentences and paragraphs. This in turn allows the models to be effective when trained on much larger bodies of text.

As a result, transformer-based systems can generate convincing text, code, and even images from a textual prompt. Not only that, but they can pick up on contextual and nuanced cues across a longer conversation and respond effectively to multi-part requests and follow-up queries.

In short, transformers make ChatGPT really good at something that traditional conversational AI was really bad at: generating dynamic replies that reflect the user’s full question, tone, and the context of the conversation.
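
Much of that context-awareness comes from self-attention, the core operation inside transformers. Below is a minimal pure-Python sketch of scaled dot-product attention, softmax(QKᵀ/√d)V, on toy vectors. It is a teaching sketch, not how ChatGPT is implemented: the numbers are made up, and real models learn the query, key, and value projections and run many attention heads over far larger vectors.

```python
import math
from typing import List

Vector = List[float]


def softmax(scores: Vector) -> Vector:
    # Subtract the max for numerical stability before exponentiating.
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries: List[Vector], keys: List[Vector], values: List[Vector]) -> List[Vector]:
    """Scaled dot-product attention: each output is a weighted mix of all value vectors."""
    d = len(keys[0])
    outputs: List[Vector] = []
    for q in queries:
        # How strongly this token's query matches every token's key.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        # Blend the value vectors using those attention weights.
        mixed = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        outputs.append(mixed)
    return outputs
```

With identical keys every token gets equal weight, so `attention([[1.0, 0.0]], [[1.0, 0.0]] * 3, [[0.0, 0.0], [3.0, 3.0], [6.0, 6.0]])` averages the values to roughly `[[3.0, 3.0]]`. A query that matches one key much more strongly than the others pulls the output toward that key's value instead, which is how each word's representation comes to reflect the words that matter most around it.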

However, ChatGPT has some severe limitations:

- It is difficult to determine why ChatGPT responds the way it does or what specific sources it is using to reply to a question

- ChatGPT will confidently make mistakes, generate broken code, and exhibit other flaws without any awareness of its shortcomings

- ChatGPT does not integrate with external APIs or company-provided data sources

I expand upon many of these flaws (and others) in my longer article on What ChatGPT means for new devs.

Still, even with these severe limitations, the sudden popularity of ChatGPT and its success at generating compelling responses has changed the public expectations of conversational AI. This is now the bar that our systems must meet or exceed in the future.

The Ghost of Chatbots Present

Having covered the history of chatbots and how ChatGPT absolutely changes the expectations we have for chatbots going forward, let’s take a moment and explore the technologies currently available on Azure for chatbot development.

So let’s talk a bit more about what our current systems can do. While they are not as effective at generating dynamic content through the magic of transformers, our current systems are getting better at understanding the user’s intent.

Conversational Language Understanding

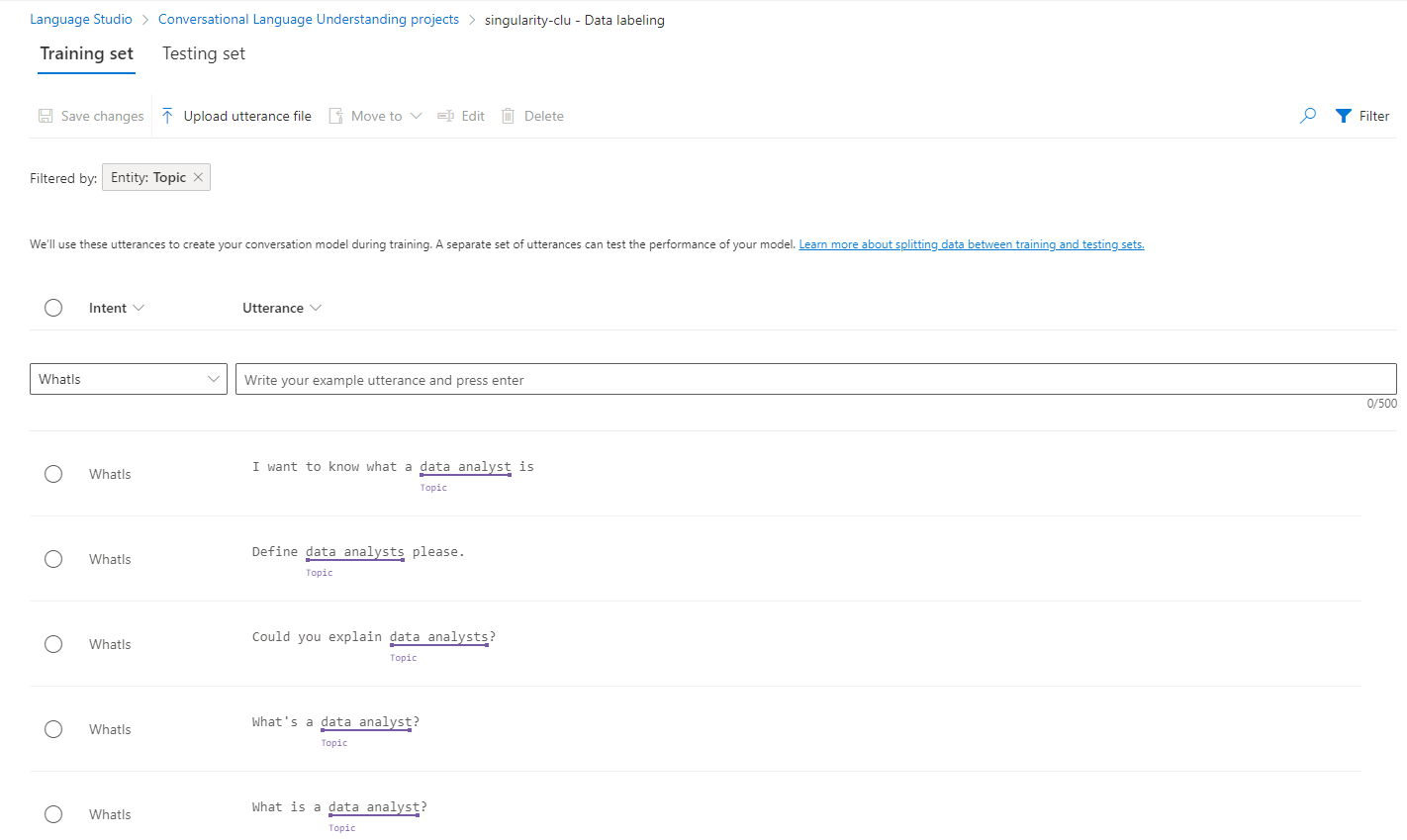

Conversational Language Understanding (CLU) is the successor to Azure’s Language Understanding (LUIS) service.

CLU is a pay-per-use service that can map a user’s utterance to a supported intent.

With CLU, you provide a number of sample utterances for each intent, and CLU then effectively acts as a multi-class classification model that assigns new utterances to one of the intents the system supports (including a “None” intent for statements that could not be mapped to a supported command).

CLU also supports pattern matching for extracting entities from utterances. These entities are typically people, places, dates / times, or topics of interest.

For example, let’s look at a sample utterance:

I would like to make a reservation for 7 PM at your downtown location

This utterance would likely map to a Make Reservation intent, but that intent would want to know a few pieces of information the user already provided in their message.

We can map a few things the user said into entities for this intent:

- 7 PM would be the date and time that we’d like the reservation (since no date was specified, today would be implied)

- downtown location would be a location entity that your app might support

If you register some utterances as having entities associated with them, these entities are automatically identified via CLU and passed on to your code that later responds to that intent.

This allows the code you write to prompt for entities that were not provided (e.g. “What time is this reservation for?") or respond intelligently to the ones that are present.
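
The entity-and-prompting flow above might look something like this in Python. This is not the CLU SDK or its response format: the `MakeReservation` handler, the time regex, and the list of locations are hypothetical stand-ins for entities CLU would return, and the point is only to show handler code responding to entities that are present and asking for ones that are missing.

```python
import re
from typing import Dict

# Hypothetical list entity: the locations this restaurant app supports.
LOCATIONS = ["downtown", "airport", "riverside"]
# Matches times such as "7 PM" or "8:30 pm".
TIME_PATTERN = re.compile(r"\b(\d{1,2}(?::\d{2})?\s*(?:AM|PM))\b", re.IGNORECASE)


def extract_reservation_entities(utterance: str) -> Dict[str, str]:
    """Pull a time and a known location out of the utterance, if present."""
    entities: Dict[str, str] = {}
    time_match = TIME_PATTERN.search(utterance)
    if time_match:
        entities["time"] = time_match.group(1).upper()
    lowered = utterance.lower()
    for location in LOCATIONS:
        if location in lowered:
            entities["location"] = location
            break
    return entities


def handle_make_reservation(utterance: str) -> str:
    """Respond to the MakeReservation intent, prompting for any missing entity."""
    entities = extract_reservation_entities(utterance)
    if "time" not in entities:
        return "What time is this reservation for?"
    if "location" not in entities:
        return "Which location would you like to book?"
    # No date entity was given, so assume today.
    return f"Booking a table at our {entities['location']} location for {entities['time']} today."
```

Given the sample utterance, `handle_make_reservation("I would like to make a reservation for 7 PM at your downtown location")` returns "Booking a table at our downtown location for 7 PM today.", while "Book a table downtown" gets back "What time is this reservation for?"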

CLU, and systems like it, do have a few weaknesses, however:

- You need to have a decent number of utterances already available for the intents you want to add

- Like any multi-class classification model, if you have a large imbalance in the number of utterances per intent, your app might not be as effective at identifying intents with few utterances

- Sometimes users might say complex things that might be appropriate to map to multiple intents

- Your application may falsely map an utterance to an intent it doesn’t belong to. I recently interacted with a system where I was requesting an E-Mail address to contact someone and the system proceeded to start a request to change my E-Mail address!

- With a pay-per-use model, I find myself less willing to write unit tests around code that integrates with services like CLU

Note: If cost is a factor, it is possible to emulate some of CLU’s capabilities by writing your own multi-class classification model. Take a look at my article on using ML.NET 2.0 to perform text classification for some ideas there.
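
As a rough illustration of that idea, in Python rather than ML.NET, here is a tiny multinomial Naive Bayes intent classifier written from scratch. The training utterances, intent names, and the confidence threshold used to fall back to a `None` intent are all hypothetical; a real model would need far more utterances per intent.

```python
import math
from collections import Counter, defaultdict
from typing import Dict, List, Set, Tuple


def tokenize(text: str) -> List[str]:
    return [t.strip(".,!?").lower() for t in text.split() if t.strip(".,!?")]


class NaiveBayesIntentClassifier:
    """Multinomial Naive Bayes with add-one smoothing over bag-of-words features."""

    def __init__(self, min_confidence: float = 0.6) -> None:
        self.min_confidence = min_confidence  # below this, answer "None"
        self.word_counts: Dict[str, Counter] = defaultdict(Counter)
        self.intent_counts: Counter = Counter()
        self.vocabulary: Set[str] = set()

    def train(self, examples: List[Tuple[str, str]]) -> None:
        for utterance, intent in examples:
            tokens = tokenize(utterance)
            self.intent_counts[intent] += 1
            self.word_counts[intent].update(tokens)
            self.vocabulary.update(tokens)

    def predict(self, utterance: str) -> Tuple[str, float]:
        tokens = [t for t in tokenize(utterance) if t in self.vocabulary]
        if not tokens:
            return "None", 0.0  # nothing we recognize at all
        total_examples = sum(self.intent_counts.values())
        vocab_size = len(self.vocabulary)
        log_scores: Dict[str, float] = {}
        for intent, count in self.intent_counts.items():
            words = self.word_counts[intent]
            total_words = sum(words.values())
            score = math.log(count / total_examples)  # log prior
            for token in tokens:
                score += math.log((words[token] + 1) / (total_words + vocab_size))
            log_scores[intent] = score
        # Convert log scores into normalized probabilities.
        top = max(log_scores.values())
        exp_scores = {i: math.exp(s - top) for i, s in log_scores.items()}
        total = sum(exp_scores.values())
        best = max(exp_scores, key=exp_scores.get)
        confidence = exp_scores[best] / total
        if confidence < self.min_confidence:
            return "None", confidence
        return best, confidence


TRAINING = [
    ("I want to book a table", "MakeReservation"),
    ("make a reservation for tonight", "MakeReservation"),
    ("reserve a table for two", "MakeReservation"),
    ("what are your opening hours", "GetHours"),
    ("when do you open", "GetHours"),
    ("what time do you close", "GetHours"),
]

classifier = NaiveBayesIntentClassifier(min_confidence=0.6)
classifier.train(TRAINING)
```

Trained on these six sample utterances, `classifier.predict("can I reserve a table for four")` picks `MakeReservation` with high confidence, an ambiguous "what table" falls below the threshold and comes back as `None`, and an utterance with no known words at all also returns `None`.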

Azure Cognitive Services

Azure Cognitive Services provide a few interesting AI-as-a-service offerings beyond CLU and LUIS that can be helpful for conversational AI:

- Speech to Text allows AI systems to translate spoken words to raw text in a variety of languages

- Text to Speech allows AI systems to speak to you in a human-like voice using neural voices in a variety of languages and dialects

- Language detection allows AI systems to recognize what language is being used

- Sentiment Analysis allows AI systems to determine if the words someone is using are likely conveying a positive, negative, or neutral sentiment

- Entity recognition allows AI systems to extract named locations, people, things, and events from text

- Key Phrase Extraction allows AI systems to summarize long text (like this article) into a set of key phrases

These APIs allow us to integrate AI capabilities into our applications with minimal effort. See my articles and videos on Azure Cognitive Services for more on what they can do.

Azure Cognitive Search

Azure Cognitive Search may seem out of place in an article on conversational AI, but I believe chatbots are often really a form of conversational search: you’re interacting with a virtual agent while looking for some piece of information or trying to accomplish some task.

Search can help with that. If a bot encounters something it is not programmed for, a possible solution would be to search a series of documents or other sources of information you’ve registered with Azure Cognitive Search and see what responses you get.

That way your bot can say “I’m not able to handle that request, but I found a few resources that may meet your needs” and list the top few results of an internal search.
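
A sketch of that fallback in Python follows. It does not use the Azure Cognitive Search SDK: a naive term-overlap ranking over an in-memory list stands in for a real search index, and the documents, stop-word list, and `fallback_reply` helper are hypothetical.

```python
from typing import List, Set, Tuple

# Hypothetical knowledge articles: (title, body text).
DOCUMENTS: List[Tuple[str, str]] = [
    ("Resetting your password", "Steps to reset a forgotten password from the login page."),
    ("Updating billing details", "How to change the credit card used for billing."),
    ("Exporting reports", "Export any report to CSV or PDF from the reports page."),
]

# Common words that should not count as a match.
STOP_WORDS: Set[str] = {"a", "an", "the", "to", "do", "i", "how", "my", "from",
                        "or", "for", "of", "any", "your", "can", "is"}


def _terms(text: str) -> Set[str]:
    return {word.strip(".,?!").lower() for word in text.split()} - STOP_WORDS - {""}


def search(query: str, top: int = 2) -> List[str]:
    """Rank documents by how many query terms they share; return matching titles."""
    query_terms = _terms(query)
    scored = []
    for title, body in DOCUMENTS:
        overlap = len(query_terms & _terms(title + " " + body))
        if overlap:
            scored.append((overlap, title))
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [title for _, title in scored[:top]]


def fallback_reply(utterance: str) -> str:
    """Called when no intent matched: offer search results instead of a dead end."""
    results = search(utterance)
    if not results:
        return "I'm not able to handle that request, and I couldn't find anything related."
    lines = "\n".join(f"- {title}" for title in results)
    return ("I'm not able to handle that request, but I found a few resources "
            "that may meet your needs:\n" + lines)
```

For instance, "How do I export a report to PDF?" surfaces only "Exporting reports", while a question that shares no terms with any document gets the no-results message. Swapping `search` for a call to a real index would leave `fallback_reply` unchanged.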

Azure Cognitive Search is more than just search in that it can extract meaning from PDFs, images, and other encoded forms of data. This allows it to transform the documents it searches to make them more relevant and searchable.

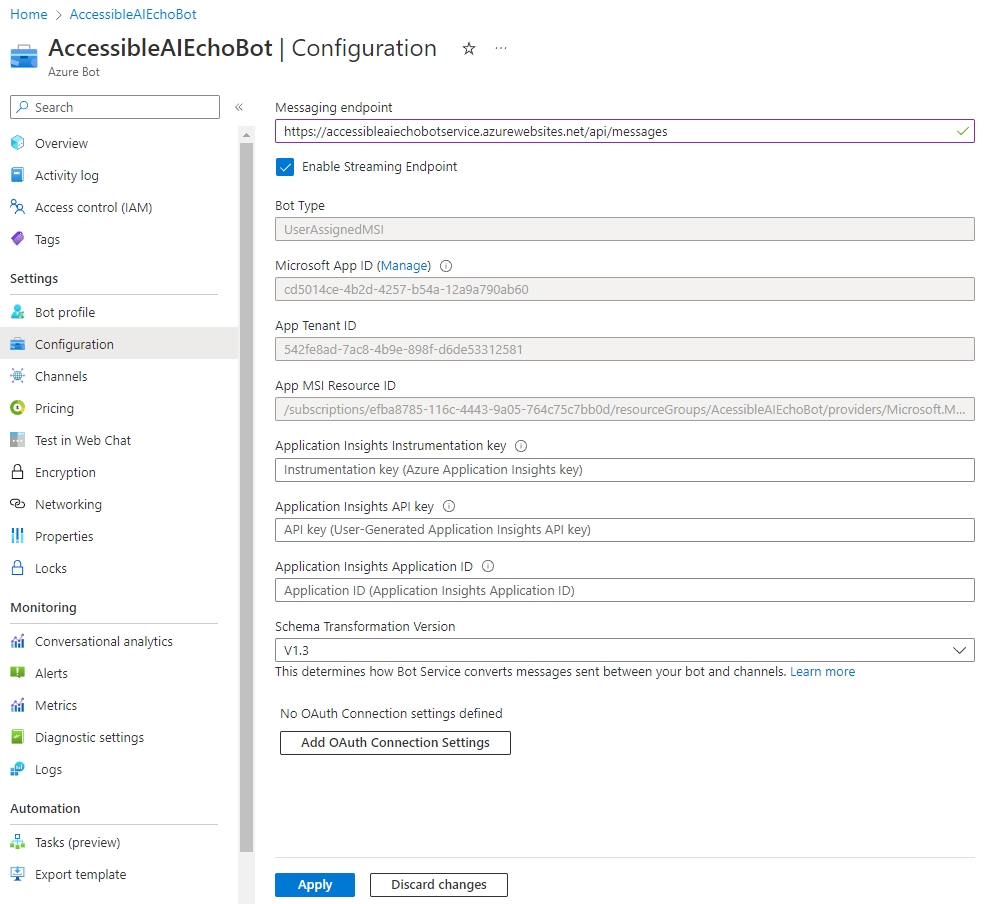

Azure Bot Services

Azure Bot Service is a way of integrating your chatbot into Microsoft’s multi-channel bot interaction ecosystem. This allows your chatbot to connect to various channels, including text, voice, Slack, Teams, web user interfaces, and Amazon Alexa skills.

An Azure Bot Service is not an application in and of itself, but a registration that allows a bot hosted at an endpoint you configure to communicate via whichever of these channels you enable.

Typically, if you are developing a bot using Azure Bot Service, you will use either Microsoft Bot Framework or Bot Framework Composer. The resulting bot is then deployed as an App Service, and your Azure Bot Service integrates with it by pointing at a specific endpoint.

Microsoft Bot Framework

Microsoft Bot Framework is an SDK for integrating with Azure Bot Service.

Bot Framework lets you write what is effectively a Web API application that takes in requests and generates responses to the user however you’d like.

Typically Bot Framework applications will take advantage of Conversational Language Understanding, Azure Cognitive Search, external APIs, and your organization’s code and data sources.

These applications can be complex to set up and configure, which is why I have a dedicated article on setting up a Bot Framework app in C#. However, this complexity gives you a lot of power and control in responding to requests.

While contextual information is available in Microsoft Bot Framework, any carry-over of context from message to message must be coded by the developer.
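
Here is a minimal Python sketch of what carrying context forward can involve. It does not use Bot Framework’s API (the SDK has its own state-management classes for this); instead, a plain dictionary keyed by a hypothetical conversation ID remembers the last intent and its entities, so a follow-up like “make it 8 PM instead” updates the earlier reservation. The keyword-based intent check and follow-up handling are simplified assumptions.

```python
import re
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class ConversationContext:
    last_intent: str = ""
    entities: Dict[str, str] = field(default_factory=dict)


# In-memory store keyed by conversation ID; a real bot would persist this.
_contexts: Dict[str, ConversationContext] = {}

TIME_PATTERN = re.compile(r"\b(\d{1,2}(?::\d{2})?\s*(?:AM|PM))\b", re.IGNORECASE)


def on_message(conversation_id: str, text: str) -> str:
    context = _contexts.setdefault(conversation_id, ConversationContext())
    lowered = text.lower()

    if "reservation" in lowered:
        # A new request: start the intent over with no remembered entities.
        context.last_intent = "MakeReservation"
        context.entities = {}
    elif context.last_intent != "MakeReservation":
        return "Sorry, I didn't understand that."
    # Otherwise, treat the message as a follow-up to the earlier reservation.

    time_match = TIME_PATTERN.search(text)
    if time_match:
        context.entities["time"] = time_match.group(1).upper()
    if "time" not in context.entities:
        return "What time is this reservation for?"
    return f"Your reservation is set for {context.entities['time']}."
```

Within one conversation, "I'd like to make a reservation" prompts for a time, "7 PM please" sets it, and "Actually, make it 8 PM instead" updates it to 8 PM. The same "8 PM" sent in a fresh conversation has no prior intent to attach to and is not understood.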

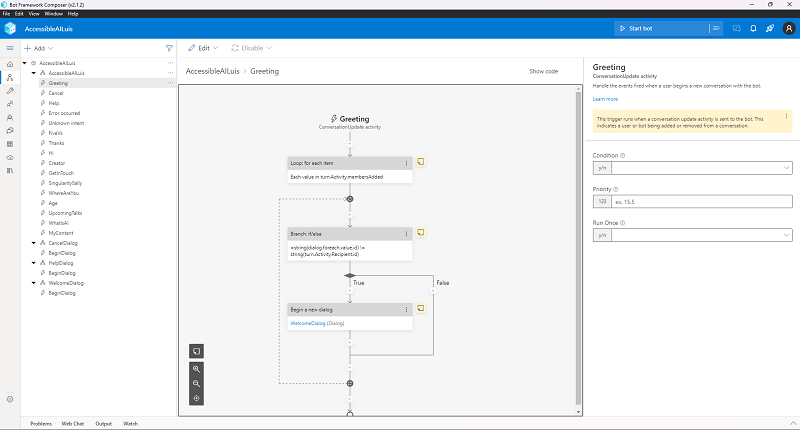

Bot Framework Composer

Most of the time organizations want to offer a chatbot and have someone other than a developer control how it reacts to statements.

Microsoft understands the complexity involved in creating chatbots and so gives us the Bot Framework Composer to help simplify this process.

Bot Framework Composer is a graphical user interface to create and test chatbots using flowcharts with different series of nodes per intent that your application supports.

Bot Framework Composer bots are compatible with Azure Bot Service and can be deployed to Azure the same way a Bot Framework bot can.

Unfortunately, my experience with Bot Framework Composer has been largely negative. It often doesn’t support the things I need it to (requiring custom code), and I’ve encountered a large number of errors attempting to provision or even deploy applications. Support was largely unhelpful with these issues as well: in August they informed me that there were open issues for the problems I’d reported, and when they checked in with me in early December, they declined to assist further.

As a result, I view Bot Framework Composer as a promising sign of what’s to come, but perhaps not something that is truly enterprise-ready at the moment.

Question Answering

Because writing chatbots can be difficult, Microsoft provides the Question Answering service. Question Answering is a successor to the old QnA Maker service much like CLU now replaces LUIS.

Question Answering lets you provide knowledge base or frequently asked question (FAQ) documents to Azure. These documents are indexed via Azure Cognitive Search, and questions about them can then be answered through conversational workflows using CLU.

Once you have a knowledge base you’re satisfied with, Question Answering then lets you deploy your trained bot to Azure Bot Service for users to interact with it.

This makes Question Answering a great way of getting started with a conversational AI system, but one that isn’t going to be able to respond to anything dynamic or integrate external data sources.

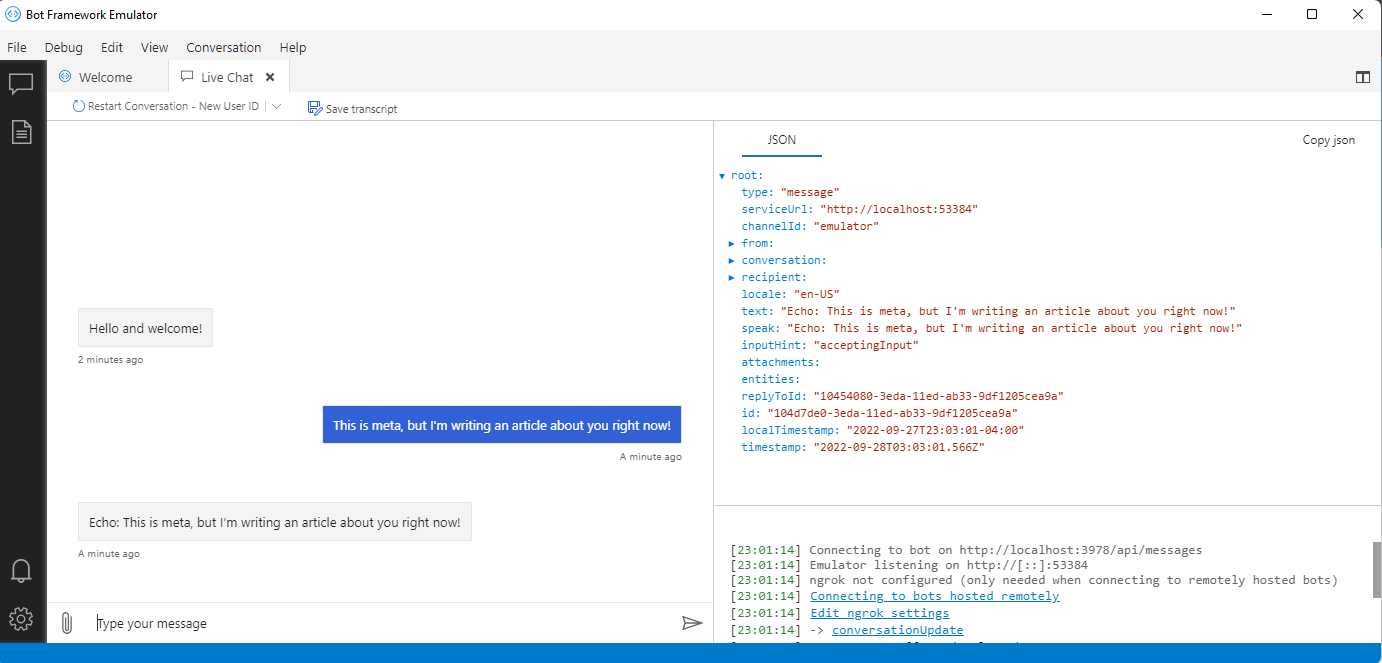

Bot Framework Emulator

One of the really interesting things about chatbots on Azure is the Bot Framework Emulator tool that lets you test a chatbot locally and get detailed information about the request the bot received and the response the bot generated.

This tool is invaluable for testing and debugging your applications and I strongly recommend it.

The Ghost of Chatbots Future: Where Conversational AI is Heading

So we’ve now seen a history of conversational AI systems and a rich set of current offerings. Let’s talk about where I believe all of this is heading.

First, ChatGPT changes everything. Bots that are unaware of conversational context or unable to handle nuanced messages are suddenly a lot less acceptable to the general user, now that users have seen what transformer-based systems like ChatGPT can do.

However, these transformer-based systems are not trained on your organization’s data, may be trained on data you don’t want responses drawn from (potentially including competitors’ materials or copyrighted materials, despite claims to the contrary), and may completely misunderstand your users’ true needs.

Current language understanding systems work well, are controllable, and map user inputs to things the bot can understand and accomplish, but may miss nuance from users and struggle with the larger context of a conversation.

I believe that these systems should eventually merge. I believe that traditional conversational AI systems can potentially take advantage of transformers to generate rich responses to user messages.

I believe that transformer-based models will eventually gain the ability to cite the sources used in generating their end results. I also believe we will innovate new ways of controlling and constraining these systems to produce responses more in line with organizational needs and goals.

OpenAI, the organization responsible for ChatGPT, DALL-E, and other models, is already integrating into Azure via the Azure OpenAI Service. I believe it is only a matter of time before OpenAI’s offerings merge more and more into the rest of Azure Cognitive Services, and specifically into conversational language understanding.

Closing Thoughts

So this is the shape of what was, what is, and what I hope is soon to come.

Keep in mind that transformers are only about 5 years old, whereas image classification, the core innovation that powers self-driving cars, is roughly 25 years old. While conversational AI has been around since ELIZA in the 1960s, it truly feels like we’re back on the frontier, where innovations may come suddenly and iterate rapidly.

Our old way of doing things, mapping utterances to intents and replying with the same piece of text for every intent, is no longer cutting edge, and users will notice. However, the intent mapping approach is not dead; rather, it has real opportunities to integrate with the exciting and powerful transformer-based way of doing things.

I’m personally very excited to see where things will go and still plan on developing some traditional conversational AI applications in the new year. I hope you’ll join me.