How Chatbots Work

Understanding Conversational AI Systems without a PhD

Chatbots like Alexa and Siri are not magic, nor are they powered by a human-like supercomputer in a research lab. Instead, conversational AI uses a series of clever algorithms to understand, process, and respond intelligently to user commands.

In this article, we’ll take a look at the high-level parts that make up a conversational AI agent so that you can understand the different components in such a system, how they interact, and the special design, development, testing, and maintenance considerations that these innovative systems raise.

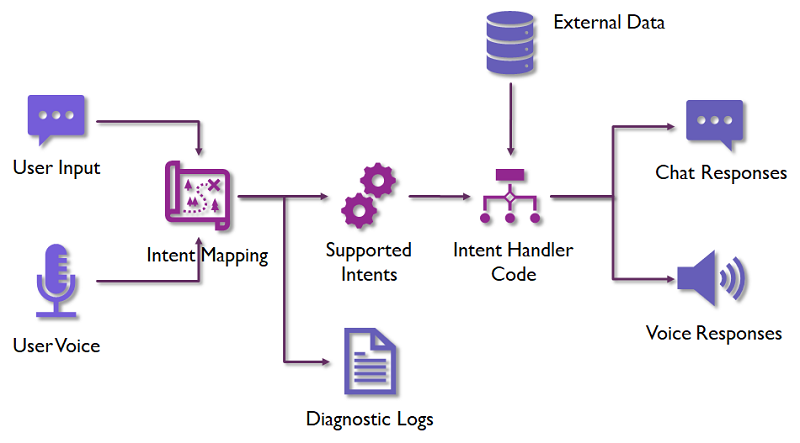

Parts of a Chatbot

Chatbots are large and intimidating systems, but they can be broken down into individual components.

Here are a number of layers or capabilities that are common to most chatbots:

- Channels such as text, voice, Slack, and Teams for users to interact with the bot

- A set of supported intents that have been built into the bot by developers

- A way of mapping the user’s input to the most likely intent based on their utterance

- Ways of providing additional context or data to these commands

- Optional databases or APIs that can give live data to supported commands that need it (e.g. weather data)

- Language generation capabilities to compose the bot’s replies

- Methods of responding to the user with visuals, spoken words, plain text, or a combination of these

- Diagnostic components to help improve the bot over time

Each of these tasks is solvable with a narrow branch of artificial intelligence, but together they achieve something more.

Getting Input through Speech and Text

We use speech recognition (also called speech-to-text) to convert spoken commands to an AI system into a string of text. The system will need to understand people of a variety of ages, genders, ethnicities, and speech patterns.

Of course, speech recognition only applies to systems where a user can talk to the AI agent. In most conversational AI systems, interactions are accomplished in text, with the user typing in words. This carries its own share of obstacles, as people often use different conversational patterns and abbreviations when texting or sending direct messages. Systems supporting text input carry a heavier burden in the later interpretation stage because they must handle the shorthand, typos, emojis, and spelling and grammatical errors a user might type in.
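As a rough illustration of that extra interpretation work, a bot might normalize typed input before trying to match it. This sketch lowercases the text, keeps only word-like tokens (which drops emoji and punctuation), and expands a small, hypothetical table of texting shorthand; genuine typos need fuzzier matching than a lookup table:

```python
import re

# Hypothetical shorthand table; a real bot would grow this from logged utterances.
SHORTHAND = {"u": "you", "r": "are", "pls": "please", "tmrw": "tomorrow", "2nite": "tonight"}


def normalize(text: str) -> str:
    """Lowercase, keep only word-like tokens (dropping emoji and punctuation),
    and expand known texting shorthand."""
    words = re.findall(r"[\w']+", text.lower())
    return " ".join(SHORTHAND.get(word, word) for word in words)


print(normalize("Will it rain 2nite?? ☔ pls tell me"))
```

Because `\w` matches Unicode letters, accented words such as “café” survive normalization, while symbols such as ☔ are discarded.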

Additionally, on some chat channels it is possible to send links, pictures, or other attachments, and conversational AI agents must be able to respond appropriately. Fortunately, telling users these actions are not supported and redirecting them to supported workflows is often sufficient.

Mapping Utterances to Intents

Next, the system needs to match the user’s words to some known text associated with a command the system can perform. In conversational AI terminology, we refer to the user’s words as the utterance and the supported commands as intents. Since there are many different things a user could type or say, many different utterances should map to a single intent.

There are a number of different techniques for matching utterances to intents, but all of them fall under the umbrella of natural language processing (NLP). One popular technique involves training a multi-class classification machine learning model. This offers a wide degree of flexibility but requires a sizable amount of training data. Another approach is to use text comparison metrics such as Levenshtein distance.
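To make the Levenshtein approach concrete, here is a minimal Python sketch. The intent names and example utterances are hypothetical, and the distance is computed with the standard dynamic-programming recurrence rather than any particular library:

```python
def levenshtein(a: str, b: str) -> int:
    """Minimum number of single-character insertions, deletions, or
    substitutions needed to turn `a` into `b`."""
    prev = list(range(len(b) + 1))  # distances from "" to each prefix of b
    for i, ca in enumerate(a, start=1):
        curr = [i]  # distance from a[:i] to ""
        for j, cb in enumerate(b, start=1):
            curr.append(min(
                prev[j] + 1,               # delete ca
                curr[j - 1] + 1,           # insert cb
                prev[j - 1] + (ca != cb),  # substitute (free when equal)
            ))
        prev = curr
    return prev[-1]


# Hypothetical intents, each with a few example utterances.
EXAMPLES = {
    "RainForecast": ["will it rain today", "should i bring an umbrella"],
    "BookReservation": ["book a table", "make a reservation"],
}


def closest_intent(utterance: str) -> tuple[str, int]:
    """Return the intent whose example is closest to the utterance, plus that distance."""
    text = utterance.lower().strip()
    return min(
        ((intent, levenshtein(text, example))
         for intent, examples in EXAMPLES.items()
         for example in examples),
        key=lambda pair: pair[1],
    )
```

Raw edit distance favors short examples, so a real bot would likely normalize the distance by utterance length or turn it into a confidence score before comparing intents.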

Chatbots typically evaluate an utterance against multiple intents and compute a confidence score for each one, representing how likely it is that the user meant that intent. If the highest confidence score is over a threshold, that intent is executed. If no intent has a sufficient confidence score, the system typically maps the utterance to a None intent, which tells the user that the bot is confused and doesn’t know how to respond.
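The threshold logic can be sketched in a few lines. This assumes the language understanding layer has already produced a score between 0 and 1 for each intent; the 0.6 threshold and the intent names are illustrative assumptions, not recommended values:

```python
from dataclasses import dataclass


@dataclass
class IntentResult:
    intent: str
    confidence: float


def resolve_intent(scores: dict[str, float], threshold: float = 0.6) -> IntentResult:
    """Pick the highest-scoring intent, or fall back to "None" when nothing is confident enough."""
    if not scores:
        return IntentResult("None", 0.0)
    best_intent, best_score = max(scores.items(), key=lambda item: item[1])
    if best_score >= threshold:
        return IntentResult(best_intent, best_score)
    # Nothing cleared the bar: route to the None intent so the bot can admit confusion.
    return IntentResult("None", best_score)
```

Tuning the threshold is a trade-off: set it too high and users hit the None intent constantly; set it too low and the bot confidently runs the wrong intent.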

It’s worth noting that with machine learning in general there is typically a lot of “noise” or areas where the system may guess and get things wrong. If you’ve ever talked to Alexa or Siri and watched in horror as she started executing an intent other than what you wanted, you’ve witnessed this firsthand.

Entities and Dialogs

Once an intent is recognized, additional context is sometimes needed such as prior conversation history, variables associated with the user, and specific entities contained in the utterance such as a time or location.

For example, the utterance “Book a reservation at the concert hall for next Monday” might have entities for “concert hall” and “next Monday” that could be provided to custom code to handle booking the reservation.
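Below is one way the recognized intent and its entities might be handed to custom code. The `RecognizedUtterance` type, the `Venue` and `Date` entity names, and the rule that “next Monday” means the first Monday strictly after today are all assumptions made for this sketch; real language services return their own structures and may resolve dates for you:

```python
from dataclasses import dataclass, field
from datetime import date, timedelta


@dataclass
class RecognizedUtterance:
    """What the language understanding layer might hand to custom code (hypothetical shape)."""
    text: str
    intent: str
    entities: dict[str, str] = field(default_factory=dict)


def resolve_next_weekday(phrase: str, today: date) -> date:
    """Turn a phrase like "next Monday" into a concrete date.

    Assumption: "next <weekday>" means the first such day strictly after today.
    """
    weekdays = ["monday", "tuesday", "wednesday", "thursday",
                "friday", "saturday", "sunday"]
    target = weekdays.index(phrase.lower().removeprefix("next ").strip())
    days_ahead = (target - today.weekday()) % 7 or 7  # 0 would mean today, so jump a week
    return today + timedelta(days=days_ahead)


def handle_book_reservation(utterance: RecognizedUtterance, today: date) -> str:
    venue = utterance.entities.get("Venue", "the venue")
    when = resolve_next_weekday(utterance.entities["Date"], today)
    return f"Booking a reservation at {venue} for {when:%A, %B %d}."
```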

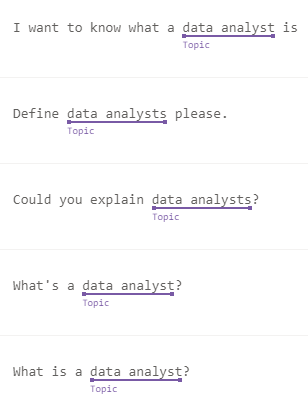

The image below illustrates a set of related utterances with a Topic entity tagged in each of them in Azure Language Studio:

Notice that the phrasing and position of entities may differ between utterances, depending on how users structure their sentences.

Sometimes chatbots will need to use a series of interactions in a guided workflow to accomplish a larger task. These interactions are parts of larger dialogs. For example, when reserving a spot, a system might need to know the date, time, location, and number of people to reserve tickets for. This could require multiple prompts to the user, and the bot must interpret each answer while still holding on to the larger context of the conversation.
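A common way to implement such a dialog is slot filling: keep a record of which pieces of information are still missing and prompt for them one at a time. The following is a minimal sketch with hypothetical slot names and prompts. It stores each answer verbatim, whereas a real bot would run every reply back through language understanding to validate it:

```python
from dataclasses import dataclass, field
from typing import Optional

# Hypothetical slots the reservation dialog must fill, in the order we ask for them.
REQUIRED_SLOTS = {
    "date": "What date would you like?",
    "time": "What time works for you?",
    "location": "Which location?",
    "party_size": "How many people are coming?",
}


@dataclass
class ReservationDialog:
    """Keeps the larger context (already-filled slots) across several turns."""
    slots: dict[str, str] = field(default_factory=dict)

    def next_prompt(self) -> Optional[str]:
        """Return the question for the first unfilled slot, or None when done."""
        for name, prompt in REQUIRED_SLOTS.items():
            if name not in self.slots:
                return prompt
        return None

    def answer(self, value: str) -> str:
        """Store the reply in the slot we just asked about, then ask the next question."""
        for name in REQUIRED_SLOTS:
            if name not in self.slots:
                self.slots[name] = value.strip()
                break
        prompt = self.next_prompt()
        if prompt is not None:
            return prompt
        s = self.slots
        return (f"Reserving {s['location']} on {s['date']} at {s['time']} "
                f"for {s['party_size']} people.")
```

Because entities recognized in the first utterance can pre-fill slots, a user who says “Book the concert hall for next Monday” is only asked for the time and party size.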

Language Generation

Intents that are frequently executed can quickly start to feel robotic if a system gives the exact same response every time. Instead, language generation can be used to build dynamic responses to users and even incorporate aspects of their original utterance back into the response.

At its most basic, language generation could be as simple as rotating responses to common queries so users don’t see the same response too often. However, more complex language generation could respond to the emotional sentiment or tone behind the user’s original statement, or phrase the response to fit more naturally into the flow of conversation.
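Rotating responses can be as simple as cycling through a list of phrasings. This sketch uses `itertools.cycle` and a hypothetical set of greeting replies:

```python
from itertools import cycle

# Hypothetical canned replies for a Greeting intent.
GREETINGS = [
    "Hi there! What can I help you with?",
    "Hello! What would you like to do today?",
    "Hey! How can I help?",
]


class RotatingResponder:
    """Cycles through a fixed list of phrasings so repeat users see some variety."""

    def __init__(self, responses: list[str]) -> None:
        if not responses:
            raise ValueError("need at least one response")
        self._responses = cycle(responses)

    def respond(self) -> str:
        return next(self._responses)
```

Cycling guarantees the same phrasing never appears twice in a row (given at least two options); picking with `random.choice` feels less predictable but can repeat.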

For example, given a user prompt of “Should I bring my umbrella tonight?” that was mapped to a RainForecast intent, the following would be a perfectly valid response:

“At 2 AM there is a 15% chance of precipitation for 10 minutes. Tomorrow expect overcast clouds and an 80% chance of rain.”

This answers the question, but the user has to do some digging to map the response to their question. A better response with more advanced language generation would be the following:

“You probably won’t need an umbrella unless you’ll be out at 2 AM, when there’s a slight chance of a brief shower. Tomorrow you should definitely bring an umbrella since it’s 80% likely to rain.”

Note that this response uses exactly the same information; it just takes a lot more programming to understand the user’s original intent and respond in a way that addresses that need.
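One way to get from the raw forecast to the friendlier answer is to branch on the data the user actually cares about. The sketch below is deliberately simple: `RainPeriod` is a hypothetical structure rather than a real weather API response, and the 50% and 25% cutoffs are arbitrary assumptions:

```python
from dataclasses import dataclass


@dataclass
class RainPeriod:
    """One forecast window (a hypothetical structure, not a real weather API response)."""
    label: str           # how to refer to the window, e.g. "at 2 AM" or "tomorrow"
    chance: int          # probability of rain, in percent
    brief: bool = False  # True if any rain is expected to be short-lived


def umbrella_advice(tonight: RainPeriod, tomorrow: RainPeriod, likely: int = 50) -> str:
    """Answer the user's actual question instead of reciting the raw forecast."""
    if tonight.chance >= likely:
        first = "Yes, bring an umbrella tonight"
    else:
        odds = "a slight chance" if tonight.chance < 25 else "some chance"
        rain = "a brief shower" if tonight.brief else "rain"
        first = (f"You probably won't need an umbrella unless you'll be out "
                 f"{tonight.label}, when there's {odds} of {rain}")
    if tomorrow.chance >= likely:
        second = (f"{tomorrow.label.capitalize()} you should definitely bring one "
                  f"since it's {tomorrow.chance}% likely to rain")
    else:
        second = f"{tomorrow.label.capitalize()} looks mostly dry"
    return f"{first}. {second}."
```

Fed the same forecast as the example above (`RainPeriod("at 2 AM", 15, brief=True)` and `RainPeriod("tomorrow", 80)`), this produces essentially the friendlier reply.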

Responding to the User

Finally, let’s talk about how systems respond to users.

Once we have raw text that a user should receive back, this information needs to be transmitted back to the active conversation in a reliable manner so that the user gets a reply to their query. Chatbot processing typically happens over the internet with an external application receiving requests via an API and sending responses back to the device or application that asked the question.
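As a rough illustration of that request/response cycle, here is a minimal HTTP endpoint built on Python’s standard `http.server` module. The `{"text": ...}` request and `{"reply": ...}` response shapes are hypothetical (each real channel defines its own message format), and the echo reply stands in for the language understanding and generation steps:

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer


def handle_message(body: bytes) -> bytes:
    """Turn an incoming JSON message into a JSON reply (hypothetical message format)."""
    message = json.loads(body)
    text = message.get("text", "").strip()
    # Placeholder for intent resolution and language generation.
    reply = f"You said: {text}" if text else "Sorry, I didn't catch that."
    return json.dumps({"reply": reply}).encode("utf-8")


class BotHandler(BaseHTTPRequestHandler):
    def do_POST(self) -> None:
        length = int(self.headers.get("Content-Length", 0))
        payload = handle_message(self.rfile.read(length))
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(payload)))
        self.end_headers()
        self.wfile.write(payload)


def run(port: int = 8080) -> None:
    """Serve on localhost until interrupted; POST {"text": "hello"} to any path."""
    HTTPServer(("localhost", port), BotHandler).serve_forever()
```

Calling `run()` starts the server. A production bot would more likely sit behind a bot framework or hosted service that also handles authentication and channel-specific formats.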

For applications that must speak aloud to the user, speech synthesis (text-to-speech) is used to deliver the response. This is a delicate area, as speech synthesis has historically sounded robotic, but we’ve made a surprising amount of progress.

Notably, Microsoft Azure has a wide range of text-to-speech voices of different genders and ethnicities. Additionally, Azure offers a number of neural voice profiles which use deep learning to generate more human-like speech patterns.

Once a user has received their reply, they are free to make a subsequent request and start the cycle all over again.

Media and Multi-Channel Support

For systems that aren’t limited to voice, text can often be enhanced with formatting through Markdown or other formats, and responses can be accompanied by visuals. For example, the Echo Show displays a weather forecast on the screen while responding with a voice summary of the weather conditions.

This approach enhances the user’s experience of interacting with the bot, though too many visuals can be distracting and take away from the flow of conversation.

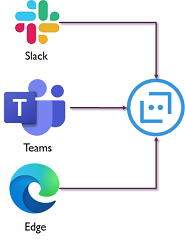

Speaking of channels, it is becoming more common for organizations to build chatbots that target multiple platforms. For example, the same bot code could serve a bot embedded in a web page as well as a bot you chat with via a platform like Slack, Teams, or Meta’s Messenger. Bots can even be exposed through a REST API or through voice channels such as a telephone line.
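One common way to structure multi-channel support is to keep the bot’s logic channel-agnostic and put a thin adapter in front of each channel. The adapters and payload shapes below are hypothetical simplifications, not the actual Slack, Teams, or telephony message formats:

```python
from typing import Protocol


class Channel(Protocol):
    """Anything that can turn the bot's plain reply into a channel-specific payload."""
    def render(self, reply: str) -> dict: ...


class WebChatChannel:
    def render(self, reply: str) -> dict:
        return {"type": "message", "text": reply}


class VoiceChannel:
    def render(self, reply: str) -> dict:
        # A telephone or smart-speaker channel would pass this on to text-to-speech.
        return {"speak": reply}


def core_bot(utterance: str) -> str:
    """Channel-agnostic logic shared by every channel (placeholder)."""
    if "rain" in utterance.lower():
        return "It's 80% likely to rain tomorrow."
    return "Sorry, I'm not sure."


def handle(utterance: str, channel: Channel) -> dict:
    return channel.render(core_bot(utterance))
```

Adding a channel then means writing one more small adapter rather than touching intent handling.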

There is a wide variety of channels a bot might support, but the specific channels available will vary with the technologies you use.

Other Considerations for AI Systems

While we’ve covered the primary components of chatbots and how these fit together, there are some less visible aspects of conversational AI that we should cover.

Developing a chatbot is hard (trust me, I’m building a few at the moment!) and it requires special considerations in some areas compared to the development of traditional applications.

Planning a Chatbot

Planning out a chatbot is all about defining scope, boundaries of the system, and the overall user experience. You must decide whether the bot will allow the user to query over a broad range of topics with a great degree of freedom or whether it should be narrowly focused on specific tasks and workflows such as ordering food or opening a support ticket.

Defining a list of supported intents and identifying the sequential dialogs the bot may perform will be key to defining the scope. You’ll also need to determine what information, if any, the bot will need to track about the user from request to request. For example, do you need a customer ID, or do you need to know whether the user is from a specific state? If the answer is yes, you’ll need to factor these variables into many requests.

You’ll also need to decide on the bot’s personality and tone, and on how it should phrase things, particularly when asked to do things it does not support or cannot understand.

Finally, writing some sample transcripts for each feature you’re developing is likely a good idea. These sample utterances and replies can be used directly to fuel development and can make alternative interactions more apparent to the development team.

Logging Chatbot Behavior

One of the things you will want to strongly consider is logging the utterances sent to your bot. Because there are so many ways of phrasing things in any language, it’s impossible to program your bot with every phrase your users may think of typing in. Knowing what people are asking your bot can therefore help you identify weak areas in its ability to map utterances to intents.

Additionally, if people commonly ask your bot for features you don’t support, you may want to add support for those features in a subsequent release.

Obviously there are big privacy concerns here and you’ll want to anonymize data wherever possible and inform users that the things they’re typing into the bot may be reviewed by the development team.

This privacy factor is critically important if users communicate with your bot via voice. It can be very easy for people to interpret “We store the text you say to our bot” as “We record all audio from the microphone”. The former is often viewed as fairly acceptable or common sense, but the latter is interpreted as a huge breach of privacy.
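On the logging side, privacy-conscious logging might look something like the sketch below: the user ID is replaced with a keyed hash (so one user’s requests can still be grouped together) and obvious personal data is masked with regular expressions. The patterns and field names are assumptions, and a keyed hash is pseudonymization rather than true anonymization:

```python
import hashlib
import hmac
import json
import re

# Rough patterns for obvious personal data; real redaction needs much more than this.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
LONG_NUMBER = re.compile(r"\d{6,}")  # long digit runs, e.g. account or unformatted phone numbers


def anonymize(text: str) -> str:
    """Mask e-mail addresses and long digit runs in an utterance."""
    text = EMAIL.sub("<email>", text)
    return LONG_NUMBER.sub("<number>", text)


def log_record(user_id: str, utterance: str, intent: str,
               confidence: float, key: bytes) -> str:
    """Build one JSON log line that keeps the utterance useful but drops direct identifiers."""
    pseudonym = hmac.new(key, user_id.encode("utf-8"), hashlib.sha256).hexdigest()[:16]
    return json.dumps({
        "user": pseudonym,  # stable per user, but not the raw ID
        "utterance": anonymize(utterance),
        "intent": intent,
        "confidence": round(confidence, 2),
    })
```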

Testing Chatbots

Testing chatbots is hard because there are so many things you could say. However, if you have been logging interactions with your bots, you can take some of the actual utterances your bot has encountered and use them to test your bot in manual or automated test conditions.

It is critically important to have consistent and repeatable automated tests for the language understanding layer of your chatbot. As chatbot projects progress, the number of utterances and intents grows over time. This makes it harder to manually test all features of a bot when making changes.

Due to the nature of machine learning models and different utterances competing for attention, you may discover over time that an utterance that once cleanly mapped to a correct intent now maps instead to a new intent due to a new utterance you added.

Because of this, it is important to provide validation data when evaluating machine learning model performance to see what intents it has trouble recognizing. Adding additional utterances to those intents can help with this, but may also cause problems with intent resolution for other intents and utterances, so testing and tweaking chatbots is an iterative process.
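Per-intent results on a validation set make those trouble spots visible. The sketch below assumes you already have `(expected_intent, predicted_intent)` pairs from running held-out utterances through your model, and reports the fraction each intent gets right along with what it is most often mistaken for:

```python
from collections import Counter, defaultdict


def per_intent_report(pairs: list[tuple[str, str]]) -> dict[str, dict]:
    """Summarize (expected_intent, predicted_intent) pairs from a validation set.

    For each expected intent, returns its recall (share resolved correctly)
    and the intents it was confused with, most frequent first.
    """
    totals = Counter(expected for expected, _ in pairs)
    correct = Counter(expected for expected, predicted in pairs if expected == predicted)
    confusions: dict[str, Counter] = defaultdict(Counter)
    for expected, predicted in pairs:
        if expected != predicted:
            confusions[expected][predicted] += 1
    return {
        intent: {
            "recall": correct[intent] / totals[intent],
            "confused_with": confusions[intent].most_common(),
        }
        for intent in totals
    }
```

Intents with low recall are the ones that need more (or better) example utterances, and the `confused_with` list shows which neighboring intent to retest afterwards.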

It is my strong belief that any chatbot platform should be built with a strong layer of test-driven development for matching utterances to intents, and there should be a growing number of automated tests around different utterances and intents. As a result, you should strive for a conversational AI platform that is cost-effective for automated testing.
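A regression suite of this kind can be as simple as a table of logged utterances and the intents they must keep resolving to. In this sketch, a hypothetical `resolve` function uses `difflib.get_close_matches` from the standard library as a stand-in for whatever intent resolver your bot really uses, so the tests run locally and for free:

```python
import difflib
import unittest

# Hypothetical training examples mapped to their intents.
EXAMPLES = {
    "will it rain today": "RainForecast",
    "should i bring an umbrella": "RainForecast",
    "book a table for tonight": "BookReservation",
    "make a reservation": "BookReservation",
}


def resolve(utterance: str) -> str:
    """Map an utterance to the intent of its most similar example, or "None"."""
    match = difflib.get_close_matches(utterance.lower(), EXAMPLES, n=1, cutoff=0.6)
    return EXAMPLES[match[0]] if match else "None"


# Utterances collected from logs, with the intent each one must keep resolving to.
REGRESSION_CASES = [
    ("Will it rain today?", "RainForecast"),
    ("should I bring my umbrella", "RainForecast"),
    ("book a table tonight", "BookReservation"),
    ("what's the meaning of life", "None"),
]


class IntentRegressionTests(unittest.TestCase):
    def test_known_utterances(self) -> None:
        for utterance, expected in REGRESSION_CASES:
            with self.subTest(utterance=utterance):
                self.assertEqual(resolve(utterance), expected)
```

Save it as, say, `test_intents.py` and run `python -m unittest test_intents`. Each case reports separately thanks to `subTest`, so one regression doesn’t hide the others.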

As a practical example, I love Azure Cognitive Services and the suite of capabilities it offers, but it costs me about a tenth of a cent every time I use conversational language understanding to resolve an utterance to an intent. While this is a trivial amount of money, once I put it in an automated test suite, pretty soon I’m paying a nickel every time I want to run my tests, then a quarter, or even a dollar. I am a firm believer that nothing should ever discourage you from running unit tests, whether it is the performance, reliability, or cost of those tests, so I’m now exploring other options for intent resolution, including Levenshtein distance.
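The arithmetic behind those figures is straightforward if each test makes one language understanding call at roughly a tenth of a cent. The suite sizes here are inferred from the dollar amounts rather than stated:

```latex
\text{cost per run} = N_{\text{tests}} \times \$0.001
\quad\Rightarrow\quad
50 \times \$0.001 = \$0.05, \qquad
250 \times \$0.001 = \$0.25, \qquad
1000 \times \$0.001 = \$1.00
```

Because that cost is paid on every run, it grows with both the size of the suite and how often you run it.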

Agile Development for Chatbots

Chatbot development lends itself well to iterative, agile development, where early sprints can focus on prototyping the bot and later sprints can focus on individual intents, dialogs, and flows through the system.

I plan to write more on the use of agile and Kanban for delivery of chatbot functionality in the future, so stay tuned for more practical examples of this.

However, keep in mind that users typically try something once and then form a judgement about it. Once this judgement is formed it can be hard to convince a user to give it another try, even if significant progress has been made.

This is important because there’s a huge temptation to release chatbots before they are fully ready in order to collect additional utterances from users, since the more utterances you have, the better you can train your bot.

It is better to have a solid core of related, working intents in your bot for its initial wide release than to announce a bot that only supports one or two intents. Don’t ask me how I know.

Maintenance and Deployment Considerations for Conversational AI Solutions

Because conversational AI solutions are typically distributed systems with data and file storage components, a web service, integrations into platforms like Slack and Teams, and even external APIs and resources for language understanding and speech, it can be challenging to orchestrate new releases.

When developing chatbots, you should have a separate copy of resources like databases and machine learning models for each environment. That way you can develop against one environment, then update the testing environment’s resources, and finally the production resources.
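One lightweight way to keep environments apart is to resolve every external resource from a single environment setting. The `BOT_ENV` variable, connection strings, and model names below are placeholders for this sketch:

```python
import os
from dataclasses import dataclass


@dataclass(frozen=True)
class BotResources:
    """Everything one environment's bot talks to (all values are placeholders)."""
    database_url: str
    language_model: str  # e.g. a project or deployment name in your NLU service


ENVIRONMENTS = {
    "dev": BotResources("postgresql://localhost/bot_dev", "intents-dev"),
    "test": BotResources("postgresql://test-db/bot_test", "intents-test"),
    "prod": BotResources("postgresql://prod-db/bot", "intents-prod"),
}


def load_resources(env_var: str = "BOT_ENV") -> BotResources:
    """Pick resources from an environment variable, defaulting to dev, never silently to prod."""
    env = os.environ.get(env_var, "dev")
    if env not in ENVIRONMENTS:
        raise ValueError(f"Unknown environment {env!r}; expected one of {sorted(ENVIRONMENTS)}")
    return ENVIRONMENTS[env]
```

Defaulting to `dev` and rejecting unknown names means a typo fails loudly instead of quietly pointing at production data.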

Failing to do this properly can lead to cases where a new intent is added to your language understanding layer that your production bot now sees as a valid possibility but has no code to handle. Similarly, adding new capabilities to your bot’s data is great, but if the web service doesn’t support them yet, users can encounter errors or other confusing behavior.
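A cheap safeguard against that mismatch is to compare the intents your language model can return with the handlers your code actually implements, for example at startup or in a deployment pipeline. The handler table and intent names below are hypothetical, and how you retrieve the model’s intent list depends on your language service:

```python
from typing import Callable

Handler = Callable[[dict], str]

# Hypothetical handlers that this release of the web service actually implements.
HANDLERS: dict[str, Handler] = {
    "RainForecast": lambda entities: "Checking the forecast...",
    "BookReservation": lambda entities: "Let's book that.",
    "None": lambda entities: "Sorry, I didn't understand that.",
}


def check_intent_coverage(model_intents: set[str], handlers: dict[str, Handler]) -> set[str]:
    """Return intents the language model can emit that this code cannot handle."""
    return model_intents - set(handlers)


def dispatch(intent: str, entities: dict, handlers: dict[str, Handler]) -> str:
    """Route to a handler, degrading gracefully if the model returns an unknown intent."""
    handler = handlers.get(intent, handlers["None"])
    return handler(entities)
```

Failing the deployment when `check_intent_coverage` returns anything, and falling back to the None handler at runtime, covers both halves of the problem.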

Thankfully, most development teams are used to environment maintenance and using separate database resources per environment, so this should just be an example of applying old knowledge to new domains.

Ethical Considerations in Chatbots

Earlier I touched on the privacy considerations in chatbots and these are certainly a big aspect of ethics in chatbot interactions, but there’s more to it than just privacy.

In a future article I’ll detail more of the ethical issues that can arise from a virtual assistant that can receive a nearly unlimited range of user input, but to tease a few ideas, you must think through how your bot will react to the following types of scenarios:

- What if the user insults your bot or is using hate speech?

- What if the user expresses serious depression or even suicidal intent?

- What if the user confesses to a crime or is planning a crime?

- Can there be any negative consequences of an employee talking to a bot run by their employer?

- Can there be any negative consequences for a customer talking to a company-owned bot?

Some of these things we have firm answers for, while others we’re still figuring out as a society, but these are important questions to consider.

Conclusion

As we’ve seen, chatbot projects are not traditional software development or traditional data science projects, but a unique type of project with its own challenges and opportunities.

The good news is that chatbot systems can be broken down into individual systems and subsystems and the types of interactions a user can have with bots can be developed one at a time.

The bad news is that chatbots bring with them a wide range of planning, user experience, testing, deployment, and ethical considerations that must be thought through and methodically addressed in order to have a good result.

Ultimately, however, chatbot projects can be exceptionally achievable and valuable ways to innovate and deliver value to your users.