How Transformers Write Stories

Exploring Fabled.AI's transformer-based story and art generator

Cover image created by Matt Eland using MidJourney

For as long as I can remember, I’ve heard chatter and speculation as to whether or not artificial intelligence will ever be able to write compelling stories. I tell you that through the “magic” of transformers they now can.

Let’s explore Transformers through story generation with Fabled.AI’s transformer-based story generator.

This content is also available in video form on YouTube

Exploring Fabled’s AI Story Generator

Fabled is a surprisingly powerful tool that accepts short prompts and style parameters and uses them to generate short stories with a clear beginning, middle, and end, accompanied by related graphics that are occasionally helpful.

You start by giving it plain English text on what you want it to generate. You then also specify the complexity of the language used and the style of the images generated.

Note: Fabled.AI seems to be aimed at parents trying to generate bedtime stories for their children that are custom-tailored for the child’s interests. As someone who has worked with preschoolers for over 20 years, I love this idea.

After that, Fabled will generate a story for you. For me, this took about two minutes, if that.

Once that is complete, the story will appear in your library alongside your other stories:

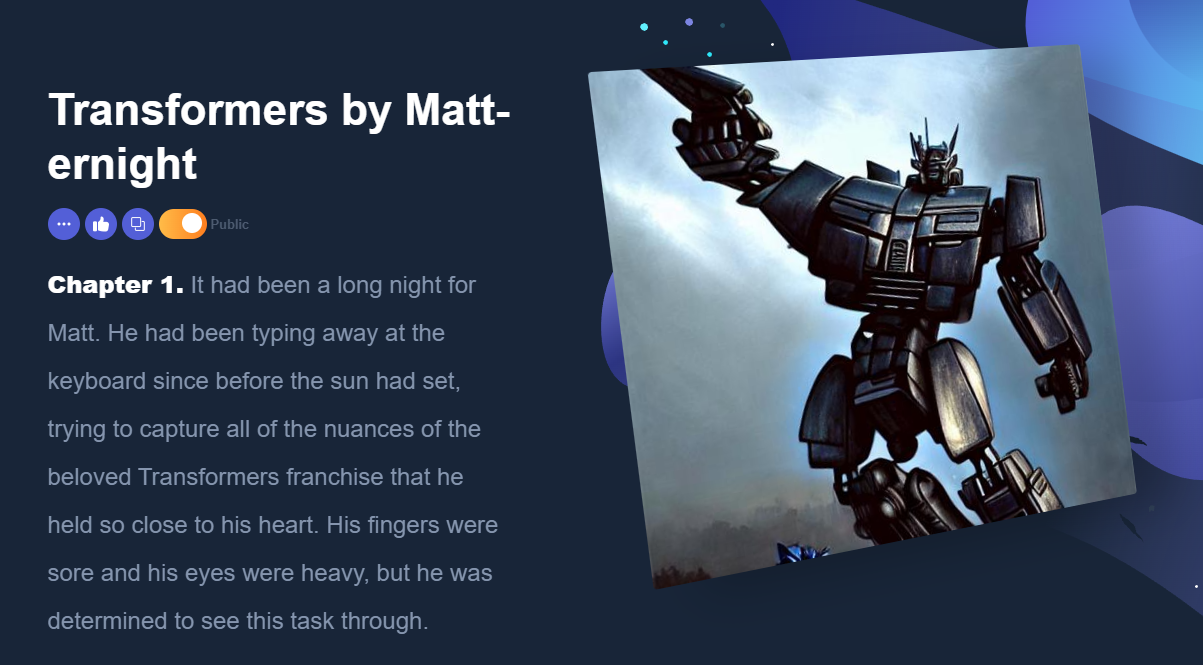

In this case, it appears to have taken the instructions on writing about Transformers quite literally as it featured a large robot stylized after the Transformers cartoons / TV shows / movies.

And sure enough, the story it generated discussed Matt trying to figure out how to take the Transformers franchise and put it down in article format.

The story it tells has a clear beginning, middle and end:

- Matt is trying to capture the nuances of the Transformers franchise for others to understand

- Matt sums up what Transformers means to him internally and why he liked it so much

- Matt writes about it, names it, and publishes it

- People like it and start pouring on reviews and praise

- People publicly doubt that anyone will ever surpass this article

The story certainly heaps on the praise at the end there, and this has been common to every story I’ve seen it generate. However, keep in mind that this is a generator built for young children dreaming of their futures. Such a story generator is probably not going to end a story in the style of Empire Strikes Back.

Observations about the Generated Story

Now, there are a few interesting things about this seemingly-simple story that feel clever to me.

First of all, there’s the disambiguation of the word Transformer. Of course I meant the transformers used in machine learning, but the word could just as well have referred to electrical transformers or the transforming robot franchise. Since this tool is built with children in mind, the assumption that the generator made makes a lot of sense.

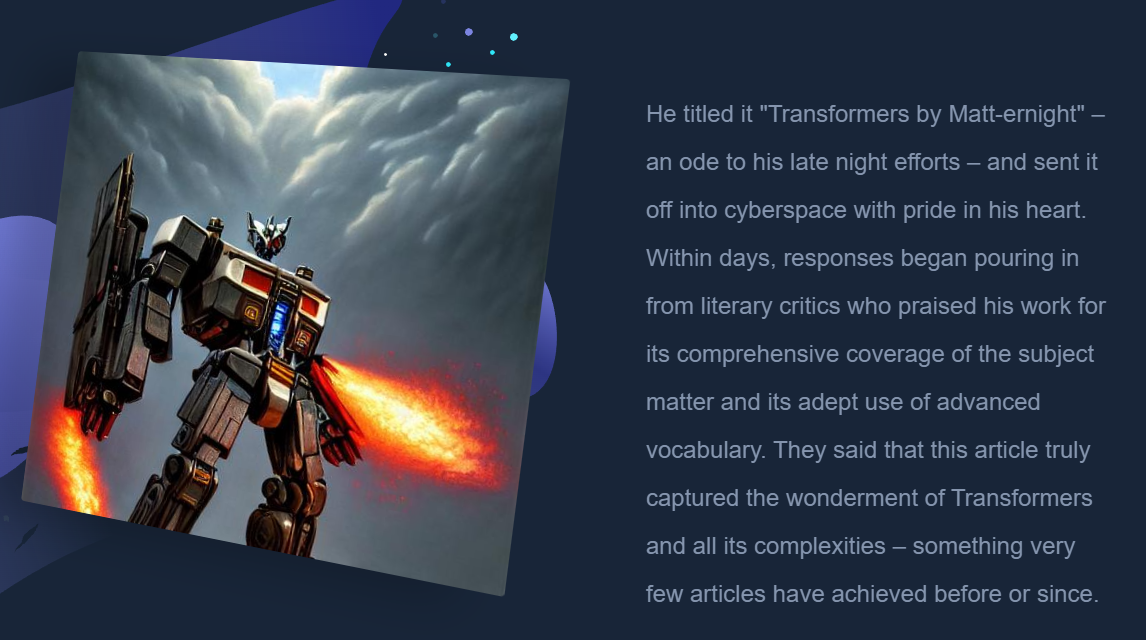

But the tool didn’t just stop there. Several paragraphs exhibited knowledge of the Transformers intellectual property (which I make no claims to, by the way):

- The second paragraph references movies and TV shows indicating some understanding of what Transformers is.

- A number of paragraphs evoke the popularity of the show, indicating some awareness of its success and draw

- The third paragraph accurately depicts the robots changing into jets or cars as well as their core values

- The fourth paragraph attempts to name the story with the suffix “by Matt-ernight” and describes this as “an ode to … late night efforts”.

There are enough details in here to suggest that the generator has some understanding of the story’s context - the goal of the character, the late night writing session, and the show Transformers itself.

In fact, there’s one quote that, to me, accurately sums up the franchise:

It was more than just robots changing into jets and cars - it was about heroism, morality, friendship and loyalty.

Not only is this an accurate summation, but when I ran a cursory Google search as a plagiarism check, I couldn’t find any mention of this text or anything close to it.

So how does this work?

Making Hypotheses about how Fabled’s Transformers Work

I’ve reached out to the wonderful folks at Fabled.AI, and while they were willing to confirm that they’re using transformers to power their solution, they declined to delve into technical details for understandable reasons.

So let’s talk about how a system like Fabled might work.

Side Note: Dear folks at Fabled.AI, if you’re reading this, I’m not advocating for anyone to compete with you, just using this as an opportunity for teaching. You all are wonderful.

A system that can take a string of text and generate a coherent story with illustrations has a few key problems:

- We need to figure out who is in the story

- The story needs a structure that can be broken down into key plot points

- Each plot point needs a paragraph of text

- Each paragraph needs an accompanying image

- The story needs a title

- It all needs to be wrapped together into a single story
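The steps above can be sketched as a small pipeline. To be clear, this is only my illustration of how the pieces might fit together, not a description of Fabled.AI's actual architecture. The names `Page`, `Story`, and `build_story` are hypothetical, and each stage is passed in as a plain function that a real system would back with a trained model.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Page:
    text: str        # one paragraph of story text
    image_ref: str   # identifier or path of the illustration for this paragraph


@dataclass
class Story:
    title: str
    protagonist: str
    pages: List[Page] = field(default_factory=list)


def build_story(
    prompt: str,
    find_protagonist: Callable[[str], str],
    plan_plot: Callable[[str, str], List[str]],
    write_paragraph: Callable[[str], str],
    draw_image: Callable[[str], str],
    write_title: Callable[[str], str],
) -> Story:
    """Run each hypothetical stage in order and bundle the results."""
    protagonist = find_protagonist(prompt)
    plot_points = plan_plot(prompt, protagonist)

    pages = []
    for point in plot_points:
        paragraph = write_paragraph(point)
        # Each paragraph gets its own illustration.
        pages.append(Page(text=paragraph, image_ref=draw_image(paragraph)))

    # The title is written last, from the full text of the story.
    full_text = "\n\n".join(page.text for page in pages)
    return Story(title=write_title(full_text), protagonist=protagonist, pages=pages)
```

Passing the stages in as functions keeps the sketch independent of any particular model or service, so each one can be swapped out or stubbed for testing.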

Entity Recognition

The first problem is fairly easy. Systems like Azure Cognitive Services and others have named entity recognition that can extract locations, names, dates, and other entities from text. If we see a person, that’s who our story is about.

In fact, entity recognition can tell us a lot about the story the user may desire. If we can analyze the major entities and verbs in the entry sentence, we could potentially use this to generate a list of key plot points.

Once a list of plot points was generated, each one could be fed into a transformer to build a small paragraph.
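Here is a rough sketch of that idea in Python. It does not call a real entity recognition service. Instead, `guess_protagonist` is a naive stand-in that just picks the first capitalized word that isn't in a small ignore list, and `plan_plot_points` fills in a fixed beginning / middle / end template. Both functions, the ignore list, and the template wording are assumptions of mine for illustration only.

```python
import re
from typing import List, Optional

# Words that are often capitalized at the start of a prompt but are not names.
# This short list is an illustrative assumption, not a complete stop list.
NOT_NAMES = {"A", "An", "The", "Write", "Tell", "Make", "Once", "I"}


def guess_protagonist(prompt: str) -> Optional[str]:
    """Return the first capitalized word that is not in NOT_NAMES, if any."""
    for word in re.findall(r"[A-Za-z]+", prompt):
        if word[0].isupper() and word not in NOT_NAMES:
            return word
    return None


def plan_plot_points(protagonist: str, goal: str) -> List[str]:
    """Fill a fixed beginning/middle/end template (a hypothetical structure)."""
    return [
        f"{protagonist} wants to {goal}.",
        f"{protagonist} struggles to {goal} and learns something along the way.",
        f"{protagonist} manages to {goal} and shares the result with others.",
    ]


who = guess_protagonist("Write a story about Matt writing an article about Transformers")
if who is not None:
    for point in plan_plot_points(who, "explain transformers"):
        print(point)
```

A heuristic like this would pick "Transformers" as the protagonist if that word came first, which is exactly why a real named entity recognition model that can tell people apart from other entities is the better tool here.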

However, I think it’s more likely that the system generates the entire story up front, so let’s look at that process.

Generating Text with Transformers

The text generation part of this application would involve a transformer that had been trained on stories similar to the ones we would want to generate.

Since this application is intended for children’s stories, we could train a transformer on a wealth of books suitable for the target audience. Some sources of data are likely available via Project Gutenberg or other similar services.

You could potentially train multiple transformers following this methodology, showing each one only a subset of the books. This would allow you to train a simple transformer only on books with simple vocabularies and a more complex transformer on a larger range of books.

Once the transformer is trained, you can rely on it to generate text from prompts. This is a journey I’ve not yet gone on myself, but I look to rectify that in the near future, so expect some future content from me on this topic.
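As a minimal sketch of how the books might be divided up, the code below scores each book by its average word length and only lets the "simple" training set see books under a threshold. Average word length is a crude proxy for vocabulary complexity that I'm assuming here for illustration, and the `4.5` threshold and the function names are made up. A real system would more likely use published reading levels or a proper readability measure.

```python
import re
from typing import Dict, List


def complexity_score(text: str) -> float:
    """Average word length in characters; 0.0 for text with no words."""
    words = re.findall(r"[A-Za-z']+", text)
    if not words:
        return 0.0
    return sum(len(w) for w in words) / len(words)


def split_corpus(books: Dict[str, str], simple_threshold: float = 4.5) -> Dict[str, List[str]]:
    """Assign each book title to the training sets it is allowed to appear in."""
    training_sets: Dict[str, List[str]] = {"simple": [], "complex": []}
    for title, text in books.items():
        # Every book trains the complex model; only easy books train the simple one.
        training_sets["complex"].append(title)
        if complexity_score(text) <= simple_threshold:
            training_sets["simple"].append(title)
    return training_sets


books = {
    "cat": "The cat sat on the mat.",
    "hard": "Extraordinary circumstances necessitate comprehensive deliberation.",
}
print(split_corpus(books))
```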

Generating Images with Transformers

Image generation is going to work similarly to text generation. With image generation, you’re training a transformer on images with textual descriptions of those images. To accomplish this you’d need a data source of tagged images to rely on.

Alternatively, you could just take in a data source of images and use an image analysis service like Azure Computer Vision to generate descriptions of those images and to identify the entities in them, along with their bounding boxes, using object detection.

Once you have a transformer trained on this data source, you could potentially feed it the entire paragraph of text representing what you’re trying to illustrate. If you generated the text of each paragraph from a simple prompt, you could instead feed that prompt to the image generation transformer.

Fabled.AI allows you to specify the style of the images. This could be accomplished either by using separately trained transformers per style that you would like, or by having a single transformer and adding on descriptive strings to the end of the image description to enforce the style you want.
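The second option is simple enough to sketch. The style names and the descriptive suffixes below are hypothetical examples of mine, not Fabled.AI's actual styles, and the resulting string would be handed to whatever image generation model you're using.

```python
from typing import Dict

# Hypothetical style names mapped to descriptive suffixes; the wording is illustrative.
STYLE_SUFFIXES: Dict[str, str] = {
    "watercolor": "soft watercolor illustration, pastel colors",
    "cartoon": "bright cartoon style, bold outlines",
    "storybook": "classic children's storybook illustration",
}


def build_image_prompt(description: str, style: str) -> str:
    """Append the style's descriptive suffix to the scene description."""
    suffix = STYLE_SUFFIXES.get(style)
    if suffix is None:
        raise ValueError(f"Unknown style: {style!r}")
    # Drop a trailing period so the suffix reads as part of the same description.
    return f"{description.strip().rstrip('.')}, {suffix}"


print(build_image_prompt("A large robot writes an article at night.", "cartoon"))
# prints: A large robot writes an article at night, bright cartoon style, bold outlines
```

The appeal of this approach is that a single model serves every style, and adding a new style is just a matter of adding another entry to the dictionary.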

Generating Titles with Transformers

Building story titles from your stories is maybe one of the more impressive things that Fabled.AI seems to do, although I was unimpressed by the title it picked for the example story I used in this article.

I’m frankly not sure how this works. I imagine that just as a transformer might take a little bit of text and generate a lot of text, you could reverse that to take the full text of a short story and generate a title.
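One way this might be done, and this is purely a guess on my part, is to treat title generation as just another text generation task: wrap the finished story in a prompt that ends with "Title:" and let the model complete it. The sketch below only builds that prompt and tidies up a reply. The prompt wording, the `max_chars` limit, and both function names are assumptions, and no real model is called.

```python
def build_title_prompt(story_text: str, max_chars: int = 2000) -> str:
    """Wrap the (possibly truncated) story in an instruction asking for a title."""
    excerpt = story_text.strip()[:max_chars]
    return (
        "Here is a short story for children:\n\n"
        f"{excerpt}\n\n"
        "Suggest a short title for this story.\nTitle:"
    )


def clean_title(model_output: str) -> str:
    """Keep the first non-empty line of the model's reply, without wrapping quotes."""
    for line in model_output.splitlines():
        line = line.strip().strip("\"'")
        if line:
            return line
    return ""


prompt = build_title_prompt("Matt sat down late at night to write about robots.")
print(prompt)
print(clean_title('\n  "Matt and the Robots"\nSome extra text'))
```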

To me, this is one of the more interesting aspects of the output, though the clear narrative structure of the stories is even more interesting.

Final Thoughts on Transformers

Regardless of the number, configuration, and training processes for its transformers, Fabled.AI is a remarkable tool for generating compelling short stories suitable for bedtime reading to children.

More than that, though, Fabled.AI is a sign of what’s to come through this remarkable technology where a human can specify an intent and partner with a machine to generate meaningful personalized output that has immediate value.

These technologies are not perfect and have some interesting questions to answer, but what we consider achievable has changed significantly in just a short period of time.