Managing your Azure Cognitive Services Costs

Tips for managing your Azure Cognitive Services expenses

In this article we’ll explore the pricing structure of Azure Cognitive Services and some helpful and creative things you can do to monitor, control, and reduce your Artificial Intelligence expenses on Azure.

While this article is a stand-alone exploration of cost management in Azure Cognitive Services, it’s also part two of a five-part series on controlling your AI / ML pricing on Azure. This series is also a part of the larger Azure Spring Clean 2023 community event, so be sure to check out the related posts.

This article assumes a basic familiarity with both Microsoft Azure and Azure Cognitive Services. I’ve written a general overview of Azure Cognitive Services as well as a number of targeted articles on individual services inside of Cognitive Services if you are curious.

I also need to issue a standard disclaimer on pricing: this article is based on my current understanding of Azure pricing, particularly in the North American regions. It is possible the information I provide is incorrect, not relevant to your region, or outdated by the time you read this. While I make every effort to provide accurate information, I provide no guarantee and assume no liability for any costs you incur. I strongly encourage you to read Microsoft’s official pricing information before making pricing decisions.

With that out of the way, let’s dive in and look at improving your Azure Cognitive Services costs.

General Strategies for Azure Cognitive Services Pricing

I like to think of Azure Cognitive Services’ pricing model as AI-as-a-Service. Azure Cognitive Services operates under a pay-per-use model, meaning that you pay a very small amount (typically fractions of a penny) for every call to the Azure Cognitive Services APIs.

This pricing model allows you to experiment easily with Azure Cognitive Services and get value out of small-scale prototypes, but the more you call the service, the more you pay.

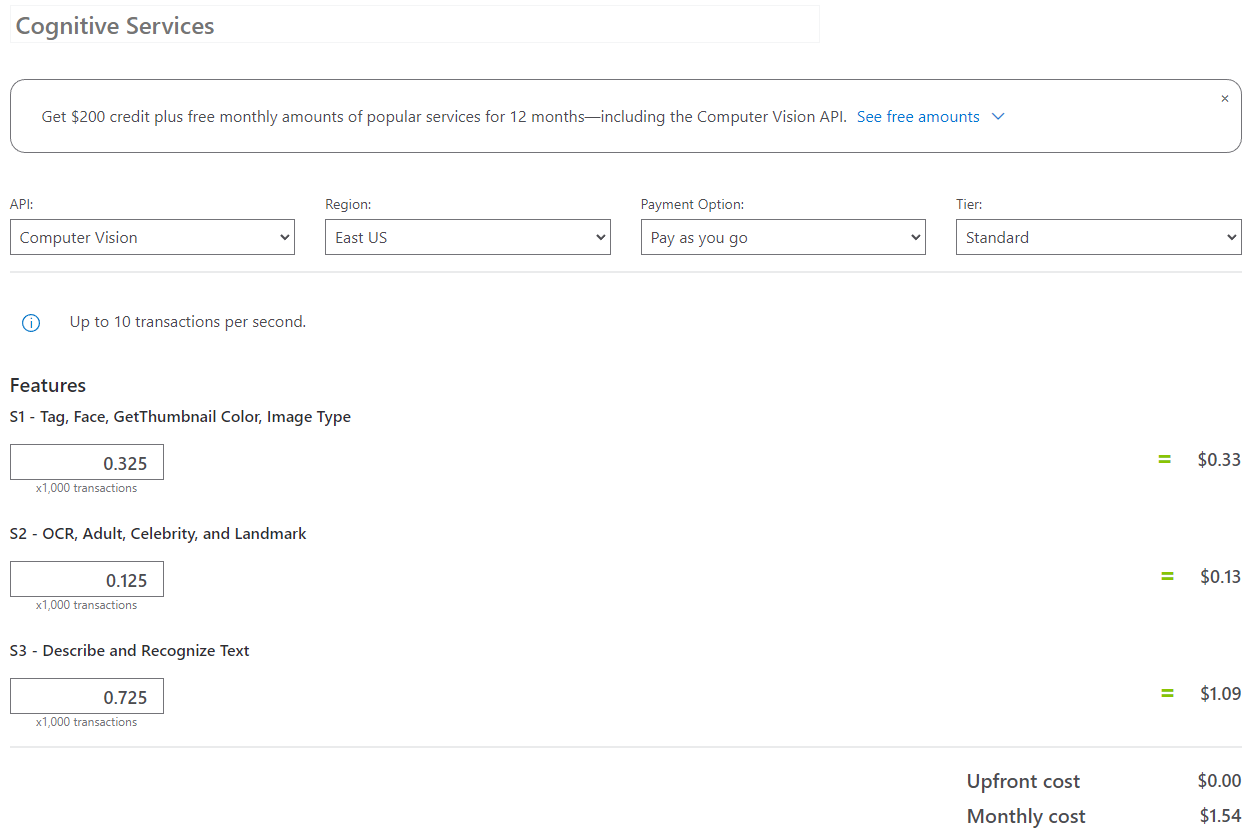

This means that it is important to plan out your pricing needs carefully using the Pricing Calculator and then to monitor your costs closely during prototyping to make sure they match your expectations.

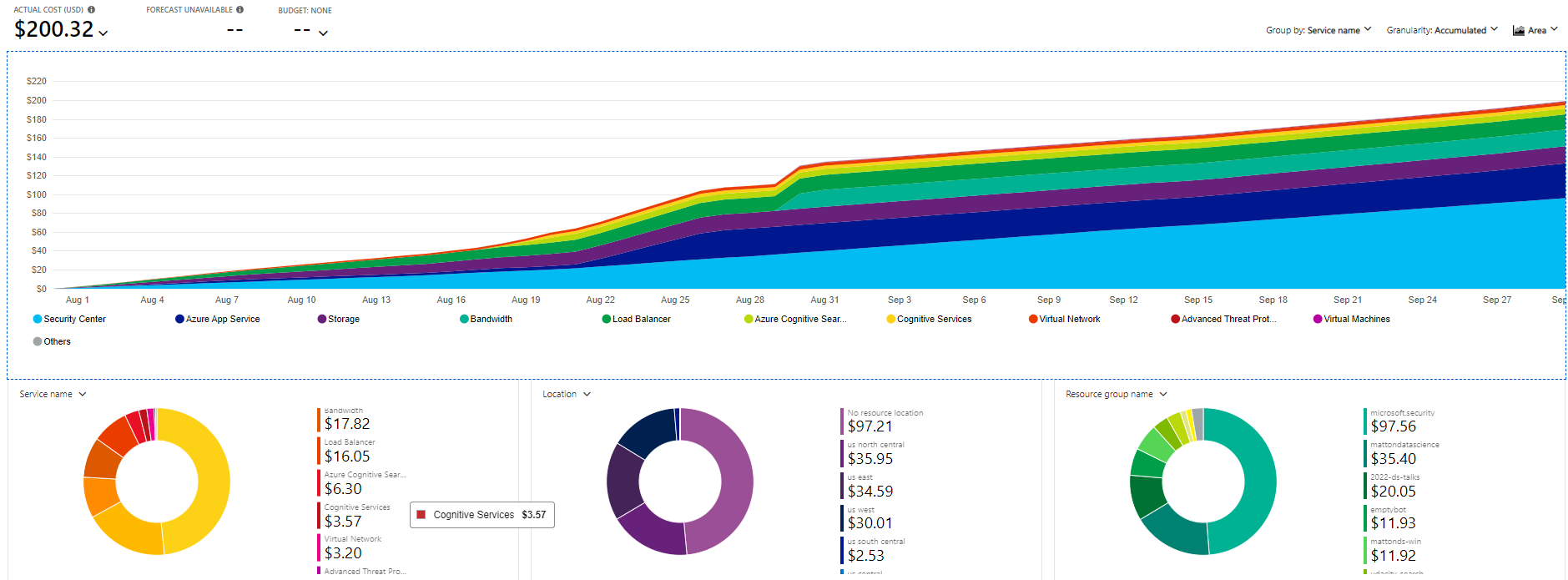

Monitoring can be done manually using Azure Cost Analysis, and I also recommend using budgets and alerts to help prevent unexpected cost overruns.

I recommend ensuring all your pricing expectations are met during low-scale prototyping and development and then relying heavily on cost analysis, alerts, and possibly budgets as you scale up your usage.

Finally, I do recommend you have a separate resource group for each application that uses Azure Cognitive Services so you can more easily get a sense of what applications are incurring the expenses using Azure Cost Analysis.

The exact tips I have will vary based on the type of service within Azure Cognitive Services, but here are a few general-purpose recommendations I have for you that apply to all of Azure Cognitive Services.

One Cognitive Service vs Many Individual Services

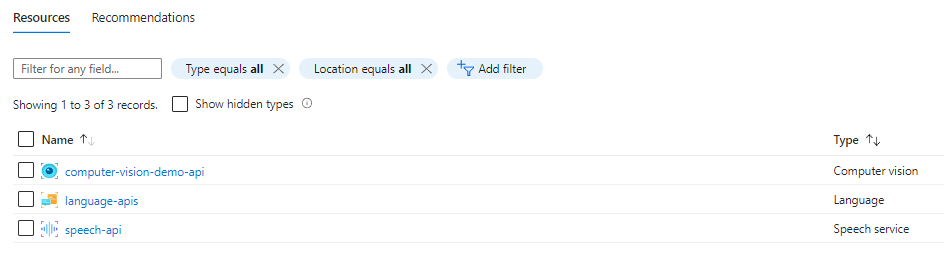

When you provision an Azure Cognitive Services resource, you have a choice between creating a general Cognitive Services instance or an instance of the specific resource you want to use, such as a language service.

At first glance it appears that the Cognitive Services instance is a better choice for developer convenience because you can use that same resource for the wide array of different cognitive services APIs. However, creating separate instances of individual services can have its advantages as well.

By managing separate resources for the individual sub-components of Azure Cognitive Services, you can get a more granular level of detail into where your expenses are flowing and set alerts and budgets at a per-API level instead of at the overall Cognitive Services level.

Additionally, if you desire absolute control of your APIs and do not want developers experimenting with unapproved parts of Azure Cognitive Services, providing individual service resources allows you to restrict which parts of Azure Cognitive Services your developers can access.

Unfortunately, managing individual services also carries some severe productivity penalties because your developers now need to manage multiple keys and URLs instead of a single resource. Additionally, the administrative workload of managing separate resources may be significantly higher than managing a single service.

Vision APIs

Let’s shift gears and talk about computer vision on Azure.

Computer vision is incredibly powerful and encompasses a variety of capabilities including detecting color, recognizing landmarks, brands, and celebrities, detecting objects, generating tags and areas of interest, and even describing the entire image.

When working with Computer Vision it can be tempting to ask Azure Cognitive Services for all of the available information on a given image since it’s all in the same API call, but use caution when doing this. A single request to detect color, landmarks, and tags is actually 3 separate transactions in terms of billing.

As a result, I strongly recommend you reduce your vision workloads to the minimum amount of information your application needs to do its job.
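To make the billing effect concrete, here is a minimal sketch that counts billed transactions, assuming (as described above) that each requested feature on each image counts as one transaction. The function name and the price of 1.00 per thousand transactions are hypothetical placeholders, not Azure’s actual rates.

```python
def estimate_vision_cost(image_count, features, price_per_1000):
    """Estimate cost assuming one billed transaction per feature per image.

    price_per_1000 is a hypothetical placeholder rate; check the official
    pricing page for the real figure in your region.
    """
    transactions = image_count * len(set(features))
    cost = transactions / 1000 * price_per_1000
    return transactions, cost


# Requesting three features triples the transaction count compared to one.
all_features = estimate_vision_cost(10_000, ["color", "landmarks", "tags"], 1.00)
tags_only = estimate_vision_cost(10_000, ["tags"], 1.00)
print(all_features)  # (30000, 30.0)
print(tags_only)     # (10000, 10.0)
```

Whatever the real rate is, the ratio is the point: dropping two features you don’t use cuts the transaction count for the same images to a third.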

In terms of performance, it’s important to be aware that the more tasks you have Azure Cognitive Services perform, the longer the API call will take. This is particularly true for complex tasks like detecting the bounding boxes of every object in an image.

If your application would use Azure Cognitive Services heavily, you have a large number of images available on hand, and your images are generally similar to each other, it may make financial sense to investigate training your own image classification model and deploying that solution instead of working with Azure’s. However, most of the time the broad flexibility of Azure’s models plus the affordable pricing makes training and maintaining your own models cost-prohibitive.

Face APIs

Unlike the other aspects of Azure Cognitive Services, the facial APIs can be a sensitive topic for many due to the potential for misuse.

The facial APIs in Azure are becoming more restricted as time goes on to prevent misuse and in response to ethical concerns from the community. This means that established features have been phased out and classes of users such as government and law enforcement agencies are prohibited from using the facial APIs.

The pricing of facial APIs is very similar to that of computer vision in general, but you tend to hit the cheaper bulk rates sooner with content moderation.

Because of the sensitivity of facial APIs, I’d encourage you to only use these APIs when they’re critical to your application’s core functionality. Otherwise, you may find yourself using APIs that become phased out or releasing new features that alarm your users instead of delighting them.

Content Moderation

Content Moderation is built around flagging objectionable content in images.

The pricing of content moderation follows a very similar curve to the face APIs, but the prices are slightly cheaper in the 1 to 5 million transaction range.

It may be tempting to try to build your own image classification model to reduce the need for a professional content moderation service, but I strongly recommend against this due to the nature of the images you are training your models to detect, including disturbingly graphic scenes and actions involving minors.

Unless content moderation is your organization’s main product, it is very likely not worth the personal cost to your team to have them build their own models when a ready-made solution is already available in Azure.

Speech APIs

Let’s move out of the visual content and get into the audio content.

Pricing for both the text-to-speech and speech-to-text APIs revolves around the length of the audio file or the length of the text you are converting. This means that transcribing short pieces of audio (such as a few seconds of a user talking to a computer) and generating speech output for short responses is going to be fairly affordable.

As an anecdote, I ran a conference talk entitled “Automating My Dog with Azure Cognitive Services” that used speech-to-text, text-to-speech, language APIs, and vision APIs. I was constantly talking to this application as I developed it, and yet my total cost for all cognitive services usage was 12 cents for the entire month.

If you have more than a single user using your system and your workload is heavy, your costs will certainly be higher. Still, as long as the spoken text and generated replies are short, the speech APIs tend to be extremely affordable in my experience.

While I’m talking about speech, I should note that with text-to-speech you must select one of many different supported voices. Some of these profiles are neural voices that are designed to be more authentic and life-like. These advanced voices cost a bit more than non-neural voices due to the additional work Azure does during speech synthesis.

If you don’t need the quality of your generated voice to be believable, it’s cheaper to use a non-neural voice model, but I do not recommend it given the significant quality improvement of neural voices.

It is possible to build custom neural voice models for text-to-speech, with approval from Microsoft. If you go this route, your prices will be significantly more expensive for training, hosting, and generating responses using these custom neural models. I very strongly recommend against this approach unless it is critical for your organization that the voice must sound unique or like a specific individual.

Finally, with text-to-speech, if you find your application frequently responds with the same words, it may be a good idea to pre-generate audio responses by determining your common replies, calling out to the Azure Cognitive Services APIs, and saving the response as a .wav file.

If you find your application needing to reply with the same words in the future, you can always load the .wav file and play the audio locally instead of calling out to Azure Cognitive Services. This can be particularly helpful with canned responses like “I don’t understand” or “I’m having trouble communicating right now” that you want to be available at all times including when the user’s internet connection is unstable.
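One way to sketch this pre-generation idea is a small file-backed cache. This is only an illustration: `get_speech_audio`, the `speech_cache` folder, and the `synthesize` callable are all hypothetical names, and `synthesize` stands in for whatever text-to-speech call your application already makes.

```python
import hashlib
from pathlib import Path


def get_speech_audio(text, voice, synthesize, cache_dir="speech_cache"):
    """Return WAV bytes for `text`, calling `synthesize` only on a cache miss.

    `synthesize` is a hypothetical callable (text, voice) -> bytes that wraps
    your existing text-to-speech request.
    """
    folder = Path(cache_dir)
    folder.mkdir(parents=True, exist_ok=True)
    # Key on both the voice and the text so a voice change never reuses old audio.
    key = hashlib.sha256(f"{voice}\n{text}".encode("utf-8")).hexdigest()
    wav_path = folder / f"{key}.wav"
    if wav_path.exists():
        return wav_path.read_bytes()  # played locally, no API call
    audio = synthesize(text, voice)
    wav_path.write_bytes(audio)
    return audio
```

Calling this once per canned reply at build or startup time would leave the .wav files on disk, so those replies stay available even when the service cannot be reached.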

Translation APIs

Similar to speech, translation is paid for by the length of the text, with current pricing being at $10 USD per million characters. At that pricing level, it would cost me roughly 14 cents to translate my article on Azure Machine Learning pricing tips.
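The arithmetic behind that estimate is simple enough to sketch. The rate below is the one quoted above, while the 14,000-character article length is my assumption, chosen to match the roughly 14 cent figure.

```python
PRICE_PER_MILLION_CHARS_USD = 10.00  # rate quoted above; verify current pricing


def translation_cost_usd(character_count, price_per_million=PRICE_PER_MILLION_CHARS_USD):
    """Estimate the translation cost for a given number of characters."""
    return character_count / 1_000_000 * price_per_million


# A 14,000-character article (an assumed length) comes to about 14 cents.
print(round(translation_cost_usd(14_000), 2))  # 0.14
```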

Many uses of the translation API will be manually triggered for specific content you know you want translated, but if you find yourself writing code to automatically call the translation API, make sure you are not repeatedly translating the same text. I would recommend storing the results of any translation task so that you do not need to repeat it in the future.
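As a rough sketch of storing translation results, the class below keeps them in a SQLite table so each text and target language pair is only sent to the API once. `TranslationCache` and the `translate` callable are hypothetical names; `translate` stands in for your existing call to the translation API.

```python
import sqlite3


class TranslationCache:
    """Stores translations so each (text, target language) pair is paid for once."""

    def __init__(self, translate, db_path=":memory:"):
        # `translate` is a hypothetical callable (text, target_lang) -> str.
        self._translate = translate
        self._db = sqlite3.connect(db_path)
        self._db.execute(
            "CREATE TABLE IF NOT EXISTS translations ("
            "source TEXT, target_lang TEXT, result TEXT, "
            "PRIMARY KEY (source, target_lang))"
        )

    def get(self, text, target_lang):
        row = self._db.execute(
            "SELECT result FROM translations WHERE source = ? AND target_lang = ?",
            (text, target_lang),
        ).fetchone()
        if row is not None:
            return row[0]  # cache hit: no API call, no charge
        result = self._translate(text, target_lang)
        self._db.execute(
            "INSERT OR REPLACE INTO translations VALUES (?, ?, ?)",
            (text, target_lang, result),
        )
        self._db.commit()
        return result
```

Passing a file path as `db_path` instead of the in-memory default would let the stored translations survive between runs.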

Text Analysis APIs

Like the vision APIs, there are a large number of different parts of the text analysis APIs, but these APIs typically are individually called so we don’t have the same price confusion points that are common with the vision APIs.

Tasks like sentiment analysis, key phrase extraction, language detection, named entity recognition, and PII detection are all billed at fractions of a penny per request.

This means that the common strategy of caching the results of each call, so that you never call the API twice with the same data, still applies here.

Like the vision APIs, the more you call a text analysis API the cheaper each call gets, to the point where it can cost you 25 cents for a thousand requests if you’re making over ten million text requests.

My opinion on the pricing of text analysis on Azure depends on the task. If you are using complex things like key phrase extraction or named entity recognition, I find text analytics to be absolutely worth using even at large scale.

However, if you are sending millions of requests through Azure Cognitive Services for sentiment analysis, I would likely be tempted to use local compute resources and a locally trained machine learning model to predict the sentiment value of text. Keep in mind that Microsoft’s models are being improved constantly and have been trained on a very large breadth of inputs and likely will perform more accurately than anything you train yourself.

Language Understanding APIs

Finally, the language understanding APIs such as LUIS and CLU exist to let you match user utterances to intents.

The language understanding APIs are billed at a per-request level, though the pricing is more expensive than the other language APIs at $1.50 USD per thousand requests at the time of this writing for East US.

That being said, the classification models that conversational language understanding uses and the ease of training and deploying these models using Azure are typically worth the price.

You can mitigate these costs to some extent by caching the results of common requests and skipping the call to Azure Cognitive Services, though this is less feasible since there are so many different ways for a person to ask the same question.
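A minimal sketch of such a cache might normalize each utterance first so that trivial differences in casing and punctuation still produce a hit. `IntentCache`, `normalize`, and the `classify` callable are hypothetical names, with `classify` standing in for your existing language understanding request. The savings estimate uses the East US rate quoted above.

```python
import string

PRICE_PER_1000_REQUESTS_USD = 1.50  # rate quoted above for East US; verify it


def normalize(utterance):
    """Lowercase, strip ASCII punctuation, and collapse whitespace."""
    cleaned = utterance.lower().translate(str.maketrans("", "", string.punctuation))
    return " ".join(cleaned.split())


class IntentCache:
    def __init__(self, classify):
        # `classify` is a hypothetical callable utterance -> intent name.
        self._classify = classify
        self._intents = {}
        self.hits = 0
        self.misses = 0

    def get_intent(self, utterance):
        key = normalize(utterance)
        if key in self._intents:
            self.hits += 1
            return self._intents[key]
        self.misses += 1
        intent = self._classify(utterance)
        self._intents[key] = intent
        return intent

    def estimated_savings_usd(self):
        """Cost of the requests the cache avoided, at the assumed rate."""
        return self.hits / 1000 * PRICE_PER_1000_REQUESTS_USD


cache = IntentCache(lambda text: "GetWeather")
cache.get_intent("What's the weather?")
cache.get_intent("whats the weather")
print(cache.hits, cache.misses)  # 1 1
```

Normalization only catches near-identical phrasing, so rewordings such as “is it going to rain” would still go to the service.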

In extreme cases you could consider training your own multi-class classification model using ML.NET or scikit-learn, but you would need to manage the training and deployment of such a model yourself.

Additionally, less accurate heuristic approaches such as Levenshtein distance can be used as a stand-in for the language understanding APIs.
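To illustrate the Levenshtein idea, here is a small sketch that maps an utterance to the intent of the closest known example phrase. The intent names, sample phrases, and `max_distance` threshold are illustrative assumptions, not values from any Azure service.

```python
def levenshtein(a, b):
    """Number of single-character edits needed to turn a into b."""
    previous = list(range(len(b) + 1))
    for i, char_a in enumerate(a, start=1):
        current = [i]
        for j, char_b in enumerate(b, start=1):
            substitution = previous[j - 1] + (char_a != char_b)
            current.append(min(previous[j] + 1, current[j - 1] + 1, substitution))
        previous = current
    return previous[-1]


def closest_intent(utterance, examples, max_distance=5):
    """Return the intent of the nearest example, or None if nothing is close.

    `examples` maps lowercase sample utterances to intent names.
    """
    text = utterance.lower().strip()
    best_phrase = min(examples, key=lambda phrase: levenshtein(text, phrase))
    if levenshtein(text, best_phrase) > max_distance:
        return None
    return examples[best_phrase]


examples = {"turn on the lights": "LightsOn", "turn off the lights": "LightsOff"}
print(closest_intent("Turn on the light", examples))  # LightsOn
```

The sample phrases also show why this is less accurate: “turn on the lights” and “turn off the lights” are only two edits apart despite meaning opposite things, so a small typo can flip the result.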

However, with the rise of ChatGPT, people now expect a high degree of accuracy in conversational AI, so cutting corners in language understanding for price improvements is likely to be noticed.

Conclusion

Azure Cognitive Services encompasses a large set of capabilities on Azure that each have their own distinct pricing models.

However, the common theme in Azure Cognitive Services is that you’re paying based on how much of the service you’re actually using, whether that’s in terms of minutes of audio recordings, a count of characters, or simply individual API calls.

A smart caching layer can help reduce your costs by making sure each call to Azure Cognitive Services is distinct and gives you value you’ve not requested before.

Additionally, most everything in Azure Cognitive Services is a form of a highly specialized classification or regression model. If your needs are severe enough, you could always train, evaluate, host, and deploy your own machine learning models to replace aspects of Azure Cognitive Services, but given the time, cost, and quality trade-offs involved, doing so is usually not worth it except in extreme cases.

For those who are interested in training and deploying their own machine learning models on Azure, check out my Tips for Managing Your Azure Machine Learning Costs article.

Stay tuned for more content as my Azure Spring Clean 2023 series continues covering Azure Bot Service, Azure Cognitive Search, and OpenAI on Azure.