Detecting Languages with Azure Cognitive Services in C#

Building intelligent apps using Azure Cognitive Services Language Detection

Language detection is a small part of Azure Cognitive Services: you send a string to Azure and get back a predicted language along with a confidence score. In this article we’ll cover how to do that from C# using the Azure SDK.

In order to get the most out of this article you should understand:

- The basics of Azure Cognitive Services

- The basics of C# programming

- How to add a package reference using NuGet

As we go, I’ve linked to other articles that will help fill out your learning journey on some of these other concepts.

Adding a Reference to the Azure Cognitive Services Language SDK

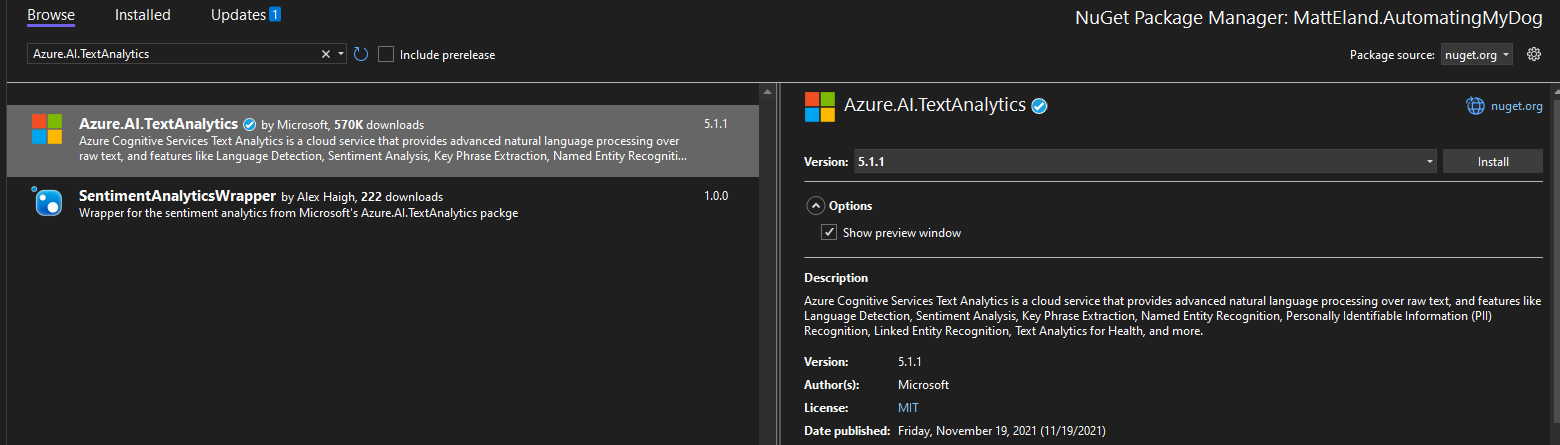

In order to work with Azure Cognitive Services, we need to install the latest version of the Azure.AI.TextAnalytics package using NuGet package manager.

Caution: do not use Microsoft.Azure.CognitiveServices.Language.TextAnalytics. This is the old version of this library and it has some bugs around language detection.

See Microsoft’s documentation on NuGet package manager for additional instructions on adding a package reference.

Note: Adding the package can also be done in the .NET CLI with dotnet add package Azure.AI.TextAnalytics.

Creating a TextAnalyticsClient Instance

Before we can work with language detection, we’ll need to add some using statements to the top of our C# file:

using Azure;

using Azure.AI.TextAnalytics;

These allow us to use classes in the TextAnalytics namespaces.

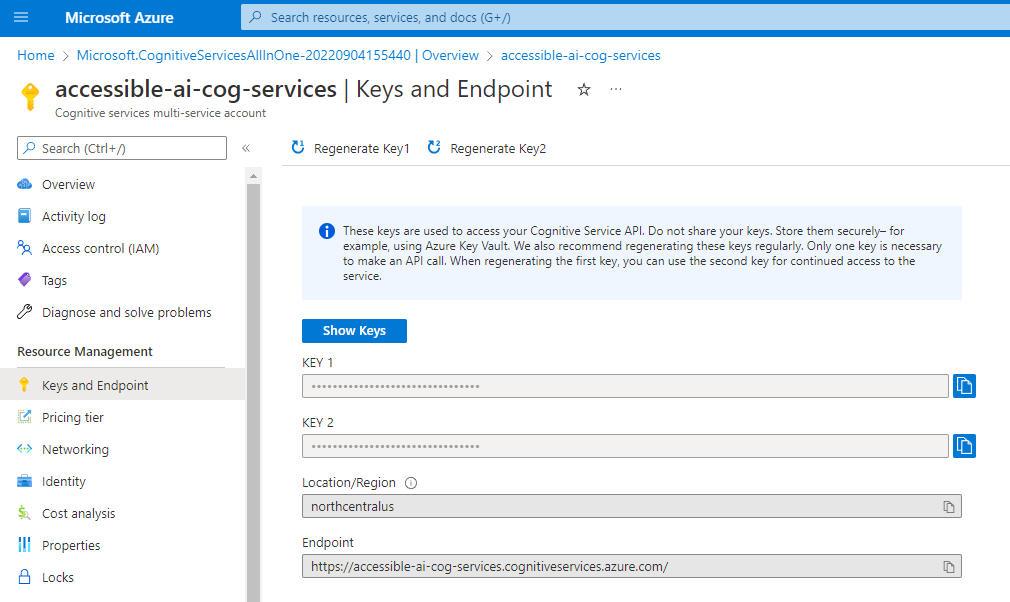

Next, we’ll need our key and endpoint. These can be found on the Keys and Endpoint blade of your Cognitive Services instance in the Azure portal.

If you are using a single Azure Cognitive Services instance, use one of that service’s keys and its endpoint. If you instead created a stand-alone Language service to keep it isolated, use that service’s key and endpoint.

// These values should come from a config file and should NOT be stored in source control

string key = "YourKeyGoesHere";

Uri endpoint = new Uri("https://YourCogServicesUrl.cognitiveservices.azure.com/");

Important Security Note: In a real application you should not hard-code your cognitive services key in your source code or check it into source control. Instead, you should get this key from a non-versioned configuration file via IConfiguration or similar mechanisms. Checking in keys can lead to people discovering sensitive credentials via your current source or your source control history and potentially using them to perform their own analysis at your expense.
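As one possible "similar mechanism", the sketch below reads both values from environment variables rather than from source code. The variable names COGNITIVE_SERVICES_KEY and COGNITIVE_SERVICES_ENDPOINT and the GetRequiredSetting helper are hypothetical names chosen for illustration; they are not part of the Azure SDK.

```csharp
using System;

// Reads a required setting and fails fast with a clear message when it is missing.
// GetRequiredSetting is a hypothetical helper, not an SDK method.
static string GetRequiredSetting(string name)
{
    var value = Environment.GetEnvironmentVariable(name);
    if (string.IsNullOrWhiteSpace(value))
    {
        throw new InvalidOperationException($"Environment variable '{name}' is not set.");
    }
    return value;
}

try
{
    // Hypothetical variable names; use whatever fits your deployment
    string key = GetRequiredSetting("COGNITIVE_SERVICES_KEY");
    Uri endpoint = new Uri(GetRequiredSetting("COGNITIVE_SERVICES_ENDPOINT"));

    // Never print the key itself; its length is enough to confirm it loaded
    Console.WriteLine($"Loaded a key of length {key.Length} for {endpoint.Host}");
}
catch (InvalidOperationException ex)
{
    Console.Error.WriteLine(ex.Message);
}
```

Failing fast on a missing setting tends to give a clearer error than letting an empty key reach Azure and come back as an authentication failure.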

Next, we’ll need to authenticate with a TextAnalyticsClient. This object will handle all communications with Azure Cognitive Services later on.

// Create the TextAnalyticsClient and set its endpoint

AzureKeyCredential credentials = new AzureKeyCredential(key);

TextAnalyticsClient textClient = new TextAnalyticsClient(endpoint, credentials);

With the textClient created and configured, we’re ready to start making calls out to detect language.

Detecting Languages with the Azure Cognitive Services SDK

Before we can detect a language, we need some text from the user. In a console application, this might be as simple as reading it from Console.ReadLine:

Console.WriteLine("Enter a phrase to detect language:");

string text = Console.ReadLine();

Once we have that text, we need to analyze it. We do this by calling the DetectLanguage or DetectLanguageAsync method, which sends the text to our Azure Cognitive Services instance and interprets the response.

// Detect the language of the string the user typed in

Response<DetectedLanguage> response = textClient.DetectLanguage(text);

// Extract the language from the response

DetectedLanguage lang = response.Value;
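For completeness, here is a rough sketch of the asynchronous variant with basic error handling. It assumes the Azure.AI.TextAnalytics package is installed and reuses the placeholder key and endpoint from earlier; the empty-input guard and the error message wording are my own additions.

```csharp
using System;
using Azure;
using Azure.AI.TextAnalytics;

// Placeholder values as before; real values should come from configuration
var textClient = new TextAnalyticsClient(
    new Uri("https://YourCogServicesUrl.cognitiveservices.azure.com/"),
    new AzureKeyCredential("YourKeyGoesHere"));

Console.WriteLine("Enter a phrase to detect language:");
string text = Console.ReadLine() ?? string.Empty;

// Skip the call entirely when there is nothing to analyze
if (string.IsNullOrWhiteSpace(text))
{
    Console.WriteLine("No text entered.");
    return;
}

try
{
    // Awaiting keeps the calling thread free while the request is in flight
    Response<DetectedLanguage> response = await textClient.DetectLanguageAsync(text);
    DetectedLanguage lang = response.Value;
    Console.WriteLine($"{lang.Name} (ISO Code: {lang.Iso6391Name})");
}
catch (RequestFailedException ex)
{
    // Raised by the SDK when the service returns an error, such as an invalid key
    Console.WriteLine($"Language detection failed (HTTP {ex.Status}): {ex.Message}");
}
```

The async version is usually the better fit for web applications and UI code, where blocking a thread on a network call is costly.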

Note: In this section I’m including details on the REST request for those curious. You don’t need to worry about these messages if you are using the Azure SDK since that takes care of the requests and responses for you.

The DetectLanguage call sends a REST POST request to https://YourCogServicesUrl.cognitiveservices.azure.com/text/analytics/v3.1/languages.

The request also includes an Ocp-Apim-Subscription-Key header containing your cognitive services key. This is how Azure knows to allow the request.

The body of that REST request looks something like the following JSON:

{

"documents": [

{

"id":"0",

"text":"Hello there",

"countryHint":"us"

}

]

}

Azure Cognitive Services checks the subscription key in the request headers, processes the request, and then sends back a response like the following:

{

"documents":[

{

"id":"0",

"detectedLanguage": {

"name":"English",

"iso6391Name":"en",

"confidenceScore":1.0

},

"warnings":[]

}

],

"errors":[],

"modelVersion":"2021-11-20"

}
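If you are curious how a response like this maps to individual fields, the sketch below pulls them out by hand with System.Text.Json. The ReadFirstLanguage helper is a hypothetical name for illustration, and this is not how the SDK is implemented internally; it only shows where each value lives in the JSON.

```csharp
using System;
using System.Text.Json;

// The response body shown above, embedded so the sketch runs without a network call
const string responseJson = @"{
  ""documents"": [
    {
      ""id"": ""0"",
      ""detectedLanguage"": {
        ""name"": ""English"",
        ""iso6391Name"": ""en"",
        ""confidenceScore"": 1.0
      },
      ""warnings"": []
    }
  ],
  ""errors"": [],
  ""modelVersion"": ""2021-11-20""
}";

// Reads the detected language fields from the first document in the response.
// ReadFirstLanguage is a hypothetical helper, not part of the Azure SDK.
static (string Name, string IsoCode, double Confidence) ReadFirstLanguage(string json)
{
    using JsonDocument doc = JsonDocument.Parse(json);
    JsonElement detected = doc.RootElement
        .GetProperty("documents")[0]
        .GetProperty("detectedLanguage");

    return (
        detected.GetProperty("name").GetString() ?? string.Empty,
        detected.GetProperty("iso6391Name").GetString() ?? string.Empty,
        detected.GetProperty("confidenceScore").GetDouble());
}

var (name, isoCode, confidence) = ReadFirstLanguage(responseJson);
Console.WriteLine($"{name} (ISO Code: {isoCode}): {confidence:P}");
```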

The Azure SDK converts this response automatically into a DetectedLanguage instance which we can then use to display these results:

Console.WriteLine();

Console.WriteLine("Detected Language:");

Console.WriteLine($"{lang.Name} (ISO Code: {lang.Iso6391Name}): {lang.ConfidenceScore:P}");

Here ConfidenceScore is a value from 0 to 1, with 1 meaning 100% confidence in that language prediction.

This will print out something like the following for an English phrase:

Enter a phrase to detect language:

My hovercraft is full of eels.

Detected Language:

English (ISO Code: en): 90.00%

It will display the following for a French phrase:

Enter a phrase to detect language:

Je suis une pomme de terre.

Detected Language:

French (ISO Code: fr): 93.00%

Note that the service returns only a single language for each string. If the text mixes languages, you will get back only the dominant language, along with a reduced ConfidenceScore.

Ultimately language detection gives us a cheap, fast, and intuitive way of detecting the dominant language of a user’s input. We can then take that information and route the user appropriately to localized resources.
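As a rough illustration of that routing idea, the sketch below picks a localized page from the detected ISO code and falls back to a default when the language is unsupported or the confidence is low. The page paths, the 0.75 cutoff, and the ChooseHelpPage helper are all hypothetical; in a real application the code and score would come from lang.Iso6391Name and lang.ConfidenceScore.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical mapping from ISO 639-1 codes to localized help pages
var localizedPages = new Dictionary<string, string>(StringComparer.OrdinalIgnoreCase)
{
    ["en"] = "/help/en",
    ["fr"] = "/help/fr",
};

// Returns the localized page for a detected language, or the fallback when the
// language is not supported or the prediction is below the confidence cutoff.
static string ChooseHelpPage(
    IReadOnlyDictionary<string, string> pages,
    string isoCode,
    double confidence,
    double minimumConfidence = 0.75,
    string fallbackPage = "/help/en")
{
    if (confidence >= minimumConfidence && pages.TryGetValue(isoCode, out var page))
    {
        return page;
    }
    return fallbackPage;
}

// A confident French prediction routes to the French page
Console.WriteLine(ChooseHelpPage(localizedPages, "fr", 0.93));

// A low-confidence prediction falls back to the default page
Console.WriteLine(ChooseHelpPage(localizedPages, "fr", 0.40));
```

A cutoff like this is a judgment call: since mixed-language input lowers the score, a threshold keeps ambiguous text from being routed on a weak guess.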