Connecting to an Azure Workspace from the Python SDK

Use the Azure ML Python SDK to connect to Azure Machine Learning Studio

In this article we’ll explore several ways of connecting to an Azure Machine Learning Studio Workspace from Python code using the Azure Machine Learning SDK for Python. We’ll also look at some of the things you can do with that workspace after connecting.

Note: this article assumes you have already installed the Azure Machine Learning SDK for Python. Please see Microsoft’s installation guide for current installation instructions.

Connecting to Azure Machine Learning Studio

To connect to a workspace, that workspace must already exist. If you have not created one yet, see my article on creating an Azure Machine Learning Workspace.

Once you have a Workspace, the SDK needs three pieces of information:

- Your Azure Subscription ID

- The Resource Group the Workspace is in

- The name of the Workspace

These are the three basic things you need to connect to a workspace in Azure, and there are two ways of using them.

Connecting with Raw Credentials

Although I do not recommend this approach, it is possible to manually connect to a workspace by providing the necessary information as strings to the Workspace constructor:

from azureml.core import Workspace

subscription_id = 'some-id-goes-here'

resource_group = 'my-resource-group-name'

workspace_name = 'MattOnDataScience'

ws = Workspace(subscription_id, resource_group, workspace_name)

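If neither the workspace nor its resource group exists yet, you can create both with Workspace.create, using code similar to the following:

from azureml.core import Workspace

subscription_id = 'some-id-goes-here'

resource_group = 'my-resource-group-name'

workspace_name = 'MattOnDataScience'

resource_group_location = 'eastus2'

# The plain Workspace constructor does not accept create_resource_group or location;

# Workspace.create provisions the workspace and, if requested, its resource group

ws = Workspace.create(name=workspace_name,

subscription_id=subscription_id,

resource_group=resource_group,

create_resource_group=True,

location=resource_group_location)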

This approach works, and it has the benefit of making it very clear which workspace you are connecting to. The major downsides are that your workspace information is stored in code, which is likely tracked in version control, and that it becomes harder to reuse the same code against multiple workspaces.
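If you do stay with the constructor approach, one way to soften the first downside is to read the three values from environment variables instead of hard-coding them. The following is only a minimal sketch: the environment variable names and both helper functions are hypothetical names I made up for illustration and are not part of the SDK.

```python
import os

# Hypothetical environment variable names; choose whatever suits your project.
ENV_KEYS = {
    "subscription_id": "AZUREML_SUBSCRIPTION_ID",
    "resource_group": "AZUREML_RESOURCE_GROUP",
    "workspace_name": "AZUREML_WORKSPACE_NAME",
}


def workspace_settings_from_env(environ=None):
    """Collect the three workspace identifiers from environment variables."""
    environ = os.environ if environ is None else environ
    missing = [var for var in ENV_KEYS.values() if not environ.get(var)]
    if missing:
        raise KeyError("Missing environment variables: " + ", ".join(missing))
    return {key: environ[var] for key, var in ENV_KEYS.items()}


def connect_from_env():
    """Connect with the Workspace constructor using values from the environment."""
    # Imported here so the helper above can be used without the SDK installed.
    from azureml.core import Workspace

    settings = workspace_settings_from_env()
    return Workspace(
        settings["subscription_id"],
        settings["resource_group"],
        settings["workspace_name"],
    )
```

With the three variables set in your shell, calling connect_from_env() should return the same kind of Workspace object as the constructor call shown earlier.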

Connecting with a Config.json File

The second way to connect to a workspace, and the one I personally recommend, is to use a config.json file to represent your workspace. This file typically lives in the same directory as your Python code and looks something like the following:

{

"subscription_id": "some-id-goes-here",

"resource_group": "my-resource-group-name",

"workspace_name": "MattOnDataScience"

}

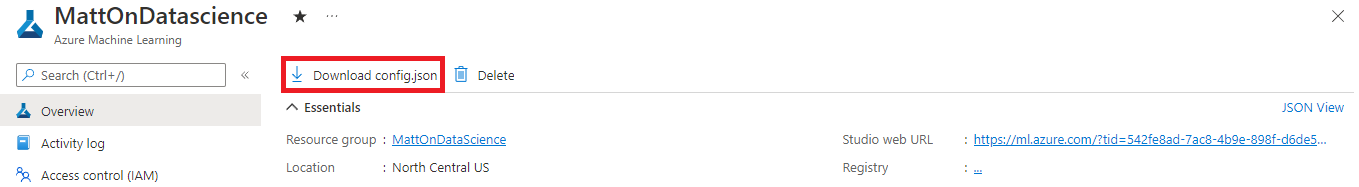

While this is a fairly simple file, you don’t need to create one yourself. Instead, navigate into your Machine Learning instance in the Azure Portal and click the Download config.json link in the upper left corner as pictured below:

This will download a completed config.json file that does not require any modifications.
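If you ever edit the file by hand or copy it between projects, a quick sanity check can catch a missing key before the SDK does. This is a small sketch using only the standard library; read_workspace_config is a hypothetical helper name, and the three required keys are the ones shown in the sample file above.

```python
import json
from pathlib import Path

# The three keys shown in the sample config.json above.
REQUIRED_KEYS = ("subscription_id", "resource_group", "workspace_name")


def read_workspace_config(path="config.json"):
    """Load a config.json file and confirm the three expected keys have values."""
    config = json.loads(Path(path).read_text(encoding="utf-8"))
    missing = [key for key in REQUIRED_KEYS if not config.get(key)]
    if missing:
        raise ValueError(f"{path} is missing: " + ", ".join(missing))
    return config
```

Calling read_workspace_config() with no arguments checks the config.json in the current working directory and raises a ValueError that names any key without a value.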

Next, you can connect to the workspace in Python via the following code:

from azureml.core import Workspace

# Connect to the workspace (requires config.json to be present)

ws = Workspace.from_config()

Azure Subscription IDs can be considered sensitive information, so if you store your code in a publicly visible repository, you may want to add config.json to your .gitignore file rather than tracking it in version control.

A nice benefit of the config.json approach is that running a data science experiment in a different workspace can be as simple as swapping which config.json file you’re using, with no code changes needed.
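One way to make that swap explicit is to keep one config file per workspace and pass the chosen file to from_config through its path parameter. The sketch below assumes a hypothetical layout of configs/dev.config.json, configs/prod.config.json, and so on; the directory name, file naming scheme, and helper names are my own inventions for illustration.

```python
from pathlib import Path


def config_path_for(environment, config_dir="configs"):
    """Return the config file for a named environment, e.g. configs/dev.config.json."""
    path = Path(config_dir) / f"{environment}.config.json"
    if not path.is_file():
        raise FileNotFoundError(f"No workspace config found at {path}")
    return path


def connect_to(environment, config_dir="configs"):
    """Connect to the workspace described by the chosen config file."""
    # Imported here so config_path_for can be used without the SDK installed.
    from azureml.core import Workspace

    return Workspace.from_config(path=str(config_path_for(environment, config_dir)))
```

With that layout in place, connect_to('dev') and connect_to('prod') would target different workspaces while the rest of your code stays the same.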

What exactly is a Workspace?

Okay, so now we have a Workspace instance. What exactly is it?

Well, in the Azure ML Python SDK, the Workspace is the central object you use to get other objects, track experiments, and otherwise interact with Azure.

To see some basic details about a workspace in Python, you could run the following code:

# Display high-level info on the workspace

print('Workspace name: ' + ws.name,

'Azure region: ' + ws.location,

'Resource group: ' + ws.resource_group, sep = '\n')

If you need additional details on your workspace, you can call the get_details() method to get a dict containing many key-value pairs on resources associated with the workspace. Those could be displayed in Python with the following code:

details = ws.get_details()

print(details)
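Printing a large dict on one line can be hard to read, so you may prefer to format it first. Here is a minimal sketch that pretty-prints any dict as indented JSON. The sample dict is a hypothetical stand-in with made-up contents, not the actual output of get_details(), and format_details is a helper name of my own.

```python
import json


def format_details(details):
    """Render a dict as indented JSON with sorted keys for easier reading."""
    # default=str keeps the call from failing on values JSON cannot encode.
    return json.dumps(details, indent=2, sort_keys=True, default=str)


# Hypothetical stand-in for the dict returned by ws.get_details()
sample_details = {"name": "MattOnDataScience", "location": "eastus2"}
print(format_details(sample_details))
```

In real use you would pass the result of ws.get_details() in place of the sample dict.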

What do I do with a Workspace?

Once you have access to your workspace, there are many things you can do from the SDK, including:

- Run a machine learning experiment

- Register a data set

- Register a trained model

- Load registered data sets

- Load registered models

- Download a trained model’s files for use outside of Azure

- Create compute resources

- Deploy trained models as endpoints

- View past experiment runs

- View model details, metrics, and explanations

Just to show you how simple it can be to work with a Workspace, the following code lists the names of all experiments (now called Jobs) that you have run in your workspace:

# ws.experiments is a dict keyed by experiment name, so iterating yields the names

for experiment in ws.experiments:
    print(experiment)

Another common task you may wind up doing with a Workspace is to get the blob storage container that acts as the default datastore for that workspace. This container stores datasets, trained models, logs, and more, and can be accessed via the following code:

# The default datastore is a blob storage container where datasets are stored

datastore = ws.get_default_datastore()

print('Default data store: ' + datastore.name)

As you can see, there’s not too much to connecting to a workspace, but this is a vital step in the process of working with machine learning on Azure via the Python SDK.

Let me know which aspects of the Azure ML Python SDK interest you the most and I’ll try to prioritize those pieces of content!